Great news ! Waiting for you to come back then !

If by any chance you have a linux system, I encourage you to use Agade’s Runner Arena : https://github.com/Agade09/CSB-Runner-Arena. It’s a great way to monitor progress for your Neural Networks.

You could be interested in the article called Importance of Machine Learning Applications in Various Spheres

I made a chatbot using a Neural Network. I basically copy pasted this tutorial and worked from there.

Architecture

It uses a Gated Recurrent Unit (GRU), which is a type of Recurrent Neural Network (RNN), to “eat” the characters in the chat one by one and output what it thinks the next character should be. I trained three versions on the world, fr and ru channels by teaching each one to predict the respective chat logs in the format “username:text” (timestamp removed). Then, whenever the bot is triggered to speak, I feed it “Bot_Name:” and it generates a sentence character by character, which I push onto the chat. The network doesn’t actually generate characters but probabilities for the next character; the next character is then sampled from that categorical distribution.
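The generation loop described above can be sketched in a few lines. This is only an illustration, not the bot's actual code: `toy_model` is a hypothetical stand-in for the trained GRU, and the names `sample_next_char`, `generate`, `max_len` and `end_char` are assumptions made for the example.

```python
import random

def sample_next_char(probs, rng=random):
    """Draw one character from a categorical distribution {char: probability}."""
    chars = list(probs)
    weights = [probs[c] for c in chars]
    return rng.choices(chars, weights=weights, k=1)[0]

def generate(next_char_probs, prompt, max_len=200, end_char="\n", rng=random):
    """Extend `prompt` one character at a time until `end_char` or `max_len`.

    `next_char_probs(text)` must return the distribution over the next
    character given everything written so far.
    """
    text = prompt
    while len(text) - len(prompt) < max_len:
        c = sample_next_char(next_char_probs(text), rng)
        if c == end_char:
            break
        text += c
    return text[len(prompt):]

# Hypothetical stand-in for the trained network (assumption, not the real GRU):
# it deterministically spells "hi" and then ends the line.
def toy_model(text):
    if text.endswith(":"):
        return {"h": 1.0}
    if text.endswith("h"):
        return {"i": 1.0}
    return {"\n": 1.0}

print(generate(toy_model, "Bot_Name:"))
```

With a real model, `next_char_probs` would run the recurrent network on the text so far (or keep its hidden state between calls) and return the softmax output.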

Motivation: Automaton2000

One motivation I had for this project, apart from personal interest, was to help bring back Automaton2000. Automaton2000 was taking too much RAM on Magus’ cheap dedicated server (1 GB of RAM): the Markov chain of all possible words had exceeded that limit. I made Magus a variable-length Markov chain C++ version (standalone and daemon) that consumes less RAM, but it still easily exceeds 1 GB. Magus took that work and made it consume even less RAM (github), but I suppose it wasn’t good enough. He also tried to store the tree on disk instead of in RAM, but it was too slow. A Neural Network seemed like the ultimate solution against RAM consumption because even large NNs may only take ~100 MB worth of weights (e.g. Keras applications networks for image classification). Potentially, a NN could also take context into account, like responding to people’s names and talking about the subject of the ongoing conversation, which a Markov chain cannot.

The training consumes a lot of RAM (GPU VRAM actually; training takes ~2 hours per channel on a 1070), but inference can be done on CPU, and the bot currently consumes roughly 300 MB of RAM plus ~20 MB for each channel. Generating sentences character by character is a bit slow, taking up to a second on my computer and several seconds on Magus’ server. Automaton2000 is currently back using this work.

Issues

One thing that is quite noticeable is the overuse of common words and phrases. One version liked to say “merde” a lot on the french channel. I guess the network just learns what is most common.

Future work (including suggestion from @inoryy)

The C++ version was bugged. It can’t work like that.

I think I’ll give it one last shot with a NoSQL database.

This isn’t directly related to CG, but the question of getting started with reinforcement learning gets brought up a lot, so figured I’d share my new blog post Deep Reinforcement Learning with TensorFlow 2.0.

In the blog post I give an overview of features in the upcoming major TensorFlow 2.0 release by implementing a popular DRL algorithm (A2C) from scratch. While the focus is on TensorFlow, I tried to make the blog post self-contained and accessible with regard to DRL theory, with a bird’s-eye overview of the methods involved.

Agade, I’m also interested in applying Q-learning to the problem. I’ve been assuming that you must extract data from the system to do training offline and then inject the learned weights and models. I haven’t seen a way to get data out of the system. However, reading your write-up above, it almost sounds like you are learning online. Is that the case?

@algae, you don’t need to extract data out of the system. You can play offline games with a simulator which is close enough to the real game, which is what @pb4 and I do. For example, to train a NN which controls a runner you just need a simulator which moves pods and passes checkpoints (no need to simulate more than one pod or pod collisions).
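To give an idea of how small such a runner-only simulator can be, here is a rough sketch. It is not the simulator used by Agade or pb4. The constants (18 degrees of rotation per turn, 0.85 friction, checkpoint radius 600) are the commonly quoted CSB values and should be treated as assumptions, and the sketch deliberately skips the game's rounding rules and only tests the checkpoint at the end of each turn.

```python
import math

# Assumed game constants (commonly quoted for CSB; verify against the real rules).
CHECKPOINT_RADIUS = 600.0
MAX_TURN_DEG = 18.0
FRICTION = 0.85

class Pod:
    def __init__(self, x, y, angle_deg=0.0):
        self.x, self.y = float(x), float(y)
        self.vx = self.vy = 0.0
        self.angle = angle_deg   # heading in degrees
        self.next_cp = 0         # index of the checkpoint to reach next
        self.passed = 0          # number of checkpoints passed so far

def step(pod, checkpoints, turn_deg, thrust):
    """Advance a single pod by one turn (no collisions, no other pods)."""
    # 1. Rotate, limited to MAX_TURN_DEG per turn.
    turn = max(-MAX_TURN_DEG, min(MAX_TURN_DEG, turn_deg))
    pod.angle = (pod.angle + turn) % 360.0
    # 2. Accelerate along the heading, then move.
    rad = math.radians(pod.angle)
    pod.vx += thrust * math.cos(rad)
    pod.vy += thrust * math.sin(rad)
    pod.x += pod.vx
    pod.y += pod.vy
    # 3. Apply friction.
    pod.vx *= FRICTION
    pod.vy *= FRICTION
    # 4. Simplified checkpoint test on the end-of-turn position only.
    cx, cy = checkpoints[pod.next_cp]
    if math.hypot(pod.x - cx, pod.y - cy) <= CHECKPOINT_RADIUS:
        pod.next_cp = (pod.next_cp + 1) % len(checkpoints)
        pod.passed += 1

track = [(1000, 0), (0, 0)]
pod = Pod(0, 0)
for _ in range(3):
    step(pod, track, turn_deg=0.0, thrust=100.0)
print(pod.passed, round(pod.x, 2))
```

A training loop would call `step` with the action chosen by the network and derive a reward from `pod.passed` or from the distance to the next checkpoint.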

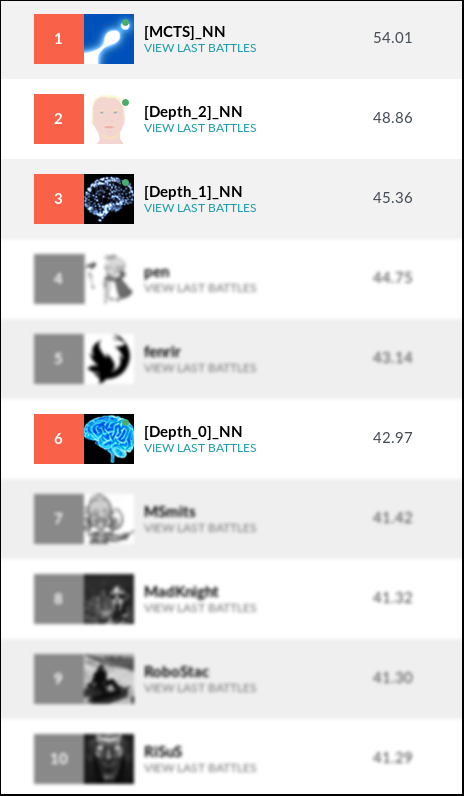

As some of you know, @pb4 and I have been working on a NN based reinforcement learning AI on CSB. We have finished a write-up and are pleased to present Mastering Coders Strike Back with Nash-DQN.

Thank you for this gem.

I have some questions as I’m not quite as intelligent as you two:

You train the runner because you have a training set with good trajectories to pass checkpoints.

But on which training set can you train the blocker ?

I don’t get the difference between what you call depth 0 and depth 1 implementation.

Just to be sure, online on codingame the neural network is simply an evaluation function, right ?

What are the one or two best tutorials you ever did on Q-learning ?

I’m sure more will come from me or others

Thanks !

Hi,

I made the runner dataset a long time ago, and never bothered to make one for a blocker. Those datasets would only be applied to supervised learning, which is a different approach compared to the Q-Learning described in the article.

Depth 0 : Read the state. Feed it to the neural network. The neural network has 49 outputs corresponding to one pair of actions each. Use the iterative matrix-game solver algorithm to find the optimal mixed strategy for our bot.

Depth 1 : Simulate all possible actions by my pod and the opponent. For each pair of actions : feed the simulated state to the network, read the output of the network and calculate the expected future rewards, this is the evaluation of the pair of actions. Upon constructing the table which evaluates all pairs of actions, use the iterative matrix-game solver algorithm linked in the article to find the optimal mixed strategy for our bot.
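Both variants end by solving a small zero-sum matrix game. As an illustration, the sketch below uses fictitious play, one classic iterative method; it is not necessarily the solver linked in the article. The row-major layout of the 49 outputs (own action by row, opponent action by column) and the names `to_matrix` and `solve_matrix_game` are assumptions made for the example.

```python
def to_matrix(outputs, n=7):
    """Reshape a flat list of n*n values into an n x n payoff table.

    Assumption: row-major order, rows = our action, columns = opponent action.
    """
    return [list(outputs[i * n:(i + 1) * n]) for i in range(n)]

def solve_matrix_game(payoff, iterations=10000):
    """Approximate the row player's optimal mixed strategy by fictitious play.

    The row player maximizes payoff[i][j]; the column player minimizes it.
    Each iteration, both players best-respond to the other's past plays.
    """
    n_rows, n_cols = len(payoff), len(payoff[0])
    row_counts = [0] * n_rows
    row_payoff = [0.0] * n_rows   # cumulative payoff of each row vs. opponent history
    col_payoff = [0.0] * n_cols   # cumulative payoff of each column vs. our history
    r, c = 0, 0                   # arbitrary first plays
    for _ in range(iterations):
        row_counts[r] += 1
        for i in range(n_rows):
            row_payoff[i] += payoff[i][c]
        for j in range(n_cols):
            col_payoff[j] += payoff[r][j]
        r = max(range(n_rows), key=row_payoff.__getitem__)
        c = min(range(n_cols), key=col_payoff.__getitem__)
    # The empirical frequency of our plays approximates the mixed strategy.
    return [k / iterations for k in row_counts]

# Matching pennies: the optimal strategy mixes both actions equally.
print(solve_matrix_game([[1, -1], [-1, 1]]))
```

At depth 0 the table would be `to_matrix(network_outputs)`; at depth 1 each entry would instead come from simulating the pair of actions and evaluating the resulting state with the network.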

Pretty much, yes. We use it to evaluate actions or a state depending on the algorithm in which it is plugged (depth 0, depth 1, depth 2, MCTS).

Not really a tutorial, but I liked this one.

Don’t hesitate if you have any more questions !

Thank you !

Hey I saw the link ! Cool I’m on the right track

So on depth 1, 2 or MCTS, the NN always has 49 outputs ?

The part where we train the NN is totally unrelated to the part where we use the NN.

So yes: in all different versions (depth 0, 1, 2, MCTS) the same NN was used, with the same 49 outputs.

Hi,

My current bot for CSB is also a NN but I used a different approach than Agade and pb4.

My NN directly outputs a joint policy for both of my bots (6 choices for each bot, so 6x6 for the joint policy) and I sample this policy at each turn. I have no search algorithm on top of it. I do only one NN evaluation per turn.
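Sampling from such a joint policy can be sketched as follows. This is a guess at the general shape, not Fenrir's code: the flat 36-entry layout (first pod's choice = index // 6, second pod's choice = index % 6) and the function names are assumptions.

```python
import math
import random

N_ACTIONS = 6  # choices per pod, so 6 x 6 = 36 joint actions

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sample_joint_action(logits, rng=random):
    """Sample one of the 36 joint actions and split it into one action per pod.

    Assumed layout: index = action_pod1 * N_ACTIONS + action_pod2.
    """
    probs = softmax(logits)
    idx = rng.choices(range(len(probs)), weights=probs, k=1)[0]
    return divmod(idx, N_ACTIONS)

# Example with made-up logits standing in for the policy head's raw outputs.
logits = [0.0] * (N_ACTIONS * N_ACTIONS)
print(sample_joint_action(logits))
```

Sampling a joint action, rather than one action per pod independently, lets the policy express coordination between the two pods.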

For the NN training, I used a policy gradient algorithm: A2C. A few points:

For the rewards:

My NN has 80 inputs, 1 + 36 outputs, 4 hidden layers using leaky relu (1 common + 3 per output head), tanh for the value, and softmax for the policy.

To have a submitted bot below 100k, I do a final training pass using weight quantization down to 7 bits with a quantization ‘aware’ gradient descent with a reduced learning rate and entropy.

It allows shrinking the NN from ~200k of binary floats to a base85-encoded string of ~60k used by my code (in C) while keeping quite good accuracy.
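The storage side of this idea can be illustrated with a small sketch: quantize each weight to a 7-bit code, pack the codes tightly, and base85-encode the bytes. It does not cover the quantization-aware training pass, and the uniform min/max scheme and all function names are assumptions, not Fenrir's actual format.

```python
import base64

BITS = 7
LEVELS = (1 << BITS) - 1  # 127, the largest 7-bit code

def quantize(weights):
    """Map floats to integer codes in [0, 127] with a uniform min/max scale."""
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / LEVELS or 1.0  # avoid a zero scale if all weights are equal
    codes = [min(LEVELS, max(0, round((w - lo) / scale))) for w in weights]
    return codes, lo, scale

def pack(codes):
    """Concatenate the 7-bit codes into a byte string (no wasted bit per weight)."""
    value = 0
    for c in codes:
        value = (value << BITS) | c
    return value.to_bytes((len(codes) * BITS + 7) // 8, "big")

def unpack(data, count):
    """Inverse of pack: recover `count` 7-bit codes."""
    value = int.from_bytes(data, "big")
    return [(value >> (BITS * (count - 1 - i))) & LEVELS for i in range(count)]

def encode(weights):
    """Return (base85 text, lo, scale) for a list of float weights."""
    codes, lo, scale = quantize(weights)
    return base64.b85encode(pack(codes)).decode("ascii"), lo, scale

def decode(text, count, lo, scale):
    """Rebuild approximate float weights from the base85 text."""
    codes = unpack(base64.b85decode(text), count)
    return [lo + c * scale for c in codes]

weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
text, lo, scale = encode(weights)
print(text, decode(text, len(weights), lo, scale))
```

As a rough size check under these assumptions, 7 bits per weight plus the 5-characters-per-4-bytes overhead of base85 costs about 1.1 characters per weight, compared with 4 bytes for a 32-bit float.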

Good game to Agade and pb4 and thanks a lot for your article. I did try multiple times to do Nash/CE Q-learning, but I never succeeded in getting anything to converge.

Don’t hesitate if you have any questions.

For Pb4, Agade and Fenrir,

What do you use for the NN: Python, C++, Caffe, TensorFlow, etc ?

@pb4 and I used C++ without libraries. From speaking with Fenrir I believe he did as well.

I made a working version of my double-runner Q-learning bot in Python+TensorFlow last year but I was not able to get reasonable performance; it was orders of magnitude slower than my C++. I surely did not code it optimally though.

Okay !

So the first step is not a Q learning tutorial, but a “Write your own kickass NN in C++” tutorial

If it’s a learning experiment, do write your own NN code. You’ll know it in and out, and be more comfortable adapting it. The drawbacks are that you’ll have to make sure it’s not bugged and you can’t easily use “fancy” stuff such as dropout, multi-head networks, etc…

One thing I wish I had known : you’ll find much more stuff with which to start in Python. See for example, state of the art DQN ready-made implementation: https://github.com/Kaixhin/Rainbow

I wish I could compare my DQN implementation to Rainbow…

For learning purposes, I wrote my own NN code (in C). One of the drawbacks is that it is difficult to be sure you have something without too many bugs: NNs are quite resilient to buggy code (but with suboptimal results)…

For those interested, here is my implementation of a neural network in C++ https://github.com/jolindien-git/Neural-Networks

This remains a beta version (so possibly with bugs) and is not optimized. But it seems to work correctly with the few examples I have tested.