Hi,

I finished #5 overall; here is a description of my AI:

#Introduction

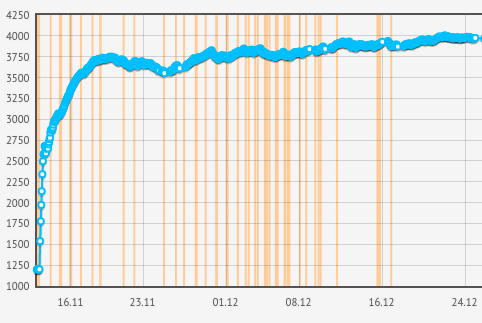

I joined the contest one week after it started. At first glance I was afraid that this would be a second GITC, i.e. a contest in which a pure heuristic beats everything else. After a longer look at the top games, it appeared that this wasn’t the case: unlike GITC, there is quick and direct interaction between the two opponents. R4N4R4M4 proved that heuristics belong at the top too, but it seemed that the meta would revolve around simulation-based solutions.

Almost every multiplayer game fits into one of two main categories: PvP and PvE.

PvP : TRON, CSB, WW, …

PvE : CR, STC, HS, …

For PvP games, minmax seems to be the right choice of algorithm, whereas GA/MC/SA fit PvE games better. There are exceptions, but it’s often the best choice imho.

This game belongs in the PvP category because of the previously mentioned direct and close interaction between the two players, so I decided to use minmax.

#Move selection

The branching factor of the game is pretty huge and I spent a few days trying to figure out how to select the valid moves to simulate; you obviously can’t simulate every possible scenario, even at depth 1.

Looking at a few games in the leaderboard I noticed that Robostac was only playing 1/4 or 5/0 moves, and nothing else. This seemed to work well (he had first place at the time) so I did the same.

The first version of my minmax would select the N best planets based on a score computed with a heuristic, and simulate every possible 1/4 or 5/0 move on those planets, N being a constant. This kind of worked, but the selection could still be improved: with N too small I would miss some crucial moves, and with N too big I would be stuck at depth 1.

I switched back and forth between two approaches:

##Generate lots of moves and stay depth 1

Agade told me that he was using iterative widening: he keeps his minmax capped at depth 1, but iteratively widens the set of selected moves. I liked this idea and stole it (thanks). I used the same principle as before, increasing the value of N to take more and more planets into account on each pass, stopping when I ran out of time, and keeping the last complete result.
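The widening loop can be sketched as follows. This is only an illustration under assumptions: `SearchResult`, `searchTopN` and the `startN`/`maxN` bounds are hypothetical names, and `searchTopN` stands for a depth-1 minmax over the N best planets that returns false when it was interrupted by the time limit.

```cpp
#include <cassert>
#include <functional>

// Hypothetical summary of one complete depth-1 pass.
struct SearchResult {
    int width;  // number of planets considered by this pass
    int score;  // minmax value of the best move found
};

// Runs wider and wider depth-1 searches and keeps the last pass that
// finished. Returns false if not even the first pass completed.
bool iterativeWidening(const std::function<bool(int, SearchResult&)>& searchTopN,
                       int startN, int maxN, SearchResult& best) {
    assert(startN >= 1 && startN <= maxN);
    bool found = false;
    for (int n = startN; n <= maxN; ++n) {
        SearchResult candidate{};
        if (!searchTopN(n, candidate)) break;  // out of time: drop the partial pass
        best = candidate;                      // keep the last complete result
        found = true;
    }
    return found;
}
```

The important detail is that an interrupted pass is thrown away entirely, since a partial pass may not have examined the best replies yet.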

##Generate as few moves as possible and reach depth 2 as often as possible

I noticed that Petra was pruning even harder and only played 5/0 moves. I tried that too, and that would allow my AI to reach depth 2 or 3 quite often, even though in some scenarios it wouldn’t allow me to colonize the map as fast as the enemy.

##Final move generation

Both approaches had pros and cons, but the move selection that worked best was:

1. Select all the planets that are worth simulating: every reachable neutral planet, every reachable enemy planet, and every owned planet that is in direct contact with an enemy planet.
2. If more than 15 planets are selected, sort them by score (based on the number of owned neighbors and the pathThrough ratio, which is explained in the next part) and keep the top 15.
3. Generate all 1/4, 4/1, 2/3, 3/2 and 5/0 moves on those.
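The first two steps (filter, then cap at 15) could look roughly like this. It is a sketch under assumptions: the `Planet` fields, the `reachable` and `adjacentToEnemy` flags and the precomputed `score` are hypothetical stand-ins for whatever the real AI stores, and the move generation itself is left out.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

enum class Owner { Neutral, Me, Enemy };

// Hypothetical per-planet data; the real structure is not shown in the post.
struct Planet {
    int id;
    Owner owner;
    bool reachable;        // assumed flag: I can send units there this turn
    bool adjacentToEnemy;  // has at least one enemy-owned neighbor
    double score;          // assumed precomputed heuristic score
};

std::vector<Planet> selectPlanets(const std::vector<Planet>& planets,
                                  std::size_t maxPlanets = 15) {
    assert(maxPlanets > 0);
    std::vector<Planet> selected;
    for (const Planet& p : planets) {
        // Owned planets count only on the front line; others must be reachable.
        const bool worth = (p.owner == Owner::Me) ? p.adjacentToEnemy : p.reachable;
        if (worth) selected.push_back(p);
    }
    if (selected.size() > maxPlanets) {
        // Best score first, then drop everything past the cap.
        std::stable_sort(selected.begin(), selected.end(),
                         [](const Planet& a, const Planet& b) { return a.score > b.score; });
        selected.erase(selected.begin() + static_cast<std::ptrdiff_t>(maxPlanets),
                       selected.end());
    }
    return selected;
}
```

Sorting only when the cap is exceeded keeps the common case cheap, which matters when this runs at every node of the search.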

That solution didn’t allow me to reach depth 2 often, but in the end it seems that reaching depth 2 wasn’t as important as simulating a bigger subset of moves.

I also used killer-move and (delta)hash-move to prune my alpha-beta in a more efficient way.

#Evaluation

The map is a graph and not every planet is worth the same.

The metric I used for that was the pathThroughRatio.

For every planet, I first compute the pathThrough. It is the number of times a planet is on the shortest path between two other planets.

I then normalize this number, i.e. I compute a ratio in [0; 1] for every planet such that:

planet.pathThroughRatio = planet.pathThrough / biggestPathThrough
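One possible way to compute this is sketched below. It assumes an unweighted graph stored as adjacency lists, and it counts a planet once for every pair of other planets that has at least one shortest path through it; how ties between several shortest paths were handled in the real AI is not stated, so that part is an assumption. `Graph`, `bfsDistances` and `pathThroughRatios` are illustrative names.

```cpp
#include <algorithm>
#include <cassert>
#include <queue>
#include <vector>

using Graph = std::vector<std::vector<int>>;  // adjacency lists, one per planet

// Unweighted shortest-path distances from `source` (-1 = unreachable).
std::vector<int> bfsDistances(const Graph& g, int source) {
    assert(source >= 0 && source < static_cast<int>(g.size()));
    std::vector<int> dist(g.size(), -1);
    std::queue<int> q;
    dist[source] = 0;
    q.push(source);
    while (!q.empty()) {
        const int u = q.front();
        q.pop();
        for (int v : g[u]) {
            if (dist[v] < 0) {
                dist[v] = dist[u] + 1;
                q.push(v);
            }
        }
    }
    return dist;
}

std::vector<double> pathThroughRatios(const Graph& g) {
    const int n = static_cast<int>(g.size());
    std::vector<double> ratio(n, 0.0);
    if (n == 0) return ratio;

    std::vector<std::vector<int>> d;
    for (int s = 0; s < n; ++s) d.push_back(bfsDistances(g, s));

    // v lies on a shortest s-t path iff d(s,v) + d(v,t) == d(s,t).
    std::vector<int> pathThrough(n, 0);
    for (int s = 0; s < n; ++s) {
        for (int t = s + 1; t < n; ++t) {
            if (d[s][t] < 0) continue;
            for (int v = 0; v < n; ++v) {
                if (v == s || v == t || d[s][v] < 0 || d[v][t] < 0) continue;
                if (d[s][v] + d[v][t] == d[s][t]) ++pathThrough[v];
            }
        }
    }

    const int biggest = *std::max_element(pathThrough.begin(), pathThrough.end());
    if (biggest == 0) return ratio;  // no planet lies between two others
    for (int v = 0; v < n; ++v) ratio[v] = static_cast<double>(pathThrough[v]) / biggest;
    return ratio;
}
```

On a line of five planets 0-1-2-3-4, the middle planet gets a ratio of 1, its two neighbors 0.75, and the two ends 0. The map never changes, so this only needs to run once on the first turn.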

I also used Voronoi to count how many neutrals were closer to me than to the enemy.
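A minimal sketch of such a Voronoi count, assuming plain graph distance from the nearest owned planet and ignoring ties (a neutral planet at equal distance from both players counts for nobody). Those two choices and all the names here are assumptions, not details taken from the real AI.

```cpp
#include <cassert>
#include <queue>
#include <vector>

enum class Owner { Neutral, Me, Enemy };
using Graph = std::vector<std::vector<int>>;  // adjacency lists

// Distance from every planet to the nearest planet owned by `who` (-1 = none).
std::vector<int> distanceToNearest(const Graph& g, const std::vector<Owner>& owner, Owner who) {
    std::vector<int> dist(g.size(), -1);
    std::queue<int> q;
    for (int v = 0; v < static_cast<int>(g.size()); ++v) {
        if (owner[v] == who) {
            dist[v] = 0;  // multi-source BFS: every owned planet is a source
            q.push(v);
        }
    }
    while (!q.empty()) {
        const int u = q.front();
        q.pop();
        for (int v : g[u]) {
            if (dist[v] < 0) {
                dist[v] = dist[u] + 1;
                q.push(v);
            }
        }
    }
    return dist;
}

struct VoronoiCount {
    int me;
    int him;
};

VoronoiCount countVoronoi(const Graph& g, const std::vector<Owner>& owner) {
    assert(owner.size() == g.size());
    const std::vector<int> dMe = distanceToNearest(g, owner, Owner::Me);
    const std::vector<int> dHim = distanceToNearest(g, owner, Owner::Enemy);
    VoronoiCount count{0, 0};
    for (int v = 0; v < static_cast<int>(g.size()); ++v) {
        if (owner[v] != Owner::Neutral) continue;
        const bool iReach = dMe[v] >= 0;
        const bool heReaches = dHim[v] >= 0;
        if (iReach && (!heReaches || dMe[v] < dHim[v])) ++count.me;
        else if (heReaches && (!iReach || dHim[v] < dMe[v])) ++count.him;
    }
    return count;
}
```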

Using both of those metrics, the evaluation function is quite simple:

    for (Planet planet : planets) {
        // tolerance and unit differences, weighted by how central the planet is
        score += (1 + 2 * planet.pathThroughRatio) * ((planet.tolerance[me] - planet.tolerance[him]) + (planet.units[me] - planet.units[him]));
        if (!neutral) { // neutral / mine describe the owner of the current planet
            score += (1 + 2 * planet.pathThroughRatio) * ownBonus * (mine ? 1 : -1);
        }
    }
    score += 10 * (voronoi[me] - voronoi[him]);

ownBonus is the weight I give to owning a planet. Its value is 10 for most of the game, and it is progressively raised to a maximum of 1000 over the last 10 turns:

…, 10, 10, 100, 200, 300, …, 1000
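Read literally, that schedule is a step function of the number of turns left. A tiny sketch, assuming `turnsLeft` counts the current turn (so it equals 1 on the very last turn); that indexing is an assumption, as the exact turn at which the ramp starts is not stated.

```cpp
#include <cassert>

// Weight for owning a planet: 10 until the last 10 turns,
// then 100, 200, ..., 1000 on the final turn.
int ownBonus(int turnsLeft) {
    assert(turnsLeft >= 1);
    if (turnsLeft > 10) return 10;
    return 100 * (11 - turnsLeft);
}
```

With this indexing, ownBonus(11) is 10, ownBonus(10) is 100 and ownBonus(1) is 1000.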

#Choke contest

A large portion of the maps (I’d say half of them, but I haven’t measured) are based on a choke contest. On these maps, each player starts in a corner of a semi-symmetrical map, and a large part of the map is only accessible through a single planet:

In this example, 14 is the choke, and the first player to own that planet will most likely win the game, since it will allow him to colonize the top part of the map.

The first moves played on this kind of map are crucial; a suboptimal move can lead to a tolerance difference of 1 or a spread delayed by one turn, and to the loss of this planet.

My initial evaluation function could not detect those optimal moves, so I added a small part dedicated to these special cases:

First, detect these choke planets on the first turn: the choke is the articulation point (i.e. remove the planet and the graph is no longer connected) that is equidistant from both players’ starting positions. If multiple planets are eligible, take the one that is closest to the starting positions.
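The detection step can be sketched as below. This version tests each planet by removing it and checking connectivity with a BFS, which is simple rather than optimal (Tarjan's algorithm finds all articulation points in one pass); it is not necessarily what the real AI does. It assumes the map is connected to begin with, and `bfs`, `isArticulation` and `findChoke` are illustrative names.

```cpp
#include <cassert>
#include <queue>
#include <vector>

using Graph = std::vector<std::vector<int>>;  // adjacency lists

// BFS distances from `source`, never entering `removed` (-1 removes nothing).
std::vector<int> bfs(const Graph& g, int source, int removed) {
    assert(source != removed);
    std::vector<int> dist(g.size(), -1);
    std::queue<int> q;
    dist[source] = 0;
    q.push(source);
    while (!q.empty()) {
        const int u = q.front();
        q.pop();
        for (int v : g[u]) {
            if (v != removed && dist[v] < 0) {
                dist[v] = dist[u] + 1;
                q.push(v);
            }
        }
    }
    return dist;
}

// True if removing v disconnects the (initially connected) graph.
bool isArticulation(const Graph& g, int v) {
    const int n = static_cast<int>(g.size());
    if (n <= 2) return false;
    const int start = (v == 0) ? 1 : 0;
    const std::vector<int> dist = bfs(g, start, v);
    for (int u = 0; u < n; ++u) {
        if (u != v && dist[u] < 0) return true;
    }
    return false;
}

// Closest articulation point equidistant from both starts, or -1 if none.
int findChoke(const Graph& g, int myStart, int hisStart) {
    const std::vector<int> dMe = bfs(g, myStart, -1);
    const std::vector<int> dHim = bfs(g, hisStart, -1);
    int best = -1;
    for (int v = 0; v < static_cast<int>(g.size()); ++v) {
        if (v == myStart || v == hisStart) continue;
        if (dMe[v] < 0 || dMe[v] != dHim[v]) continue;
        if (!isArticulation(g, v)) continue;
        if (best < 0 || dMe[v] < dMe[best]) best = v;
    }
    return best;
}
```

Since this runs once on the first turn, the quadratic cost of the naive removal test should not be a concern on maps of this size.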

In the evaluation, I added:

    if (planet.isArticulation && neutral) { // choke contest
        int chokeDiff = 0;
        for (Planet otherPlanet : planet.neighborsOrderedByDistance) {
            int d = distance(planet, otherPlanet); // named d so it does not shadow distance()
            if (d > 3) break; // only considers the planets in a radius of 3
            if (otherPlanet.owner == me) {
                chokeDiff += (otherPlanet.units[me] + 6 * otherPlanet.tolerance[me]) * reinforcementBonusPerD[d];
            } else if (otherPlanet.owner == him) {
                chokeDiff -= (otherPlanet.units[him] + 6 * otherPlanet.tolerance[him]) * reinforcementBonusPerD[d];
            }
        }
        score += chokeDiff;
    }

    [...]

    const array<int, 4> reinforcementBonusPerD = {0, 100, 10, 1}; // indexed by distance to the choke

The reinforcementBonusPerD is meant to give more value to 1 unit at distance 1 than to 5 units at distance 2. That way, my AI gathers units close to the choke by spreading neighbors, allowing a future spread towards the choke, etc.
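To make the weighting concrete, here is the per-planet term isolated into a small helper. `chokeContribution` is a hypothetical name; the formula and the table are the ones from the evaluation snippet.

```cpp
#include <array>
#include <cassert>

const std::array<int, 4> reinforcementBonusPerD = {0, 100, 10, 1};

// Contribution of one planet to chokeDiff, given its distance to the choke.
int chokeContribution(int units, int tolerance, int distance) {
    assert(distance >= 0);
    if (distance > 3) return 0;  // outside the radius of 3
    return (units + 6 * tolerance) * reinforcementBonusPerD[distance];
}
```

Ignoring tolerance, 1 unit at distance 1 is worth 1 * 100 = 100, while 5 units at distance 2 are worth 5 * 10 = 50, so the evaluation prefers the single adjacent unit.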

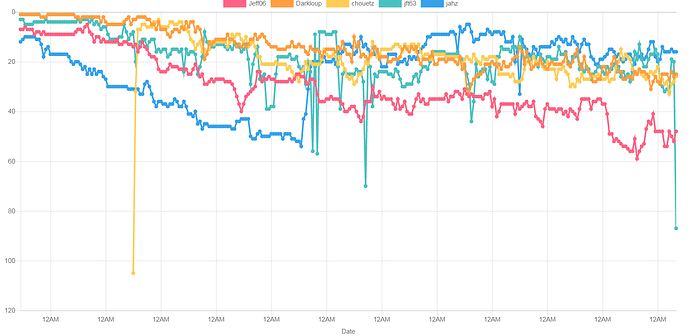

This tiny piece of evaluation is worth a lot of ELO (the map pool was unbalanced imo).

#Conclusion

Very interesting game that shows that deep games do not need thousands of mechanics and a 10-page statement (yeah, I’m talking about the past 3 CC, even though I know that designing a game is really hard). Congrats on the design, CG team!

Two bad points though:

The lack of rerun. Two weeks of work ranked on such a small number of games is painful, and demotivating. There’s a big chance that this will always be that way for semi-private contests, but still, it hurts.

AI hiding. Please fix this, this is getting ridiculous. Every IDE game replay is already saved, just make them public; I’m sure the community will be ready to develop its own tools to explore/filter these if that costs too much on your side.

Thanks !

(well done btw Adrien for the tool)
