k4ng0u - 25th in Legend (Python 3).

This was my second contest, the first one being CalM. I really enjoyed the fact that the rules were simple and thanks to this it was easy to understand the different strategies and their flaws.

The starter kit was really (too?) well done as it split the phases of a turn (read inputs and update game state, train, move, output result) and had basic implementations for each phase. Even in my final code I more or less kept the same phases.

To get started I made my units move to opponent HQ instead of the map center and this instantly sent me to wood 3.

A flood fill algorithm to train and move units pushed me to Bronze by Friday evening. At this point I went on working on my bot even though I think that a good floodfill algorithm might reach silver league without towers/level 2-3 units.
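For readers who have not written one, here is a minimal sketch of a breadth-first flood fill, not my actual contest code. It assumes a hypothetical map encoding (a list of strings where `#` is a void cell) and returns the number of steps from a start cell to every reachable cell, which is the kind of distance map you can use to decide where to move or train next.

```python
from collections import deque


def flood_fill_distances(grid, start):
    """Breadth-first flood fill: steps from `start` to every reachable cell.

    `grid` is a list of equal-length strings; '#' marks a void cell
    (hypothetical encoding chosen for this sketch). Cells are (x, y) tuples.
    """
    height, width = len(grid), len(grid[0])
    dist = {start: 0}
    queue = deque([start])
    while queue:
        x, y = queue.popleft()
        for dx, dy in ((1, 0), (0, 1), (-1, 0), (0, -1)):
            nx, ny = x + dx, y + dy
            if not (0 <= nx < width and 0 <= ny < height):
                continue  # outside the map
            if grid[ny][nx] == '#' or (nx, ny) in dist:
                continue  # void cell or already reached
            dist[(nx, ny)] = dist[(x, y)] + 1
            queue.append((nx, ny))
    return dist
```

Calling it once from the opponent HQ gives every cell its distance to the target, so each unit only has to pick the free neighbour with the smallest value.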

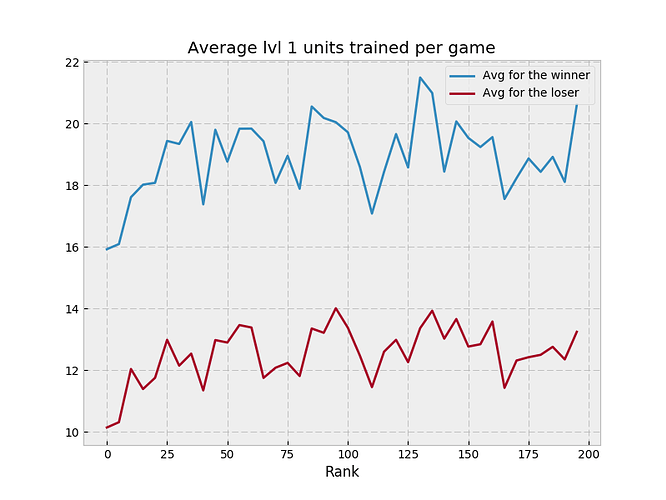

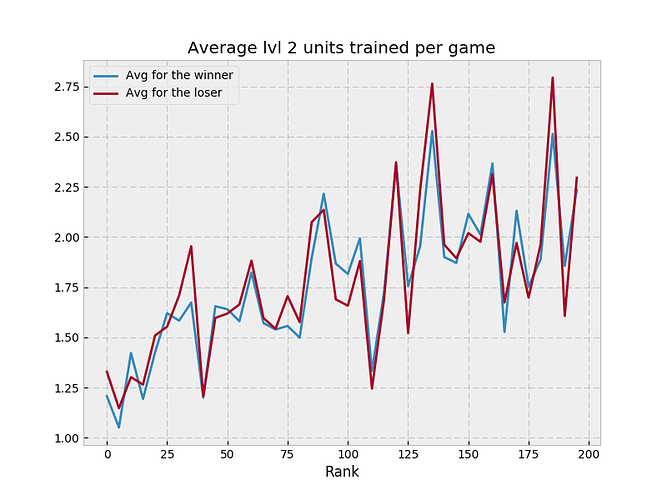

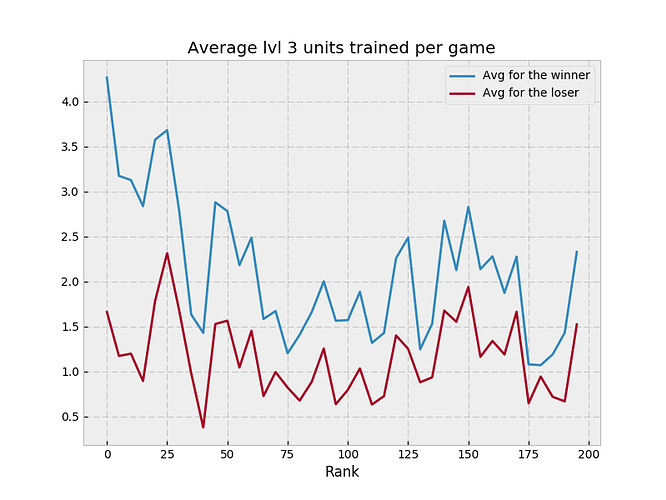

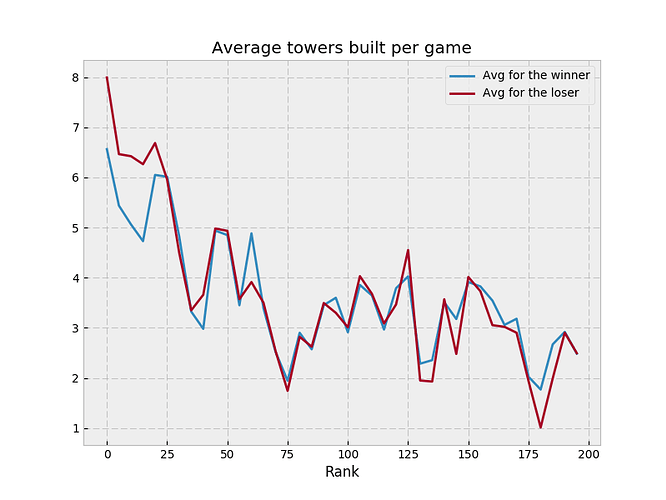

My next “improvement” was to build level 2-3 units to destroy enemy units whenever they were close to my territory. This didn’t work too well as it depleted my gold and reduced my income so drastically that I wasn’t able to do anything as long as the costly units were standing. This made me realize the (over)power of towers (cheap and no upkeep). So I decided to put towers on all cells at a distance of 2 from the enemy border. With this, my bot was a solid top 50 and reached Silver league.

After that my bot slowly dropped in the ranking. This is when I noticed that I was losing a few games I was totally dominating in terms of territory. This was due to what I call the “finisher” move (and the fact that my bot didn’t know how to handle areas protected by turrets). Some bots were saving gold to spend it all in one turn and finish the game without caring about upkeep. While stalling the game isn’t always good, being able to know how much gold is needed to win in one turn is quite interesting. I used a Dijkstra from my HQ taking into account the cost to access each cell: 0 for my cells, 10 for an empty cell, 20 for a cell with an enemy lvl1 unit, 30 for a cell with an enemy lvl2/3 unit or protected by a tower. I had a protection dict to indicate for each cell how many towers were protecting it. During the Dijkstra, each time a cell was visited, if it was a tower, I updated the protection dict associated with the path so that the cost of the next moves took the tower destruction into account. The path computation takes an underwhelming 0.8ms. I wonder if my algorithm is particularly bad or if it’s just Python being very slow. Anyway, thanks to the finisher move, I was now winning most of the games where stalling was involved.
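To make the cost idea concrete, here is a simplified sketch of such a Dijkstra. It is not my contest code: the grid encoding (`O` for my cells, `.` for empty, `1`/`2`/`3` for enemy unit levels, `T` for tower-protected, `#` for void) and the name `finisher_cost` are assumptions made for the example, and it deliberately leaves out the per-path protection dict, so a tower-protected cell always costs 30 even after its tower would have been destroyed earlier on the path.

```python
import heapq

# Gold needed to step onto each kind of cell (costs taken from the post;
# the one-character encoding is a hypothetical choice for this sketch).
COST = {'O': 0, '.': 10, '1': 20, '2': 30, '3': 30, 'T': 30}


def finisher_cost(grid, my_hq, opp_hq):
    """Cheapest total gold to chain units from `my_hq` to `opp_hq`.

    Returns None when the opponent HQ cannot be reached.
    """
    height, width = len(grid), len(grid[0])
    best = {my_hq: 0}
    heap = [(0, my_hq)]
    while heap:
        cost, (x, y) = heapq.heappop(heap)
        if (x, y) == opp_hq:
            return cost
        if cost > best[(x, y)]:
            continue  # stale heap entry, a cheaper route was found already
        for dx, dy in ((1, 0), (0, 1), (-1, 0), (0, -1)):
            nx, ny = x + dx, y + dy
            if not (0 <= nx < width and 0 <= ny < height):
                continue
            step = COST.get(grid[ny][nx])
            if step is None:
                continue  # void cell
            new_cost = cost + step
            if new_cost < best.get((nx, ny), float('inf')):
                best[(nx, ny)] = new_cost
                heapq.heappush(heap, (new_cost, (nx, ny)))
    return None
```

The finisher is then playable whenever the returned cost is not `None` and is at most the gold currently available.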

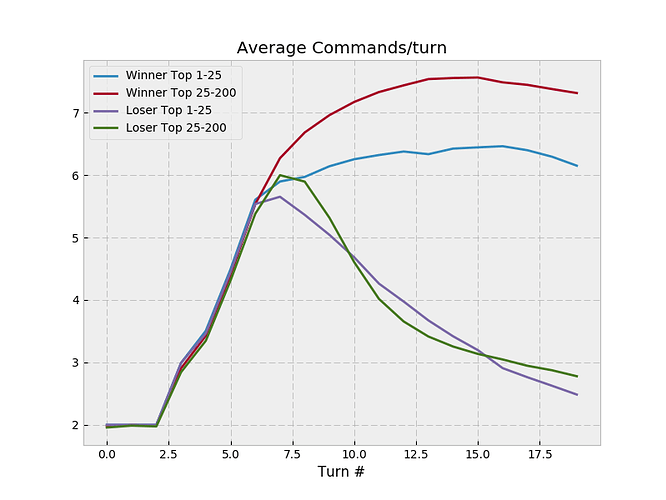

On gold league opening, my game phases were as follows and it never changed afterwards:

_Move units (floodfill, try to avoid blocking other units)

_Play Finisher (if we can finish the game, finish it)

_Check if game can be finished next turn (based on opp hq distance to mine, my gold, and my income). If it can, skip the next phases (save money!)

_Play cuts (my initial basic implementation was just to train lvl3 units on top of opponent lvl3 units)

_Build towers

_Train units

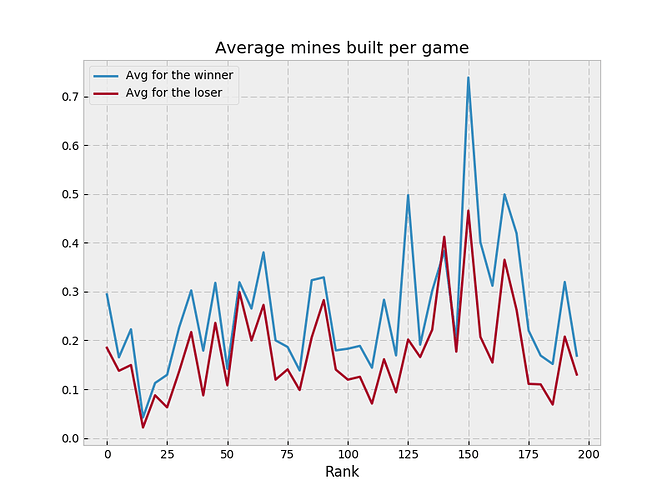

_Build mines (build mines closest to my hq with the gold left if my income is less than the opponent income)
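The “can the game be finished next turn” check in the phase list can be sketched roughly as below. This is only an approximation under stated assumptions: it prices the chain at 10 gold per cell (level 1 units on every cell, no towers or stronger units in the way), and the function and parameter names are hypothetical. How `distance` is measured is left to the caller.

```python
LEVEL1_COST = 10  # gold for one level 1 unit


def should_save_for_finisher(gold, income, distance):
    """True when next turn's gold could pay for the cheapest possible chain.

    `distance` is the number of cells that still have to be captured to
    reach the opponent HQ (assumption: each one costs a level 1 unit).
    """
    cheapest_chain = LEVEL1_COST * distance
    return gold + income >= cheapest_chain
```

When it returns `True`, the remaining phases (cuts, towers, training, mines) are skipped so the gold is still there on the next turn.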

From Wednesday to Friday my ranking dropped to between 70 and 110. I had 3 points that were really not going well:

1)cuts => many opponents were cutting my territory but I didn’t cut theirs.

2)floodfill => my opponents were consistently spreading further than me.

3)tower building => Building towers on cells at distance 2 from the enemy border was wasting way too much money.

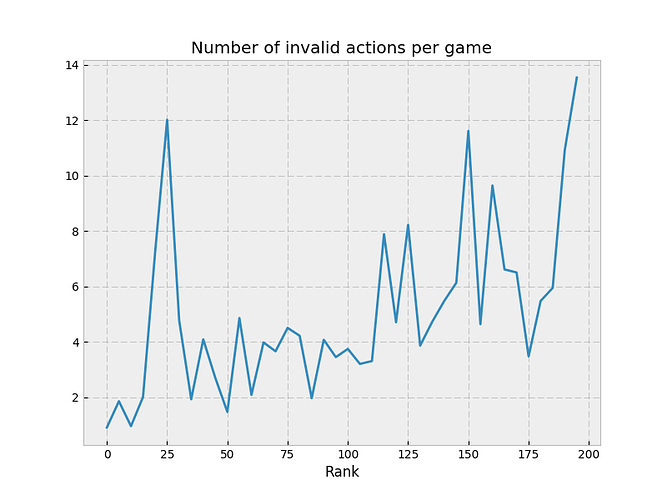

I wasn’t very motivated because I had no clue how to improve points 2 and 3, and for point 1, given the computation time my Dijkstra took, I wasn’t sure I could get enough depth to have meaningful cuts.

I tried to study the top players’ games. But it was nearly impossible to understand why they did a specific action (the moves computed by simulations are not always deterministic and even if they are it’s not always easy to understand that a move is good because it will be interesting 3 turns later). This is when the legend league opened and the Broken Boss saved me. His moves were quite easy to predict (or at least they gave me good information on what type of gameplay I should use):

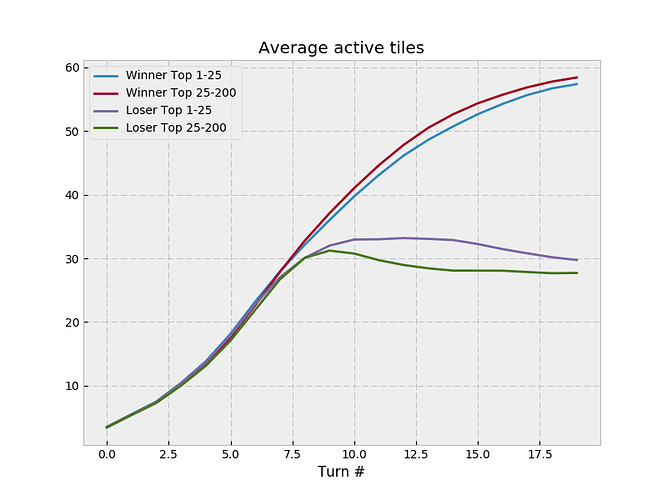

_move/train: always go to the tile that is nearest to opponent hq, in case of multiple tiles at equal distance, alternate priority to right and down (or left and up when we are blue). This would guarantee a fast expansion to the center and spread your territory at the same time.

_tower building: only build towers to protect border cells that are in danger. A cell is in danger if it’s not protected and either it’s empty or an adjacent opponent unit can freely move onto it. If opponent gold+income>=30, all cells on the border are in danger.
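My reading of the boss’s tower rule can be sketched as a small predicate. The `BorderCell` structure and all names are hypothetical, the capture rule (a unit beats strictly lower levels, level 3 beats everything) is the game rule as I understand it, and keeping the “not protected” condition even when the opponent can afford a level 3 is my own interpretation.

```python
from dataclasses import dataclass, field


@dataclass
class BorderCell:
    """One of my cells touching the opponent (hypothetical structure)."""
    protected: bool                      # covered by one of my towers
    my_unit_level: int = 0               # 0 means no unit of mine on the cell
    adjacent_enemy_levels: list = field(default_factory=list)


def can_capture(attacker_level, defender_level):
    # Level 3 beats everything, otherwise the attacker must be strictly stronger.
    return attacker_level == 3 or attacker_level > defender_level


def in_danger(cell, opp_gold, opp_income):
    """Should a tower be considered to protect this border cell?"""
    if cell.protected:
        return False
    if opp_gold + opp_income >= 30:
        return True  # the opponent can afford a level 3 unit
    if cell.my_unit_level == 0:
        return True  # empty cell, anything can walk onto it
    return any(can_capture(level, cell.my_unit_level)
               for level in cell.adjacent_enemy_levels)
```

Towers are then only built for cells where `in_danger` is true, and the rest of the gold goes into expansion.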

With this I thought that maybe if I managed to cut and also strengthen my weaknesses with the boss strategy, I could climb a few ranks.

I started with the move/train expansion improvement, which was relatively easy to implement. That alone gained me 20-30 ranks.

Then I moved on to the cuts, which gave me a hard time. I don’t know any algorithm to efficiently cut graphs. So what I did was brute-force all possible paths starting from enemy cells adjacent to my territory, with a depth of 4 (this was pruning a lot of interesting moves starting from neutral cells but it is all I could afford without timing out). Then I would compute the enemy score and my score (based on constants, number of tiles owned, number of units, income), how much the cut cost me and the upkeep needed for the chain. With a mix of all this I tried to determine if it was worth cutting. The advantage of computing a score based on the territory of both the opponent and me is that the cut algorithm also naturally handles reconnection to big blocks of inactive cells. I tried to adjust the constants a bit. But due to the weird mixing of information (income, gold, number of units, unit levels), my cuts are still sometimes over-aggressive or very defensive (particularly afraid of using a lvl3 or cutting an opponent lvl3 since it’s a 20 gold income shift). But it still made me rise into the top 5 of the gold league.
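The enumeration step (without any scoring) could look like the sketch below. The names and the set-of-coordinates representation are assumptions for the example, and the scoring of each candidate path, which is the hard part, is left out entirely.

```python
def enumerate_cut_paths(enemy_cells, my_cells, max_depth=4):
    """Every simple path of 1..max_depth enemy cells starting next to my territory.

    `enemy_cells` and `my_cells` are sets of (x, y) tuples (hypothetical
    representation). Paths only go through enemy cells, as in the post.
    """
    def neighbours(cell):
        x, y = cell
        return [(x + 1, y), (x, y + 1), (x - 1, y), (x, y - 1)]

    # Enemy cells that touch at least one of my cells.
    starts = sorted(c for c in enemy_cells
                    if any(n in my_cells for n in neighbours(c)))
    paths = []

    def extend(path):
        paths.append(tuple(path))  # every prefix is itself a candidate cut
        if len(path) == max_depth:
            return
        for n in neighbours(path[-1]):
            if n in enemy_cells and n not in path:
                path.append(n)
                extend(path)
                path.pop()

    for start in starts:
        extend([start])
    return paths
```

Each returned path is one candidate chain of trained units; the number of paths grows quickly with `max_depth`, which is why depth 4 was my limit in Python.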

My last change was turret management. So far I was filling the map with towers once I reached ennemy territory. I changed it into the Broken Boss strategy. It helped me a lot during the expansion phase and was a game changer. I was now only building necessary towers to protect the front line and using the rest of the money to expand my territory in early game. That and a few bug fixes made me pass to legend!

Many thanks to Azkellas and Tehelka, it was really an entertaining contest! I found it particularly pleasant that even without using heavy computations we could make a relevant bot. I will try to learn C++ for the next contest though, as it’s a little bit frustrating to hold back on some possibilities because the language is too slow (and I’d like to check if my code is slow because of the language or the one who uses it :p). It would be quite interesting to know how the other Python programmers handled the cuts and path computation/scoring. For me it was definitely the bottleneck of my computation time. It would also be nice to have a story of the game’s conception, how it evolved and how Azkellas and Tehelka came up with such a strong Broken Boss!

so I’m going to copy MSmits’ format here

I’m pretty new to Rust, and fell prey to excessive cloning, which is well described by someone else here: