Finished 5th.

About the contest

I really enjoyed the contest. The rules are simple, yet the game has enough depth to keep one busy for a weekend. But not much longer, so the duration was well chosen too. Thanks to Magus and Agade for creating it.

I appreciate the rerun with validators different from the ones used during the contest, as it prevents hardcoding, reverse-engineering the validators, testing different random seeds or just spamming submits. The only downside is that the final ranking becomes somewhat random with only 30 testcases, half of which can and will be solved optimally by most top players. More testcases, or even running the same tests multiple times, would help here.

Approach

I prefill the board to prevent myself from falling off the edge. Other than that I keep the map unchanged.

Then I mutate random fields: I add, remove and redirect arrows for as long as the time limit allows. Each mutation changes only 1 to 3 arrows, and I keep the change if the result is at least as good as my previous best (or even slightly worse, so as not to get stuck in a local optimum too easily).
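The accept-if-not-much-worse loop described above can be sketched roughly as follows. This is only an illustration under assumptions, not my contest code: `optimize`, `mutate`, `score` and the `tolerance` parameter are hypothetical names, and `mutate` is assumed to return a modified copy rather than changing its argument.

```python
import random
import time

def optimize(initial, mutate, score, time_limit=0.2, tolerance=1, rng=None):
    """Mutate until time_limit seconds have passed; return (best, best_score).

    mutate(state, rng) must return a NEW state (assumption of this sketch).
    """
    rng = rng or random.Random()
    current = initial
    best, best_score = initial, score(initial)
    deadline = time.perf_counter() + time_limit
    while time.perf_counter() < deadline:
        candidate = mutate(current, rng)
        candidate_score = score(candidate)
        # Keep the change if it is as good as the previous best,
        # or at most `tolerance` points worse.
        if candidate_score >= best_score - tolerance:
            current = candidate
            if candidate_score > best_score:
                best, best_score = candidate, candidate_score
    return best, best_score
```

With `tolerance=0` this degenerates into plain hill climbing that also accepts equal scores; a small positive tolerance lets the search wander a little around the best known solution.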

I restart the search 4 times within the 1s time limit, each time starting from my initial fill again. For the first third of each run I only use one robot to find a long path, as that is usually good for the others too (and faster to evaluate).

The scoring is quite simple: just take the score that the game would give you.
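For illustration, a scoring function of this kind could look like the sketch below. The rules it encodes are assumptions that are not spelled out in this post: the map wraps around, a robot scores one point per move, it adopts the direction of any arrow it stands on (including on its start cell), and it stops on a void cell or when it repeats a (position, direction) state. The cell symbols (`#` void, `.` empty, `U`/`R`/`D`/`L` arrows) and the name `simulate` are hypothetical as well.

```python
DIRS = {"U": (0, -1), "R": (1, 0), "D": (0, 1), "L": (-1, 0)}

def simulate(grid, robots):
    """grid: list of equal-length strings; robots: list of (x, y, direction).

    Returns the total number of moves made by all robots (assumed scoring).
    """
    height, width = len(grid), len(grid[0])
    total = 0
    for x, y, d in robots:
        if grid[y][x] in DIRS:          # arrow on the start cell
            d = grid[y][x]
        seen = {(x, y, d)}
        while True:
            total += 1                  # one point per move
            dx, dy = DIRS[d]
            x, y = (x + dx) % width, (y + dy) % height  # wrap-around (assumed)
            cell = grid[y][x]
            if cell == "#":             # fell into the void
                break
            if cell in DIRS:            # arrow redirects the robot
                d = cell
            if (x, y, d) in seen:       # repeated state: the robot loops
                break
            seen.add((x, y, d))
    return total
```

Robots are simulated one after another here, which only works if they do not influence each other.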

My simulation is slow compared to others (about 125k on test 30, despite only using one robot for a while).

Allowed placements and heuristics

There are some cases where you can exclude placement options to reduce the search space:

For long corridors there’s no point in adding arrows. Either they direct you the way you are already going, or they make things worse (pushing you off the edge, shortening a loop).


Arrows pointing at each other aren’t an option either, as that will result in an instant loop and end the run. The same goes for pointing at a void cell.

During the simulation I also allow my first bot in the movement order to rearrange arrows, if that avoids doing a 180-degree turn (as these always result in a loop).
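A minimal sketch of this filter, under assumptions: the grid is a list of strings with `#` for void, `.` for empty and `U`/`R`/`D`/`L` for arrows, the map wraps around, and `allowed_arrows` is a hypothetical helper name. The corridor rule is not covered here.

```python
DIRS = {"U": (0, -1), "R": (1, 0), "D": (0, 1), "L": (-1, 0)}
OPPOSITE = {"U": "D", "D": "U", "L": "R", "R": "L"}

def allowed_arrows(grid, x, y):
    """Return the arrow directions worth trying on cell (x, y)."""
    height, width = len(grid), len(grid[0])
    allowed = []
    for d, (dx, dy) in DIRS.items():
        nx, ny = (x + dx) % width, (y + dy) % height  # wrap-around (assumed)
        target = grid[ny][nx]
        if target == "#":            # arrow would point at a void cell
            continue
        if target == OPPOSITE[d]:    # two arrows pointing at each other
            continue
        allowed.append(d)
    return allowed
```

For example, on the grid `["#.L", "...", "..."]` the cell (1, 0) keeps only `U` and `D`: `L` points at the void and `R` points at an arrow facing back.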

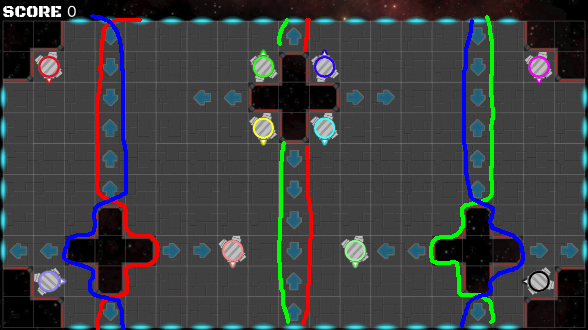

When you watch a replay with high points (sorry, I don’t have a better one), you will notice that most arrows are placed at the border, while the center is mostly empty (these cells can be traversed twice and give two points).

So we can take that into account when changing an arrow: if the cell we want to modify has an arrow on it and there is no preset arrow or void cell next to it, the most likely action in the mutation is to remove the arrow (I do that in 75% of the cases).

Otherwise take a random allowed arrow (without the excluded directions from above). If it’s the same as before, just remove the arrow.
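The per-cell decision could be sketched like this. The function and parameter names are hypothetical; `allowed` stands for the list of directions left after the exclusions above, and returning an empty cell when that list is empty is an assumption of the sketch.

```python
import random

def mutate_cell(current, allowed, near_preset_or_void, rng, remove_prob=0.75):
    """Return the new content of one cell: '.' (no arrow) or an arrow letter."""
    has_arrow = current != "."
    # Arrow in open space: removing it is the most likely action.
    if has_arrow and not near_preset_or_void and rng.random() < remove_prob:
        return "."
    if not allowed:                  # nothing sensible to place (assumption)
        return "."
    choice = rng.choice(allowed)
    # Picking the arrow that is already there means: remove it instead.
    return "." if choice == current else choice
```

A call such as `mutate_cell("U", ["U", "R"], False, random.Random())` returns `"."` most of the time and `"R"` otherwise.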

Components

Some maps are split into several components:

To detect them, start a breadth-first (or depth-first) search from each robot and keep track of the reachable cells (preset arrows only have one neighbor cell of course).

If two robots share any empty cells, they are in the same component (even if they can’t reach each other’s starting position), otherwise they are not.

The components may overlap, as shown above, but only on cells with preset arrows.
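The detection can be sketched as a breadth-first search per robot followed by merging robots whose reachable empty cells intersect. As before, the grid encoding (`#` void, `.` empty, `U`/`R`/`D`/`L` preset arrows), the wrap-around and the function names are assumptions made for this example.

```python
from collections import deque

DIRS = {"U": (0, -1), "R": (1, 0), "D": (0, 1), "L": (-1, 0)}

def reachable_empty(grid, start):
    """Empty cells a robot starting at `start` could ever reach."""
    height, width = len(grid), len(grid[0])
    seen, queue = {start}, deque([start])
    while queue:
        x, y = queue.popleft()
        cell = grid[y][x]
        # A preset arrow has one neighbor; an empty cell may lead anywhere.
        steps = [DIRS[cell]] if cell in DIRS else list(DIRS.values())
        for dx, dy in steps:
            nxt = ((x + dx) % width, (y + dy) % height)  # wrap-around (assumed)
            if grid[nxt[1]][nxt[0]] != "#" and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return {(x, y) for x, y in seen if grid[y][x] == "."}

def group_robots(grid, starts):
    """Group robot indices that share at least one reachable empty cell."""
    areas = [reachable_empty(grid, s) for s in starts]
    parent = list(range(len(starts)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path compression
            i = parent[i]
        return i

    for i in range(len(starts)):
        for j in range(i + 1, len(starts)):
            if areas[i] & areas[j]:
                parent[find(i)] = find(j)
    groups = {}
    for i in range(len(starts)):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())
```

Each returned group can then be handed to the optimizer on its own, together with the union of its robots' reachable cells.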

Different components can be optimized independently of each other. That will give a higher score, as you don’t try to modify two components at once and drop the score with a bad change in one of them, even if you improved on the other.