First, sorry for the late PM: I stayed up coding and packing the whole last night of the contest, before flying to California the next day.

The first night I wrote a simple heuristic in Java to get to Bronze. It actually got around #10 the first night, which felt nice. However, I knew this was very preliminary and symbolic, and I was sure I would go for a search algorithm.

I first went for a Monte Carlo simulation, for simplicity while implementing the engine, planning to switch to MCTS later. The alternative heuristics (“if forest”) approach was especially easy and strong in this contest, so it was not easy to get a strong search bot early on, as many people who tried know; in particular, it took time to craft a good eval function. But I had something decent after a few days, and I was relatively confident that in the end it would be easier to keep improving, while the if forests would become increasingly hard to maintain. There was still some doubt about which approach would be best though, and I think this is something great about this contest.

The next step was to switch to MCTS. I got something working, but it was not so clearly better, as in some cases it would make worse decisions. I realized that the strength of MCTS, going deeper in promising branches, made reasoning about it harder, because it would find a deep good solution (deliver a finished plate) and not find a shorter action plan (like making a very much needed croissant) that would be better in the long term. It might be possible to fix this by carefully tuning the exploration parameter and the evaluation function, but this seemed just too complicated. So I decided to change the expansion criterion to simply the number of visits, to always explore in breadth instead of depth. Essentially this became a BFS search in the state space, implemented in a weird way :). This worked better, and importantly was easier to debug, because I knew all shorter solutions were considered before longer ones.
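To illustrate why selecting by visit count alone turns the tree search into a BFS, here is a minimal sketch (not my actual bot code). It assumes a toy tree with a fixed branching factor and leaves out evaluation and backpropagation entirely; `VisitCountSearch`, `BRANCHING`, `iterate` and `expansionDepths` are names made up for this example.

```java
final class VisitCountSearch {
    // Assumption for the sketch: every state has the same number of actions.
    static final int BRANCHING = 3;

    static final class Node {
        final int depth;
        int visits;
        final java.util.List<Node> children = new java.util.ArrayList<>();
        Node(int depth) { this.depth = depth; }
    }

    /**
     * One search iteration: at each level descend into the least-visited
     * child (ties go to the first one), then expand the leaf reached.
     * Returns the depth of the node that was expanded.
     */
    static int iterate(Node root) {
        Node node = root;
        node.visits++;
        while (!node.children.isEmpty()) {
            Node best = node.children.get(0);
            for (Node child : node.children) {
                if (child.visits < best.visits) best = child;
            }
            node = best;
            node.visits++;
        }
        for (int i = 0; i < BRANCHING; i++) {
            node.children.add(new Node(node.depth + 1));
        }
        return node.depth;
    }

    /** Runs the given number of iterations and records each expanded depth. */
    static int[] expansionDepths(int iterations) {
        Node root = new Node(0);
        int[] depths = new int[iterations];
        for (int i = 0; i < iterations; i++) depths[i] = iterate(root);
        return depths;
    }

    public static void main(String[] args) {
        System.out.println(java.util.Arrays.toString(expansionDepths(14)));
    }
}
```

With a uniform branching factor, the visits stay balanced between siblings, so every node at one depth gets expanded before any node at the next depth. In a real bot the branching varies and states get pruned or merged, so the order is only roughly breadth-first.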

Regarding the partner, for a long time I just ignored them (though by nature I would happily prepare ingredients that they could use, as I did not focus on a single customer). For a long time I did not even consider that they could block my path, being optimistic that they would just move away in time. That seemed to perform better initially, but eventually I switched to considering that they are static for a few turns, then disappear (since their position is too uncertain after some time). In the last weekend, I added a hard-coded behaviour where they take things out of the oven if they are next to it and they were the one who put the ingredient in, which gave a nice improvement.
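The "static for a few turns, then disappear" rule can be sketched as a simple check in the move generator. This is only an illustration: `PartnerModel`, `isBlocked` and the value of `PARTNER_STATIC_TURNS` are hypothetical, and the exact number of turns is not something I am stating here.

```java
final class PartnerModel {
    // Hypothetical value: the partner is treated as an obstacle only this deep in the search.
    static final int PARTNER_STATIC_TURNS = 3;

    /**
     * Returns true if cell (x, y) should be treated as blocked by the partner
     * at the given search depth (0 = the current turn). Beyond the first few
     * turns the partner's position is too uncertain, so they are ignored.
     */
    static boolean isBlocked(int x, int y, int depth, int partnerX, int partnerY) {
        return depth < PARTNER_STATIC_TURNS && x == partnerX && y == partnerY;
    }

    public static void main(String[] args) {
        System.out.println(isBlocked(2, 1, 0, 2, 1)); // partner's cell, current turn
        System.out.println(isBlocked(2, 1, 5, 2, 1)); // same cell, deep in the search
    }
}
```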

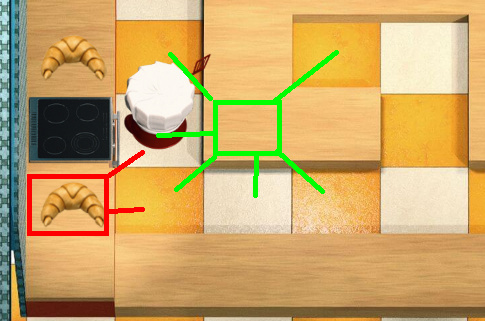

One small feature I like was to give a small positive value to spreading dough and strawberries at useful locations. That meant for instance that while waiting for my tart to cook here, I picked up this dough and placed it where it can later be used in the oven or on the chopping board:

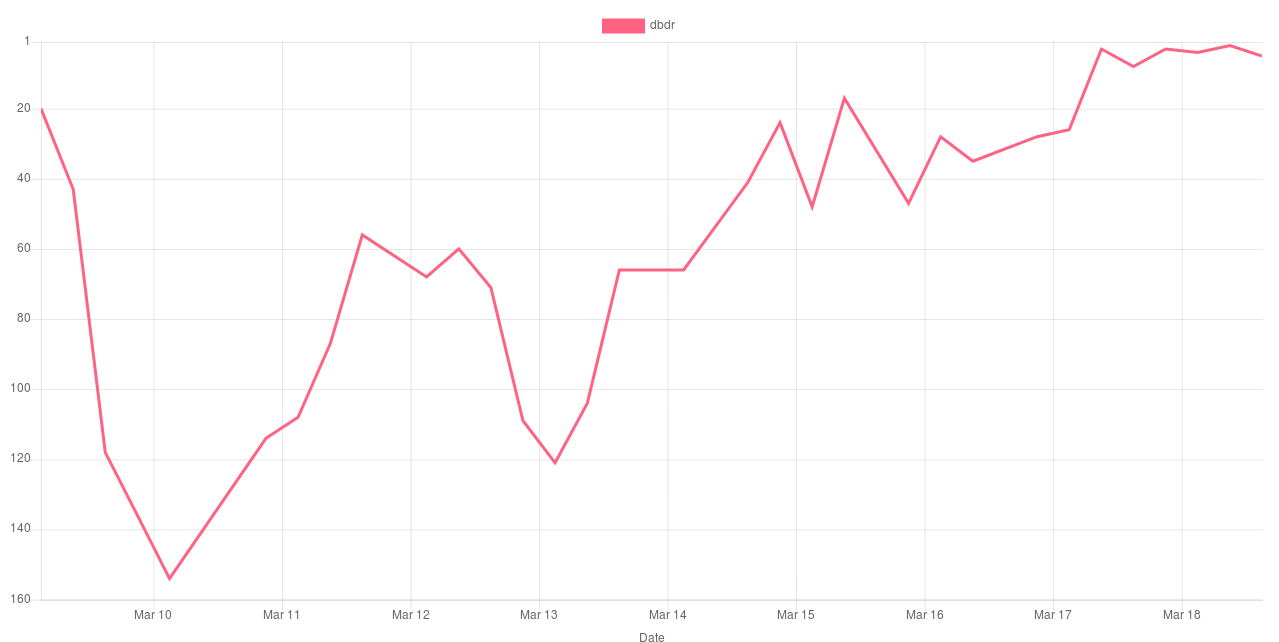

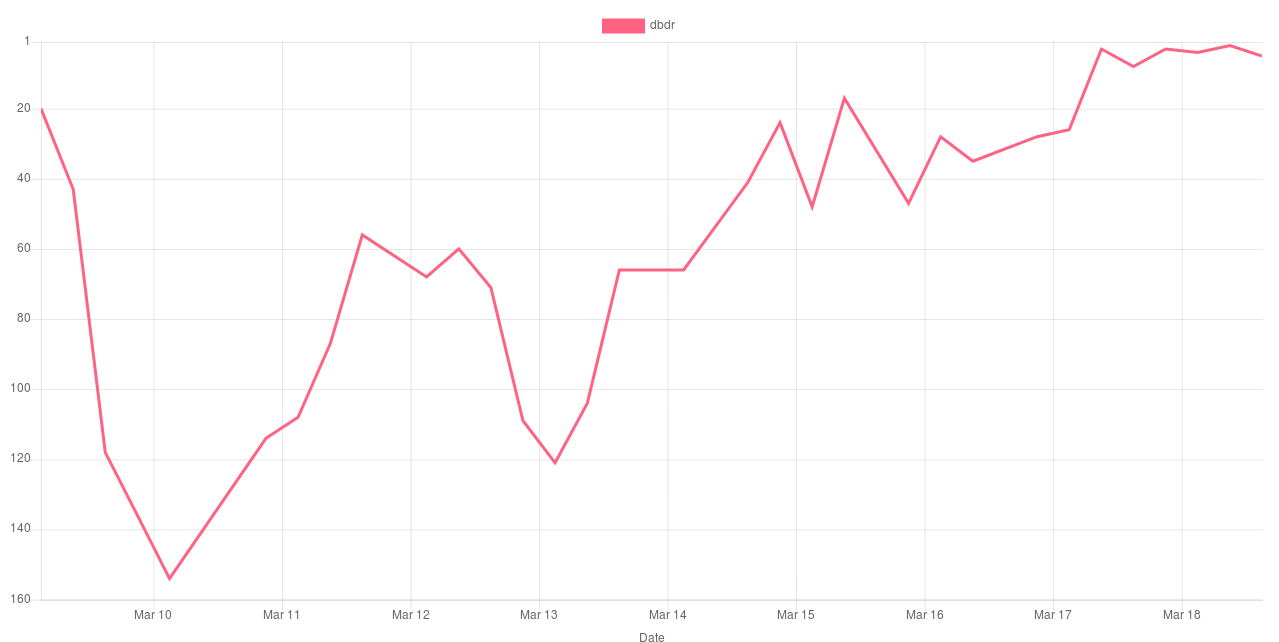

All in all, I think that the search approach indeed allowed me to keep trying new ideas until the end with manageable complexity, and that it paid off, even though it was obviously harder in the beginning. My rank graph from @Adrien’s awesome tool illustrates that:

When Legend opened, I was very close but did not make it, so I had to spend Friday evening making sure I got promoted, which corresponds to the zigzag on March 15th :D. My final rank was #5, which is my best result so far!

Thanks a lot to @csj and @Matteh for a fun and well designed coop game, to the testers, and to all the chat regulars for an awesome community during and between the contests!

#TeamFire