Thank you [LOCM]aCat, radekmie and CodinGame for these two contests.

RUSH

I loved this format.

[CG]Thibaud already explained the issues on the blog, so I am sure we will have even more fun in the future.

We had the referee, which was helpful if you are familiar with the CodinGame SDK, since you could run local simulations even when the platform was down. I lost some time because pom.xml was missing; I am not sure if this was intentional.

During the draft I would PICK the first creature available, or PASS.

I did a Monte Carlo playing random moves (SUMMON, ATTACK) for myself only. I only handled GUARD and CHARGE.

My evaluation function was:

- INFINITY if opponent is dead

- sum of my minions' attack

- sum of opponent minions' attack

- opponent health

This code finished the RUSH in 8th position.

Everything below is related to the marathon.

REFEREE

Starting a new contest, I like to convert the Java referee to C++, including the initReferee() code.

Then I write a dummy bot that does random moves with a fixed seed and hashes the inputs.

Having done this, you can compare the results of a game in the CodinGame IDE and in the local simulation with the same referee seed to be sure you have a complete and bug free simulation.

I do these tests every time I modify my bot, so I can be sure any modifications or optimizations I made did not break the simulation engine.
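A minimal sketch of what such a dummy bot could look like, assuming the inputs are hashed line by line with FNV-1a and the random choice comes from a fixed-seed `std::mt19937`. The names `InputHasher` and `DummyBot` are hypothetical and not taken from the original code.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <random>
#include <string>

// FNV-1a 64-bit hash folded over every input line the bot receives.
// Two runs that feed the bot identical inputs end with identical hashes.
struct InputHasher {
    std::uint64_t value = 14695981039346656037ULL;  // FNV offset basis

    void addByte(unsigned char c) {
        value ^= c;
        value *= 1099511628211ULL;  // FNV prime
    }

    void addLine(const std::string& line) {
        for (unsigned char c : line) addByte(c);
        addByte('\n');  // line separator, so "ab","c" differs from "a","bc"
    }
};

// Picks a legal action index from a seeded generator (hypothetical helper).
// The raw engine output is used directly because std::mt19937 produces the
// same sequence on every platform for a given seed.
struct DummyBot {
    std::mt19937 rng;

    explicit DummyBot(std::uint32_t seed) : rng(seed) {}

    std::size_t pickAction(std::size_t legalActionCount) {
        assert(legalActionCount > 0);
        return rng() % legalActionCount;
    }
};
```

If the hash printed at the end of a game in the IDE matches the one from the local run with the same referee seed, both simulations fed the bot exactly the same inputs.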

I keep everything in one big file that compiles as a referee, a dummy bot, or my arena bot, depending on a bunch of #ifdefs.

SEARCH ALGORITHM

The referee included the computeLegalActions() function that was very helpful.

I did a Monte Carlo at depth 2:

- play random moves for myself until I PASS

- play several random responses for the opponent using the same approach

- keep the worst score after my opponent's response

- stop as soon as it is worse than my best move so far (pruning)
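The four steps above can be sketched roughly as follows. This is an illustration under assumptions, not the original bot: `Engine`, `SearchResult` and `searchDepth2` are hypothetical names, and the simulation engine is abstracted behind two callbacks.

```cpp
#include <cassert>
#include <functional>
#include <limits>
#include <random>

// Hypothetical hooks into the simulation engine; State is whatever the bot uses.
template <typename State>
struct Engine {
    // Plays random legal actions for `player` until it passes; returns the new state.
    std::function<State(const State&, int player, std::mt19937&)> randomTurn;
    // Scores a state from player 0's point of view (higher is better for player 0).
    std::function<double(const State&)> evaluate;
};

template <typename State>
struct SearchResult {
    State afterMyTurn;  // state reached by the best sequence found for player 0
    double score;       // worst evaluation seen among the sampled opponent replies
};

template <typename State>
SearchResult<State> searchDepth2(const State& root, const Engine<State>& engine,
                                 int myRollouts, int oppRollouts, std::mt19937& rng) {
    assert(myRollouts > 0 && oppRollouts > 0);
    const double inf = std::numeric_limits<double>::infinity();
    SearchResult<State> best{root, -inf};
    for (int i = 0; i < myRollouts; ++i) {
        // Depth 1: one random sequence of my own moves until PASS.
        State mine = engine.randomTurn(root, 0, rng);
        double worst = inf;
        for (int j = 0; j < oppRollouts; ++j) {
            // Depth 2: one random opponent reply; keep the worst outcome for me.
            State reply = engine.randomTurn(mine, 1, rng);
            double s = engine.evaluate(reply);
            if (s < worst) worst = s;
            // Pruning: this candidate can no longer beat the best one so far.
            if (worst < best.score) break;
        }
        if (worst > best.score) best = SearchResult<State>{mine, worst};
    }
    return best;
}
```

A real bot would store the list of actions instead of the resulting state and loop until the time budget runs out rather than for a fixed number of rollouts.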

EVAL

+/- INFINITY if a player is dead

+/- sum of player creatures scores

+/- CARDS_DRAWN_MALUS * cards drawn

+/- player health

CARDS_DRAWN_MALUS was to avoid attacking the player if it breaks a rune and allows him to draw an extra card.

My final push had a quite high value meaning I would not attack the player if I break a rune unless I deal a lot of damage.
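As a rough sketch, the evaluation can be read as "my side minus the opponent's side". The struct `PlayerSummary`, the helper `sideValue` and the value 5.0 are assumptions made for illustration; the actual constant used in the final bot is not given here.

```cpp
#include <cassert>
#include <limits>

// Hypothetical per-player summary extracted from the game state.
struct PlayerSummary {
    int health;
    int cardsDrawn;        // cards this player gets to draw, rune bonuses included
    double creatureScore;  // sum of the scores of this player's creatures on board
};

constexpr double CARDS_DRAWN_MALUS = 5.0;  // placeholder value, not the tuned one

double sideValue(const PlayerSummary& p) {
    assert(p.cardsDrawn >= 0);
    return p.creatureScore + CARDS_DRAWN_MALUS * p.cardsDrawn + p.health;
}

// Positive terms for me, negative terms for the opponent.
double evaluate(const PlayerSummary& me, const PlayerSummary& opp) {
    const double inf = std::numeric_limits<double>::infinity();
    if (opp.health <= 0) return inf;
    if (me.health <= 0) return -inf;
    return sideValue(me) - sideValue(opp);
}
```

With this placeholder value, dealing 2 damage while giving the opponent one extra card lowers the evaluation by 3, so the search only breaks a rune when the hit removes more than 5 health.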

For the creatures on board scoring, I used a multiplicative evaluation:

BASE_CREATURE_VALUE + CONSTANT * (keywords.hasLethal + pow(attack, A)) * (keywords.hasWard + pow(defense, B));

Where A < 1 and B < 1.

BASE_CREATURE_VALUE was to give a minimum score to a creature, even if it had no attack or poor value since it could be upgraded.

My idea with the multiplicative evaluation was to favor cards with balanced attack / defense. I would prefer a 3/3 creature over a 5/1 or 1/5 creature.

If it has lethal then defense is more important since you might kill several creatures with it.

If it has ward then attack is more important since you basically have a free attack.

All the other properties were ignored because I could not find any good valuation for them.
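Written out in C++, the creature formula looks like this. The constant values below are placeholders chosen only to satisfy A < 1 and B < 1; the tuned values are not given in this post, and the `Creature` struct is a simplified stand-in.

```cpp
#include <cassert>
#include <cmath>

// Simplified creature: only the properties the formula uses.
struct Creature {
    int attack;
    int defense;
    bool hasLethal;
    bool hasWard;
};

// Placeholder values for illustration only.
constexpr double BASE_CREATURE_VALUE = 1.0;
constexpr double CONSTANT = 2.0;
constexpr double A = 0.5;  // attack exponent, must be < 1
constexpr double B = 0.5;  // defense exponent, must be < 1

double creatureScore(const Creature& c) {
    assert(c.attack >= 0 && c.defense > 0);
    // Lethal is added to the attack factor, so its bonus scales with defense.
    double attackFactor = (c.hasLethal ? 1.0 : 0.0) + std::pow(c.attack, A);
    // Ward is added to the defense factor, so its bonus scales with attack.
    double defenseFactor = (c.hasWard ? 1.0 : 0.0) + std::pow(c.defense, B);
    return BASE_CREATURE_VALUE + CONSTANT * attackFactor * defenseFactor;
}
```

With these placeholders a 3/3 scores 1 + 2 * 3 = 7, while a 5/1 or a 1/5 scores about 5.47, which is the balanced-stats preference described above. A creature with 0 attack and no lethal falls back to BASE_CREATURE_VALUE.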

DRAFT

Since I could not find anything good (see below) I ended up looking for my opponents' preferences in the arena.

Each card had a static score, with a 2 slot mana curve (small cards vs big cards).

After each PICK, the scoring was adjusted to avoid having only small or only big cards.
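One possible way to implement such an adjustment is sketched below. The cost threshold, the target ratio, the weight and every name in the block are assumptions; the post does not say how the actual adjustment was computed.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

struct Card {
    int id;
    int cost;
    double staticScore;  // hand-tuned score per card
};

struct DraftState {
    int smallPicked = 0;
    int bigPicked = 0;
};

// Hypothetical tuning values.
constexpr int BIG_COST_THRESHOLD = 5;       // cost >= 5 counts as a big card
constexpr double TARGET_SMALL_RATIO = 0.7;  // desired share of small cards
constexpr double CURVE_WEIGHT = 10.0;       // strength of the correction

bool isBig(const Card& c) { return c.cost >= BIG_COST_THRESHOLD; }

// Static score, shifted toward whichever slot is currently under-represented.
double adjustedScore(const Card& c, const DraftState& d) {
    assert(d.smallPicked >= 0 && d.bigPicked >= 0);
    int total = d.smallPicked + d.bigPicked;
    if (total == 0) return c.staticScore;
    double excessSmall = static_cast<double>(d.smallPicked) / total - TARGET_SMALL_RATIO;
    return c.staticScore + (isBig(c) ? CURVE_WEIGHT : -CURVE_WEIGHT) * excessSmall;
}

// Returns the index (0..2) of the card to PICK and updates the slot counters.
std::size_t pickCard(const std::array<Card, 3>& choices, DraftState& d) {
    std::size_t best = 0;
    for (std::size_t i = 1; i < choices.size(); ++i) {
        if (adjustedScore(choices[i], d) > adjustedScore(choices[best], d)) best = i;
    }
    if (isBig(choices[best])) ++d.bigPicked; else ++d.smallPicked;
    return best;
}
```

After ten small picks in a row, a big card with a slightly lower static score wins the comparison, which keeps the deck from ending up with only one kind of card.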

LOCAL SIMULATIONS

In order to adjust the parameters for my bot, and since I am not patient enough to wait for the IDE or the arena to complete, I like to rely on local simulations.

This can lead to inbreeding in some contests (where your bot is very good at beating itself, but poor in the arena), but in my personal experience it performed pretty well in this contest.

Since the game was pretty unbalanced, you had to run a lot of simulations to have a decent result (I did 1000 games as player 1 and 1000 games as player 2 against my best bot so far).

Basically it is cg-brutaltester but using GNU Parallel and my local referee.
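To give an idea of why so many games are needed, here is a small helper that turns a win count into a win rate with an approximate 95% margin, using the usual normal approximation for a proportion. It is a generic statistics sketch, not part of the original tooling; it ignores draws, and the names are made up.

```cpp
#include <cassert>
#include <cmath>

struct WinRate {
    double rate;      // observed fraction of games won
    double margin95;  // approximate 95% margin of error (normal approximation)
};

WinRate winRate(int wins, int games) {
    assert(games > 0 && wins >= 0 && wins <= games);
    double p = static_cast<double>(wins) / games;
    double standardError = std::sqrt(p * (1.0 - p) / games);
    return WinRate{p, 1.96 * standardError};
}
```

For two bots of equal strength, 2000 games give a margin of roughly 2.2 points around 50%, while 200 games give roughly 6.9 points, so small improvements are easily hidden by noise in short runs.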

JUST FOR FUN

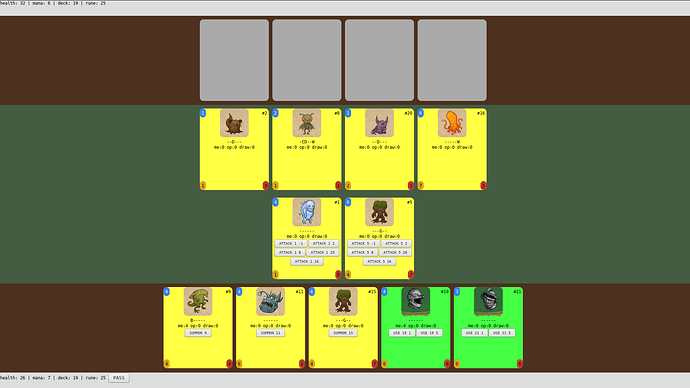

During week 2 or week 3, I did a small web interface to play against my bot.

It did not help but it was fun (unless you are player 2).

Basically it was a modified bot that responded to AJAX requests to update the display or get the human’s inputs.

WHAT DID NOT WORK FOR ME

- Mana Curve: in Hearthstone they always talk about this so I tried to have a balanced deck with cards for each turn. In the end I had a 2 slot mana curve, which felt dumb but worked better for me.

- Before the Monte Carlo, I started with an iterative beam search depth 2 (doing plays for me and the opponent considering the N most promising moves). This performed worse than Monte Carlo, probably because I did not do the alpha/beta pruning correctly.

- Most played draft: doing local simulations where each bot picks random cards, and keeping the most played cards. This ended up having mostly small cards because of early wins.

- Genetic draft: start with a pool of random scores for the cards, and after several simulations use a genetic algorithm to refine the scores. This was slow and the resulting deck was bad…

- Hand value: try to keep items in hand to avoid using them poorly. Unfortunately, my final bot would accept using “Decimate” (-99) on a 1/1 creature since it would end up with a better board score.

CONCLUSION

The game was pretty unbalanced and had too much randomness and hidden information as reported by other players.

However, ClosetAI was in 1st position for a long time until the end, so I guess we all had room to improve.

But I think 4 weeks was too long, I prefer the 10 days format, and it seems ClosetAI pretty much solved the game in the first week…