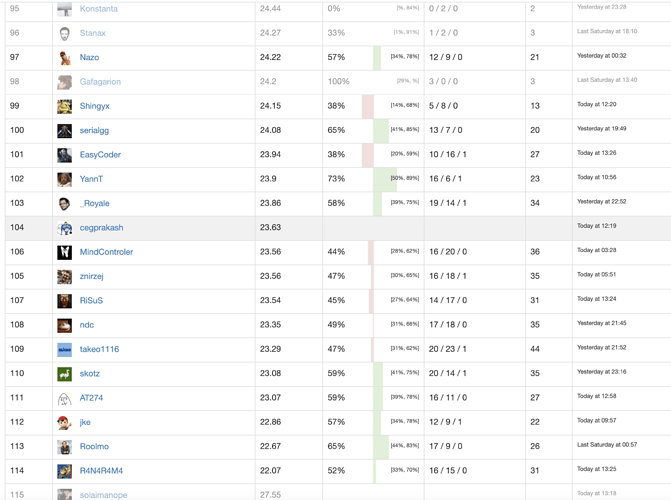

Hey @pb4, thanks for your images. I feel my rank (100+) is not justified, considering I had a 55-60% win rate against the rank 40-50 bots. Having a smaller lead in the lower bracket does not mean I shouldn't be paired with higher-ranked bots. My bot was fine-tuned to beat stronger bots, not weaker ones, and the initial calibration definitely favoured the rank 40-50 bots. If they were matched against my bot even with some small probability, we would find out that they are weaker than mine. It would also give me a chance to climb back if the calibration went badly.