Finished 3rd !

Intro

Congratulations to everybody, espacially Saelyos, Romka and the CG team for hosting these events.

I don’t know if it’s the prize pool or the marketing or both, but seeing almost 5k contenders is amazing !

Keep it up CodinGame, your work is wonderfull.

I’m obviously very pleased of the outcome, this is my second contest podium after Code a la mode but this one was not pure heuristic so I feel the competition level was higher. This is my first PM, I usually don’t feel like sharing.

Journey

Got to bronze as fast as I could with python to get a feel of the game with the full rules.

I worked on the simulation the first weekend, I realized collision handling didn’t need to be perfect. Then I wondered what kind of algorithm could be good.

Tried with pure random search depth 10 with the simpliest eval and felt like the result was ok.

Didn’t feel like aggresive bot could be effective until last weekend when I saw some amazing tricks done by other bots.

I almost instantly felt like FOW mitigation would become necessary but this was intimidating so I started to work on that only last saturday.

My bot almost focus exclusively on farming.

Each feature was tested with CGBenchmark <3

Final bot

The main search algorithm is inspired by Magus Fantastic bit genetic algorithm.

The point was that I felt this could make Pacs cooperate as Wizards did without needing to tell them so.

A Particularity is that I randomize only on valid output for each pack, so I simulate each turn before randomizing the next.

Merge and mutate were done on a single whole Pac path.

I did manage 10 to 30k full 12 depths simulation per 50 ms.

For ennemy I run same algo for 10 ms before with my pacs standing by. I only simulate ennemy pacs if I’m sure of their position (line of sight or if the FOW mitigation tells me so).

The eval is the simpliest possible (myScore-OppScore) with huge bonus/malus in case the victory threshold is passed. I sum up eval each turn for the whole solution score so pacs focus on closest pellets.

At the end of simulation I minimize for each pac the distance to the closest pellet because I saw my pacs wondering endlessly at the end of games when no more pellets were close by (none in 12 depths).

The FOW mitigation system :

Each turn I compute all paths possible starting with symetry. I then remove some based succesively on :

- Line of sight (pacs, pellets present, pellets missing…)

- Score, is this path possible according to opponent score difference between this turn and the previous one ?

- I use observed collisions and deaths to trim paths

- reduce paths length themselve if all shares common prefix marking those cells as having no more pellets.

- only keep last cell if all paths score = 0

- deduplicate

- if numbers starts to grow to high, remove paths with less size (meaning opp pacs would stand still or move only 1 cell instead of 2 on speed), remove paths with less score, meaning opp pac would be dumb…

This way I could generally go through mid game.

Then when there are fewer pellets remaining paths number explodes, so I clear it and goes blind for this particular opp pac until I see it again.

I would use paths to : - pin point an opp pac if possible to use in sim and avoid certain death.

- reduce cells value (pellets score) by % of occurrences in paths to use in sim (ex: if 8 paths out of 10 has cell (x, y), going on it only yield 0.2 score).

- try to avoid ennemy embush

I have a turn 0 brute force to exclude all actions which could lead in immediate death, this works defensively.

I also added in the last night a quick trapping system which might detect a trapped opponent situation taking advantage of the FOW mitigation, it doesn’t occures very often.

Misc

I’m very impressed by the bots which pacs cooperate to trap !

Mine is only a farming machine, I realized only at the end that killing was an option and the fact that two different approaches could both yield very good results makes me think that this contest is a success. Surely future multi top bots will be a combination of the two.

Was hopping to win the contest when I saw a 10% overall win rate improvement after implementing a new feature when I was already top 3 on the leaderboard yesterday evening !

Looking forward for the next contest, hopefully with even more contenders and nice prize pool so other top coders join us !

…I didn’t aware Golden boss have such kindness.

…I didn’t aware Golden boss have such kindness.

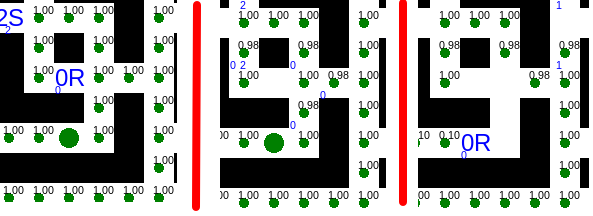

) who printed out their next move in the game as well, so I would run games between myself and these players and then see how often the coordinates I printed out matched theirs in the replay. Here is an example:

) who printed out their next move in the game as well, so I would run games between myself and these players and then see how often the coordinates I printed out matched theirs in the replay. Here is an example:  . I’m glad I persevered, this tracker was certainly the only strength of my bot

. I’m glad I persevered, this tracker was certainly the only strength of my bot