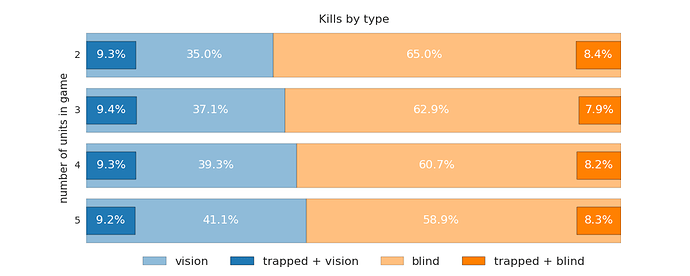

I got interested in seeing how much randomness is introduced into the games through accidental kills. So I downloaded replays from the gold and legend leagues and looked at the circumstances of each kill. When neither player had vision of the other, I counted the kill as blind, that is, accidental rather than planned. I also counted separately the kills due to entrapment, that is, those that happened in a dead-end tunnel.
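The classification above can be sketched roughly as follows. This is only an illustration, not the script used for the charts: the `Kill` record and its three boolean fields are hypothetical, and a real replay parser would have to derive them from the game state at the moment of each kill.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Kill:
    # Hypothetical fields, assumed to be extracted from a replay.
    killer_saw_victim: bool
    victim_saw_killer: bool
    in_dead_end: bool


def classify_kill(kill):
    """Label a kill as blind/visible and trap/open."""
    # Blind: neither player had vision of the other.
    blind = not (kill.killer_saw_victim or kill.victim_saw_killer)
    vision = "blind" if blind else "visible"
    # Trap: the kill happened in a dead-end tunnel.
    place = "trap" if kill.in_dead_end else "open"
    return vision, place


def kill_shares(kills):
    """Return the fraction of kills in each (vision, place) category."""
    counts = Counter(classify_kill(k) for k in kills)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {category: n / total for category, n in counts.items()}
```

Running `kill_shares` once per league and per unit count would give the kind of breakdown described in the following paragraphs.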

In the gold league, about 60% of the kills were blind, with only a very small difference in trap kills when the enemy was visible. On maps with more units, blind kills happened slightly less frequently, probably because more of the map was visible.

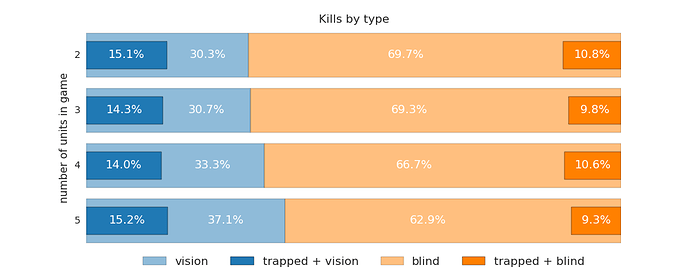

In the legend league, there were more blind kills than in gold, and the number of units on the map made an even larger difference. Also, fewer kills happened in the open, especially with vision (+50% trap kills vs. gold). This is probably due to many bots either chasing enemies or opportunistically killing units in the dead ends.

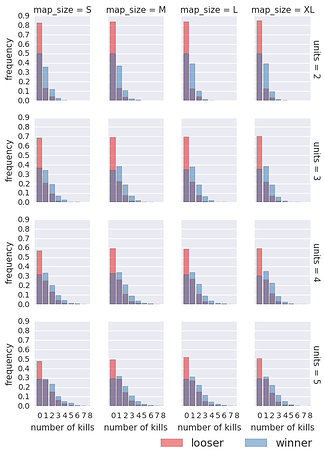

But do these kills help win games? It’s likely complicated, as it probably depends on the timing of the kills (e.g. early vs. late game). I ignored such nuances and just looked at the distribution of the total number of kills made by winners and losers. I did it separately for 4 categories of map size (based on the map area) and for each number of units.
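A minimal sketch of such a tally is shown below. It is an assumption about how the grouping could be done, not the actual analysis code: the game dictionary keys (`width`, `height`, `units`, `winner_kills`, `loser_kills`) and the area thresholds are all hypothetical.

```python
from bisect import bisect_right
from collections import Counter, defaultdict


def size_category(area, thresholds):
    """Map an area to a bin index; 3 sorted thresholds give 4 categories (0..3)."""
    return bisect_right(thresholds, area)


def kill_distributions(games, thresholds):
    """Count how often each total kill count occurs for winners and losers.

    Results are grouped by (size category, number of units, role).
    Each game is a dict with hypothetical keys: width, height, units,
    winner_kills, loser_kills.
    """
    dist = defaultdict(Counter)
    for game in games:
        category = size_category(game["width"] * game["height"], thresholds)
        for role in ("winner", "loser"):
            kills = game[role + "_kills"]
            dist[(category, game["units"], role)][kills] += 1
    return dist
```

For example, `dist[(0, 2, "loser")][0]` would then be the number of games on the smallest maps with 2 units that the loser finished without a kill.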

In the gold league (left), the map size didn’t matter much. Losers finished ~40% more games without kills, and when they did kill, they did it in smaller numbers. Especially in games with 2 units, a single kill seemed to lead to a win. With more units, the difference was smaller. For 5 units, 2 kills were needed to make a difference. Still, not killing resulted in more losses.

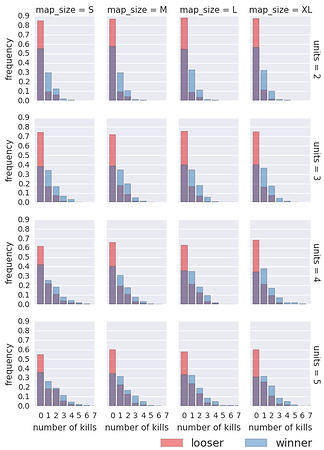

In the legend league (right), it was pretty much the same, with slightly fewer kills overall, especially on the smallest maps with more than 4 units. This is probably again due to better vision there and improved death avoidance compared to gold.