Legend 4th.

【Before the Legend League is released】

[Algorithms]

- Chokudai Search over a 6-day horizon (a variant of Beam Search)

- The opponent is assumed to always WAIT

- 1 Depth = 1 action

- To keep the current day uniform across nodes of the same depth, I limited the number of actions per day to 6; on WAIT, the search jumps to the next depth where depth mod 6 == 0.
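The depth rule above can be sketched together with a bare-bones Chokudai Search. This is only an illustration, not the actual bot: `chokudai_search`, `toy_expand`, the fixed number of sweeps (a real bot would loop until its time budget runs out) and the numeric evaluation are all assumptions made for the example.

```python
import heapq
from itertools import count

ACTIONS_PER_DAY = 6  # per-day action cap described in the post


def next_depth(depth, action):
    """Depth of the child node: WAIT jumps to the first slot of the next day."""
    if action == "WAIT":
        return (depth // ACTIONS_PER_DAY + 1) * ACTIONS_PER_DAY
    return depth + 1


def chokudai_search(root, expand, evaluate, max_depth, sweeps):
    """One priority queue per depth; each sweep pops the best node of every depth."""
    queues = [[] for _ in range(max_depth + 1)]
    tie = count()  # unique tie-breaker so states themselves are never compared
    heapq.heappush(queues[0], (-evaluate(root), next(tie), root))
    for _ in range(sweeps):  # assumption: fixed sweep count instead of a timer
        for depth in range(max_depth):
            if not queues[depth]:
                continue
            _, _, state = heapq.heappop(queues[depth])
            for action, child in expand(state):
                d = min(next_depth(depth, action), max_depth)
                heapq.heappush(queues[d], (-evaluate(child), next(tie), child))
    return queues[max_depth][0][2] if queues[max_depth] else None


def toy_expand(total):
    """Hypothetical toy game: a state is just a running total."""
    return [("GROW", total + 2), ("SEED", total + 1), ("WAIT", total)]


best = chokudai_search(0, toy_expand, lambda s: s, max_depth=12, sweeps=3)
```

Because WAIT children land directly in the queue of the next day's first slot, every node in a given queue refers to the same day, which is what makes their evaluations comparable.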

I banned planting seeds in cells where my own trees would shade each other. (When calculating shadows, I treated every tree as GrowState 3.) However, if no cell satisfies this rule, up to two trees may be planted in the shadow.
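A minimal sketch of this rule, assuming cells are given in cube coordinates (x, y, z with x + y + z = 0). Since the sun passes through all six directions and a GrowState 3 tree casts a shadow of length 3, two trees can shade each other exactly when they lie on the same straight line at distance 1 to 3. The function names and the `exceptions_used` counter are hypothetical.

```python
def can_shade(a, b, reach=3):
    """True if a size-3 tree on cell a can shade cell b on some day."""
    dx, dy, dz = a[0] - b[0], a[1] - b[1], a[2] - b[2]
    dist = max(abs(dx), abs(dy), abs(dz))
    # Same straight line in cube coordinates <=> one coordinate is unchanged.
    return 0 < dist <= reach and 0 in (dx, dy, dz)


def allowed_seed_cells(my_trees, candidates, exceptions_used, max_exceptions=2):
    """Prefer shadow-free cells; otherwise fall back to at most two exceptions."""
    clean = [c for c in candidates if not any(can_shade(t, c) for t in my_trees)]
    if clean:
        return clean
    if exceptions_used < max_exceptions:
        return list(candidates)  # the caller is expected to count the exception
    return []
```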

To reduce the number of states, for each target cell I limited the seed source to a single tree: the tallest one that can reach it.
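This pruning could look roughly like the following. The `trees` and `reachable` inputs are hypothetical data structures (cell index to tree size, and cell index to the cells that tree can seed), not the real bot's representation.

```python
def seed_actions(trees, reachable):
    """Keep one SEED action per target cell, sourced from the tallest tree."""
    best = {}  # target cell -> chosen source cell
    for src, size in trees.items():
        for tgt in reachable[src]:
            if tgt not in best or size > trees[best[tgt]]:
                best[tgt] = src
    return sorted((src, tgt) for tgt, src in best.items())
```

With two trees that can both reach the same cell, only the taller one keeps its SEED action for that cell, so the branching factor is bounded by the number of empty cells rather than by trees times cells.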

[Evaluation function]

- Maximize actual score

- Then, as a tie-break, maximize the evaluation of trees (1, 2, 4, 8 for each GrowState).

With this evaluation alone, the bot COMPLETEs every tree immediately to maximize the score, which is not good. So I restricted COMPLETE to the 12th day onward and to once per day (if no COMPLETE is done on a day, the allowance is carried over to the next day).

With the above method, I was in 20th place just before the Legend League opened.
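As a sketch, the first evaluation and the COMPLETE limit can be written as below. Comparing tuples gives the "score first, trees as tie-break" ordering. The allowance counter is one possible reading of the carry-over rule, and treating day 12 itself as the first allowed day is an assumption.

```python
TREE_VALUE = {0: 1, 1: 2, 2: 4, 3: 8}  # value per GrowState, as listed above


def evaluate(score, tree_sizes):
    """Lexicographic evaluation: actual score, then summed tree values."""
    return (score, sum(TREE_VALUE[s] for s in tree_sizes))


def complete_allowance(day, completes_so_far, first_day=12):
    """Number of COMPLETE actions still allowed (one earned per day, carried over)."""
    if day < first_day:
        return 0
    return (day - first_day + 1) - completes_so_far
```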

【After the Legend League is released】

Below, to prevent confusion, I will describe my bot as Player1 and the opponent’s as Player2.

I realized that it is important to maximize the amount of SunPoints earned, not the number of trees. However, this amount depends on the opponent's moves, so the opponent's moves must be predicted. I implemented this in the following way:

- For the first 20 ms, run a Chokudai Search from Player2's perspective (Player1 always WAITs).

- Next, run the search from Player1's perspective while replaying Player2's actions.

The problem with this method is that Player2's SEED actions can become invalid. When simulating Player1, Player2 only executes predetermined actions, so Player2 may later plant a seed where Player1 has already planted one.

To solve this, I allowed two trees in one cell. Another solution would be to cancel Player2's action, but I did not adopt it because it would overvalue moves that disturb the opponent.

Also, when simulating Player1, it is not good to plan on planting seeds in a cell that became empty because Player2 COMPLETEd there in the simulation. If Player2 does not COMPLETE in the actual game, Player1 will keep waiting until Player2 does.

To avoid this, I introduced the concept of a GhostTree.

A GhostTree is a tree that is visible only to Player1. It appears when Player2 executes COMPLETE. For Player2 it does not exist and it produces no SunPoints, but for Player1 it exists as a GrowState 3 tree: it casts shadows, and no seed can be planted on its cell. As a result, I obtain a Player1 plan that does not collapse even if Player2 does not COMPLETE in the actual game.
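A minimal sketch of the GhostTree idea, assuming a board stored as a dict from cell index to tree. The `Tree` class and helper names are hypothetical, and the sketch keeps one tree per cell for brevity even though the simulation described above allows two.

```python
from dataclasses import dataclass


@dataclass
class Tree:
    owner: int           # 1 or 2
    size: int            # GrowState 0..3
    ghost: bool = False  # True once Player2 has COMPLETEd it in the simulation


def complete_for_player2(board, cell):
    """Player2's COMPLETE leaves a ghost instead of emptying the cell."""
    board[cell] = Tree(owner=2, size=3, ghost=True)


def visible_to(tree, viewer):
    """Ghosts exist only from Player1's point of view."""
    return not tree.ghost or viewer == 1


def can_seed(board, cell, viewer):
    tree = board.get(cell)
    return tree is None or not visible_to(tree, viewer)


def produces_sun(tree):
    """A ghost no longer earns SunPoints for its former owner."""
    return not tree.ghost
```

Shadow computation for Player1 would use the same `visible_to` check, so the ghost keeps shading Player1's trees as a GrowState 3 tree.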

[Evaluation function]

By releasing the opponent from WAIT, I can now use symmetric evaluation values.

- When simulating for Player1, evaluation = [Player1 evaluation value]-[Player2 evaluation value]

- When simulating for Player2, evaluation = [Player2 evaluation value] (Player1 always WAITs, so disturbing Player1 is not evaluated)

The second version of the evaluation function is as follows:

- Maximize actual score

- Then, as a tie-break, maximize the total SunPoints Income.

The total SunPoints Income is the cumulative SunPoints gained during the simulation plus the SunPoints that will be gained the next day (calculated from the current game state). SunPoints spent are not considered.
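The second evaluation might be sketched like this. Taking the Player1 minus Player2 difference component by component on a (score, income) pair is an assumption about how the subtraction combines with the tie-break; all names are hypothetical.

```python
def total_income(gained_per_day, next_day_income):
    """SunPoints gained during the simulation plus tomorrow's expected income."""
    return sum(gained_per_day) + next_day_income


def player_value(score, income):
    """Per-player value: actual score first, total income as tie-break."""
    return (score, income)


def evaluation(p1, p2, perspective):
    """Symmetric for Player1's search, one-sided for Player2's search."""
    if perspective == 1:
        return tuple(a - b for a, b in zip(p1, p2))
    return p2  # Player1 only WAITs here, so its value is ignored


p1 = player_value(20, total_income([5, 6], 7))
p2 = player_value(18, total_income([6, 7], 7))
```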

The COMPLETE limitation remains the same, only once a day after the 12th day.

With the changes so far, the bot ranked 10th just after the Legend League opened.

【Until the end of the contest】

"COMPLETE once a day after the 12th day" is not optimal, so I improved this first. I removed the limitation and changed the evaluation function as follows:

- Maximize A*[Actual Score]+B*[Average SunPoint Income]

Average SunPoint Income is simply the Total SunPoint Income divided by the number of days.
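In code, this evaluation could look like the sketch below. The coefficient arrays are flat placeholders chosen only so the example runs; they are not the tuned values, and indexing them by the day of the evaluated state is an assumption.

```python
DAYS = 24

# Placeholder coefficients, NOT the tuned per-day parameters.
A = [1.0] * DAYS
B = [0.5] * DAYS


def evaluate(score, total_income, days_simulated, day):
    """A[day] * actual score + B[day] * average SunPoint Income."""
    average_income = total_income / days_simulated
    return A[day] * score + B[day] * average_income
```

Raising A relative to B for late days makes COMPLETE attractive near the end of the game, while a large B early on keeps trees alive for their income.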

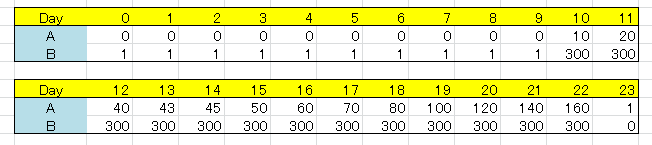

Coefficients A and B are different parameters for each day (24 days x 2 for a total of 48)

This was adjusted as shown in the table below

As a result, the behavior was as follows.

- Do not COMPLETE until day 10.

- On days 10-11, COMPLETE trees that produce very small SunPoint Income.

- On days 12 to 15, COMPLETE trees that produce small to medium SunPoint Income.

- From day 16 on, COMPLETE trees almost without considering SunPoint Income.

In addition, I made the following improvements.

- Subtract the wasted SunPoints from the SunPoint Income. "Wasted" means the extra cost of growing a tree that comes from the number of trees already owned.

- Remove duplicate game states using Zobrist Hashing. Along with this, the depth of the Chokudai Search was increased to 8 days.

- Allow Player1 to plant a seed later in a cell where Player2 has already planted during the simulation. This is because predictions of the opponent's seed coordinates are mostly wrong.

- Only on the 12th day, allow seeds to be planted in the central green cells even if they are in the shadow of my own trees. I expect that a seed planted on the 12th day can still be COMPLETEd before the end of the game.
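The duplicate-state removal mentioned in the list above can be illustrated with a small Zobrist table. This sketch hashes only the trees on the 37-cell board; a full state key would also need to mix in values such as the day, SunPoints and score. The class and method names are hypothetical.

```python
import random


class Zobrist:
    def __init__(self, cells=37, owners=2, sizes=4, seed=0):
        rng = random.Random(seed)
        # One random 64-bit key per (cell, owner, size) combination.
        self.table = [[[rng.getrandbits(64) for _ in range(sizes)]
                       for _ in range(owners)]
                      for _ in range(cells)]

    def key(self, cell, owner, size):
        return self.table[cell][owner][size]

    def hash_board(self, trees):
        """XOR of the keys of all (cell, owner, size) trees on the board."""
        h = 0
        for cell, owner, size in trees:
            h ^= self.key(cell, owner, size)
        return h

    def grow(self, h, cell, owner, size):
        """Incremental update: remove the old size key, add the new one."""
        return h ^ self.key(cell, owner, size) ^ self.key(cell, owner, size + 1)


zobrist = Zobrist()
seen = set()
h = zobrist.hash_board([(0, 0, 1), (5, 1, 3)])
is_new = h not in seen  # skip the node when the hash was already visited
seen.add(h)
```

Two action sequences that reach the same board (for example, growing two trees in either order) produce the same hash, so only one of them needs to stay in the search queue.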

After these improvements, I finally finished in 4th place.

Thank you for this great contest. I'm also looking forward to the next one.


). Not so secret, but I’d tell you there are 81 squares in UTTT and each square can hold about 30 different states (one-hot encoding). I also exploit the fact that during move only handful of squares are changed. Or maybe its totally a different encoding
