Rank: 698/1608 Gold (925/6867 Global)

Language: Java (~1700 LOC)

Strategy: MCTS (simulteneous), tree reuse, pruning, eval

Time Spent: 50~60h (much of it debugging)

Overview

I’ve competed on CodingGame before but this was the first time I really went for it. I’m happy that I implemented a simultaneous MCTS with storing the state between turns and managed to code up most of what I intended. However, due to poor performance of my sims, taking too much time to calculate possible actions and memory issues my code couldn’t really make good use of tree search.

Overall despite the struggles I had a blast, learned a lot and I’m sure to come back for future contests.

Initial Strategy

I went for simulating the game pretty early. My initial strategy was, starting with the current board state, to go through each possible action and (as many times as possible) play through the game from that action using random moves. After move time runs out I pick the action with the highest winrate. Code snippet:

List<ActionWithScore> possibleActionsWithScore = board.getPossibleActionsWithScore;

while (hasTimeRemaining()) {

for (ActionWithScore actionWithScore: actionsWithScore) {

actionWithScore.playThroughAndUpdateScore();

}

}

// Sort by win rate

Collections.sort(possibleActionsWithScore);

return possibleActionsWithScore.get(0).getAction();

After ironing out my game simulation bugs I was surprised this got me as far as ~700 gold on gold opening. At best I was running ~2k sims a turn and unfortunately this number didn’t really improve after many tweaks due to performance issues.

I was certain the initial strategy was suboptimal because of purely random move choice so I wanted to try MCTS next.

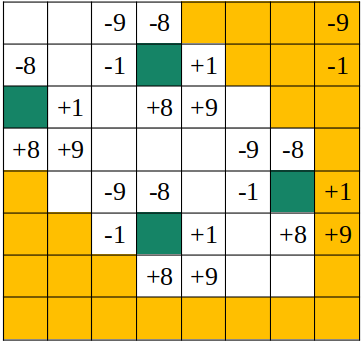

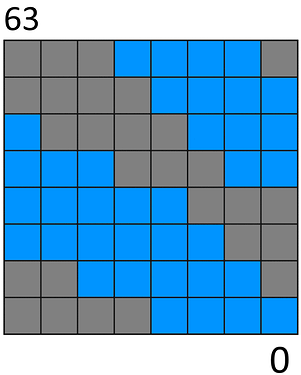

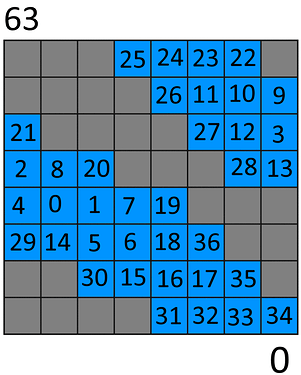

Board Representation

I stored most of the information about the board as int[37] where each int holds all the information of the cell. Later, I found out about bitboarding and the possibility to store the entire board in just few ints, and even compute the shadows with bit shifts with a hex bitboard. I chose not to pursue this representation because at all times my main bottleneck appeared to be generating the list of possible moves at each state. In hindsight, implementing the leaner bitboard board representation would’ve allowed me to more easily compute shadows and do more interesting things with pruning the possible actions using heuristics.

MCTS (simultaneous)

This was my first time implementing any game tree search algorithm (haven’t even done minimax before) so it was definitely a struggle. My initial MCTS used alternating nodes for me and opponent until I realized this gives opponent nodes information about what action I had taken.

I re-wrote the MCTS to be simultenous by storing both actions in a node, and chosing actions independently for each player based on the UCB. I believe this is MCTS DUCT although I found about the paper later and haven’t read it carefuly.

I also implemented storing the tree between moves and changing the new root to the current node whenever it existed (i.e. my opponent move was one of the expanded children of the prior root). I thought this was really cool but it didn’t end up mattering much due to general performance issues with my code.

Pruning

For pruning the possible moves I’ve used fairly conservative rules and probably should’ve spend more time to make pruning more aggressive:

- Seed: use preferred seeding indices (where the seeding tree won’t shadow itself) in the first 18 days. Also in the first 14 days don’t seed at all in a cell that would shadow with any of my trees (assume tree size 3 for the seeded cell to make this easy)

- Grow: don’t grow beyond 5 trees of the next size

- Complete: don’t complete in the first 11 days. Also don’t complete if I have less than 3 trees of size 3 and less than 5 trees of size 2 in the first 20 days (to avoid sun starving).

Scoring

I spend a lot of time tuning my scoring function. My intuition is that if the scoring function is a perfect predictor of the end game score from that state (assuming optimal moves) then you’ve basically solved the game. This is also why a NN might be a good fit (it just has to map a board state to score).

My scoring as usual was fairly complex. It took into account current scores, nutrients, sun, current day, the number of trees of each size and the number of directions from which those trees can be shadowed (i.e. across all the 6 directions). After tuning it in the web IDE it looked to be a pretty decent predictor of who’s winning.

Lessons Learned

What went well

- Unit testing and ensuring that simulating the game works correctly

- Asserts (pre-submission) catching most of my bugs

- Scoring function seemed to work well

- Created a Timer utility class which was handy in diagnosing issues and could easily by turned off

What went poorly

- Code bloat and trying to code in 1 file for the first few days (after which I split up code files and started a python script to stitch)

- A lot of time diagnosing random timeout spikes which turned out to be GC

- Expensive MCTS Node representations (containing a map, multiple lists and few other variables each)

Where I got lucky

- Adding a line to access all my static fields (to force my static initializer blocks to run) and adding a System.gc() at the start of main solved most of my timeout issues

PS (edit after reading posts above): Thanks for the great PMs, you guys are truly inspiring and I’ll revisit the strategies without the time pressure over the next few months. Also thanks to game makers and GC for hosting; this was a great game/competition!

, your minimum padding of 3 avoids some masks compared to my minimum padding of 2.

, your minimum padding of 3 avoids some masks compared to my minimum padding of 2.