115th Legend

TL;DR:

- a pleasant contest overall; I would like to have contests like this more often

- interesting game, even though wind stacking was overpowered

- please fix the submits and/or the ranking system, especially if you plan to have heuristic-friendly games more often.

Feedback

My goal for that contest was to pass legend and be in the top 100. When I first saw the game rules, the goal became to pass legend OR be in the top 100. I’m really bad at heuristic-friendly games (by that I mean games where usual search algorithms are not overpowered).

At first, I was disappointed that the game was a recycled one, with bugs, overpowered wind stacking, and missing or wrong information in the statement. That may have been due to my raised expectations after waiting a whole year, or to my frustration at having to deal with my weakness in this long-awaited contest. Still, even a recycled game is nice to have for a contest, and the staff addressed the bugs. As for overpowered wind stacking, even though I believe it should not exist, it did not bother me: I did not plan to become a top player in this contest, so I ignored it. The wild mana win criterion seemed off to me, though. Since that information was hidden from the players while both players’ HP were known, I thought it forced us to attack the opponent.

Making a contest on a more heuristic-friendly game is not a bad idea. However, it requires much faster submissions. Since we cannot test locally how well our bot performs against other players’ bots, we have to rely on the arena, yet submits took hours to complete, which made testing ideas quite hard. Decreasing the number of matches per submit is not the solution: the ranking system is already unstable, even more so with a heuristic-friendly game, and the situation would get worse with fewer matches to measure a bot’s performance.
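To make the point about match counts concrete, here is a simplified sketch in Python. It treats a bot’s strength as a plain win rate and uses the normal approximation for its confidence interval. The arena actually ranks bots with a rating system rather than a raw win rate, so this is only an illustration, and the match counts are arbitrary examples.

```python
import math


def win_rate_half_width(p: float, n: int, z: float = 1.96) -> float:
    """Half-width of the normal-approximation confidence interval
    (95% for z = 1.96) on a win rate p measured over n independent matches."""
    return z * math.sqrt(p * (1.0 - p) / n)


# Uncertainty on a true 50% win rate for a few hypothetical match counts.
for n in (50, 100, 200, 500):
    print(f"{n:4d} matches: 50% +/- {100 * win_rate_half_width(0.5, n):.1f} points")
```

The width scales with 1/sqrt(n), so halving the number of matches widens the interval by a factor of about 1.41, while quadrupling it only halves the width.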

There are many ideas I have not tested, because I knew I would not have time to check whether they improved my bot’s strength. It’s frustrating, but not that much, since I was not competing for the top places. Other people may have a different perspective, though: this has been a recurring issue for years, and to my knowledge, there has been no communication about addressing it.

Strategy

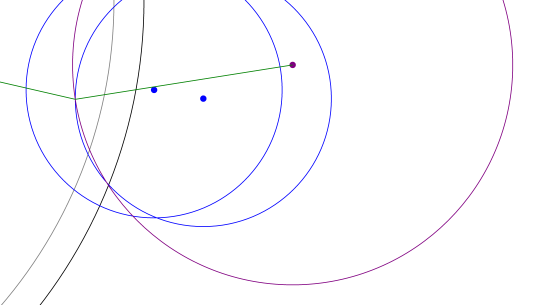

I won’t say much about my strategy: it relied on the same kind of heuristics other people used, nothing notable here. Two defenders keep an eye out for a possible single attacker, and one attacker is tasked with finding good mobs to send to the enemy base. Heroes were only allowed to cast wind, as I never managed to handle control and shield in a way that actually improved my win rates.

Methodology

What I would like to share is my plan to pass legend. I had reached the gold league with my bronze bot, so I was quite far from the gold boss, with a really dumb bot. On my computer, I made one bot version that only defends, another that defends and farms wild mana with three heroes, another that uses one hero as a dumb attacker, and two variations of the latter with different defense tactics. For clarity’s sake, let’s name those bots DEFEND, FARM, MIXED1, MIXED2, and MIXED3. None of them was clearly better than the others when tested locally. The code was a mess, adding improvements led to highly variable win rates, and I was unsure of what worked and what didn’t. Here is the method I chose:

- find a way to structure the code

- write a bot with two defenders and one idle hero, and measure its strength against the five previous versions

- once this bot clearly defeats the DEFEND version (so it defends better with only two heroes) and has a win rate above 20% against the three MIXED versions (so it correctly resists a dumb attack with only two heroes to farm and defend), go to the next step

- implement an actual attacker, test one idea at a time, until the win rates are all above 90% vs DEFEND, FARM, MIXED1, MIXED2, and MIXED3
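The two gates of this method can be written as small checks over local benchmark results. This is a minimal sketch: the win rates are made-up example numbers, and the 60% threshold standing in for “clearly defeats DEFEND” is an assumption, since no exact figure is given.

```python
# Hypothetical names: the five local reference bots described above.
MIXED_BOTS = ("MIXED1", "MIXED2", "MIXED3")
REFERENCE_BOTS = ("DEFEND", "FARM") + MIXED_BOTS


def defense_step_done(win_rates: dict, clear_win: float = 0.6) -> bool:
    """Gate 1: the two-defender / one-idle bot clearly beats DEFEND and wins
    more than 20% of its games against every MIXED bot.
    `clear_win` is an assumed threshold for 'clearly defeats'."""
    beats_defend = win_rates["DEFEND"] > clear_win
    holds_vs_mixed = all(win_rates[bot] > 0.20 for bot in MIXED_BOTS)
    return beats_defend and holds_vs_mixed


def attack_step_done(win_rates: dict) -> bool:
    """Gate 2: with a real attacker added, every win rate is above 90%."""
    return all(win_rates[bot] > 0.90 for bot in REFERENCE_BOTS)


# Made-up example numbers, not measured results.
local_results = {"DEFEND": 0.72, "FARM": 0.55, "MIXED1": 0.31, "MIXED2": 0.26, "MIXED3": 0.24}
print(defense_step_done(local_results), attack_step_done(local_results))
```

With these example numbers, the first gate passes and the second does not, so the next step would be working on the attacker.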

It was hard at the beginning, because I could clearly see that the attack was the biggest issue with my arena version. However, I thought it would be hard to measure any improvement from a good attack while my defense was weak. To this day, I have not implemented all the tricks I had in mind for the attack, but they were not necessary to pass legend.

For fun, I decided to submit a borked version of my attacker, as it got high win rates against all my previous versions. That version passed legend, to my great amusement. I then fixed the attacker on Sunday, as I was too ashamed to watch my bot’s replays.

Code Structuring

As for code structure, I finally found something that works well for me. I have one function for defense and one for the attack. Both return the remaining mana, so I can make sure I won’t run short when casting winds. The defense function is called first and handles the last two heroes; that way, defense gets priority when spending mana. Then the attack function is called with the remaining mana as a parameter and handles the first hero.
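A minimal sketch of that mana budgeting, assuming a wind cost of 10 mana and reducing each hero’s decision to a placeholder “WIND” or “MOVE”. The function names and the `needs_wind` map are hypothetical; the point is only the call order: defense spends first on the last two heroes, and attack gets whatever is left for the first hero.

```python
WIND_COST = 10  # assumption: mana cost of the wind spell


def cast_or_move(hero_id: int, mana: int, needs_wind: dict) -> tuple:
    """Cast wind if this hero wants to and mana allows, else move.
    Returns (action, remaining mana). Actions are placeholders, not game commands."""
    if needs_wind.get(hero_id, False) and mana >= WIND_COST:
        return "WIND", mana - WIND_COST
    return "MOVE", mana


def defend(defender_ids: list, mana: int, needs_wind: dict) -> tuple:
    """Hypothetical defense step for the last two heroes; returns remaining mana."""
    actions = {}
    for hero_id in defender_ids:
        actions[hero_id], mana = cast_or_move(hero_id, mana, needs_wind)
    return actions, mana


def attack(attacker_id: int, mana: int, needs_wind: dict) -> tuple:
    """Hypothetical attack step: only spends the mana defense left over."""
    action, mana = cast_or_move(attacker_id, mana, needs_wind)
    return {attacker_id: action}, mana


def play_turn(hero_ids: list, mana: int, needs_wind: dict) -> tuple:
    # Defense runs first on the last two heroes, so it has priority on mana.
    actions, mana = defend(hero_ids[1:], mana, needs_wind)
    # Attack runs second on the first hero, with whatever mana remains.
    attack_actions, mana = attack(hero_ids[0], mana, needs_wind)
    actions.update(attack_actions)
    return [actions[h] for h in hero_ids], mana


print(play_turn([0, 1, 2], 15, {0: True, 1: True}))  # -> (['MOVE', 'WIND', 'MOVE'], 5)
```

In this example, the attacker also wanted to cast wind, but defense spent the mana first, so the attacker falls back to moving.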

In each function, I start by collecting data that matters for the decision to take:

- where are the opponents

- where are the mobs this turn and in the next turns, and in how many turns they’ll leave the map or reach a base

- where are my available heroes for the task, filtering out the controlled ones.
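One piece of that data, the number of turns before a mob reaches a base or leaves the map, can be estimated by stepping its motion forward. This is a simplified sketch with assumed constants (map size, mob speed, a 5000-unit radius inside which mobs head straight for the base, and a 300-unit damage radius). It ignores coordinate rounding and any spell effects, so it is an approximation rather than a faithful simulation of the game rules; call it once per base.

```python
import math

MAP_W, MAP_H = 17630, 9000  # assumption: map size
BASE_PULL = 5000            # assumption: inside this radius, mobs head for the base
BASE_REACH = 300            # assumption: a mob this close damages the base
MOB_SPEED = 400             # assumption: mob speed in units per turn


def turns_until_exit_or_base(x, y, vx, vy, base_x, base_y, max_turns=50):
    """Step a mob forward; returns ("base", t), ("exit", t) or ("none", max_turns)."""
    for t in range(1, max_turns + 1):
        dx, dy = base_x - x, base_y - y
        dist = math.hypot(dx, dy)
        if 0 < dist <= BASE_PULL:
            # Inside the base area, the mob walks straight toward the base.
            vx, vy = dx / dist * MOB_SPEED, dy / dist * MOB_SPEED
        x, y = x + vx, y + vy
        if math.hypot(base_x - x, base_y - y) <= BASE_REACH:
            return "base", t
        if not (0 <= x <= MAP_W and 0 <= y <= MAP_H):
            return "exit", t
    return "none", max_turns
```

For example, a mob at (1000, 1000) near a base at (0, 0) is about 1414 units away and closes 400 units per turn, so it reaches the 300-unit damage radius on turn 3.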

Next, I analyze the data to assign a goal to each hero. For example, in defense, I could task a hero with guarding the base (staying inside it) because an attacker is nearby. In that case, the other hero is free to farm if no threat is found, or to remove the attacker if it becomes too dangerous given the mobs I see coming.
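The goal assignment for the two defenders could look like the following sketch. The `Goal` values and the decision rule are hypothetical: GUARD, FARM, and REMOVE_ATTACKER mirror the example above, while INTERCEPT and the no-attacker branch are assumptions added to make the function complete.

```python
from enum import Enum, auto


class Goal(Enum):
    GUARD = auto()            # stay inside the base, shadowing the enemy attacker
    FARM = auto()             # gather wild mana away from the base
    REMOVE_ATTACKER = auto()  # push the enemy attacker away
    INTERCEPT = auto()        # hypothetical: deal with mobs heading for the base


def assign_defense_goals(attacker_nearby: bool, threatening_mobs: int) -> tuple:
    """Hypothetical goal assignment for the two defenders, from the analyzed data."""
    if attacker_nearby:
        # One hero guards; the other farms unless the attacker has dangerous mobs to send.
        second = Goal.REMOVE_ATTACKER if threatening_mobs > 0 else Goal.FARM
        return Goal.GUARD, second
    if threatening_mobs > 0:
        return Goal.INTERCEPT, Goal.FARM
    return Goal.FARM, Goal.FARM
```

Keeping the analysis (booleans and counts) separate from the goal choice makes it easy to add a new consideration as one more input, without touching how each goal is carried out.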

Finally, for each hero, I search for the best way to carry out its goal. For guarding, that means staying in the base while following the attacker, so that I can intercept mobs sent to my base.
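For the guarding goal, one simple way to stay in the base while following the attacker is to stand on the line between the base and the enemy attacker, clamped to a radius inside the base. This sketch assumes a 5000-unit base radius and a hypothetical safety margin; it is one possible geometry, shown for illustration rather than as the exact rule used here.

```python
import math

BASE_RADIUS = 5000  # assumption: radius of the base area
GUARD_MARGIN = 800  # hypothetical: how far inside the base edge the guard stays


def guard_position(base_x, base_y, attacker_x, attacker_y):
    """Point between the base and the enemy attacker, clamped to stay inside the base."""
    dx, dy = attacker_x - base_x, attacker_y - base_y
    dist = math.hypot(dx, dy)
    if dist == 0:
        return base_x, base_y
    reach = min(dist, BASE_RADIUS - GUARD_MARGIN)
    return round(base_x + dx / dist * reach), round(base_y + dy / dist * reach)
```

Standing between the base and the attacker keeps the guard on the path of mobs pushed from the attacker’s side, while the clamp keeps it from being lured out of the base.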

This organization may sound trivial to some and useless to others. However, it helped me overcome the code mess I usually produce when working with heuristics. It also made it easier to tune the goal assignment by adding new considerations to the analysis, or to improve the goals’ implementation (e.g. MC simulations for farming).

Even longer version: my retrospective article

It was very fun, I greatly appreciate CG for hosting it, and I hope you keep doing a couple of these a year.

I checked for it, so I’m not sure how I missed it. I thought wind didn’t affect mobs outside the map.