Euler, congrats, you did a great job in this contest ! I found it interesting but didn’t quite understand your move search. Can you provide an example of how the matrix and algo worked ?

Thanks !

Euler, congrats, you did a great job in this contest ! I found it interesting but didn’t quite understand your move search. Can you provide an example of how the matrix and algo worked ?

Thanks !

I’m not euler, but I found this one (https://www.geeksforgeeks.org/hungarian-algorithm-assignment-problem-set-1-introduction/).

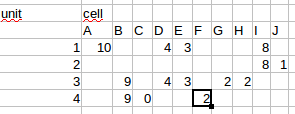

Let’s take the following board as an example:

We see some units (1-4) and cells (A-K).

Both unit 3 and 4 would like to move to cell B. So we could send unit 3 there and let unit 4 wait.

The alternative is to make unit 4 take cell B and 3 waiting or going up to D. Let’s assume that D is the closest to the opponent, so moving unit 3 upwards and letting 4 take the empty cell would be the best.

So we assign a score for each unit in combination with each cell:

Empty cells are 0, left out for readability.

As the Hungarian method requires a square matrix, we have to add additional rows (units) to it.

These are just 0s everywhere (no score for doing anything).

Then we can just feed it into our algorithm, that we copied from github

This requires O(n^3) time and gives us one optimal assignment for units to cells.

Note: most implementations use a cost matrix rather than score, so we have to multiply every value by -1.

In my example I used the same score for any unit occupying a cell. You can vary the function, e.g. prioritizing high level units at the front over low level ones or not giving a reward for a lvl3 unit entering a neutral cell.

Edit: the forum was lagging, I didn’t even see that @_CG_SaiksyApo replied before me

Here is what I have in my logs for this turret :

his cut gain=28 cost=20 move unit 9 to 5:4, build 5:3, build 6:3

This cut would have lost me two units.

The turrets I build does not necessarily prevent the enemy to make lethal, sometimes it’s just to prevent a regular cut.

Okay thanks for your explanations Neumann, your strategy is very clear!

I finished 17th Legend. I didn’t have as much time/energy as I did with previous contests, so I am very pleased with this result. I only made it into legend on the last night, after midnight. I never cut it this close before. The gold boss was so hard that I went all the way to the top half of legend as soon as I got in.

My code is even worse of a mess than it normally is, because of the time pressure. But amidst the 2.4k lines of duplicate code, bad naming and bugs, there were some good ideas:

Opening

I used the first 1000 ms to run a beamsearch for the first 12 turns (or less, it ends after half the map is taken). This beamsearch maximizes tiles owned and minimizes the distance to enemy HQ. This sort of has the effect of the bot trying to get as far out as possible. This gave me a very effective early game in terms of income. I always got the maximum income possible I believe (with a beamwidth of 5000 states), but it sometimes gave me a messy, hard to defend territory. If I had done it on a turn-by-turn basis instead of all in the first turn, I would have been able to respond to the opponent decisions and try to use voronoi to better control the map.

Chain Finish

I calculate the finish using a kind of Dijkstra (I think?). I start at enemy HQ and I move outward like a BFS and add up the required costs to get to each square. Every time I hit a square that has been visited at an equal or lower cost already, I break off that part of the search. At some point I hit my own territory and I record the cost to get there. I will keep expanding and hit other parts of my territory until it is no longer possible to get a lower “best” cost. Then I will have found one possible best path. The tricky part is taking into account enemy towers. Because I move in reverse I will not incur the 30 gold cost for a level 3 unit if I go onto an enemy tower, because that would mean I destroyed it already when reversing the path and it would not have the movement penalty after passing the tower. This is not true when multiple towers are near, so each tile also keeps track of the number of tower effects on it. You also need to be careful when hitting your own territory. I calculate 0 cost if there is a unit on there with sufficient level (so the first action would be a 0-cost move when reversing the path). It is a bit complicated but super efficient.

Cuts

This was hard for me to come up with. In the end I think I found a nice balance between computation and effectiveness. My code was unoptimized and uses a 2D array of Tile objects, so keep this in mind. The steps are as follows:

I make a list of all my tiles that border neutral or enemy territory. I don’t consider walls here.

I make a list of all opponent tiles that border any territory that is not owned by the opponent, including walls. In this case “border” also means diagonally, because cuts can work over diagonals.

I use a very similar algorithm as I explained above for the chain finish, except now it is from every start tile as described in (1) to every end tile as described in (2), so something like 15 times 30 start/end combo’s. Also, I don’t just get a cheapest path. I get ALL cheapest paths. So I allow two different paths with the same cost from A to B, but not a more expensive path from A to B if there is a cheaper one available. There are many instances where the long cuts are the best, but few where a more expensive cut from A to B is better than a cheap cut from A to B. I felt this was a good way to remove a ton of branching.

This gave me an opportunity to make extremely long cuts if I wanted to. I had no “depth” limit. Well… in fact I limited it to 200 gold because in rare instances, gold boss would save 300g and time me out while trying to calculate his cuts. I did this, of course, to be able to put towers in the paths of these cuts. To calculate the worth of the cut I did floodfills for both sides to see what the change in value is for the connected territory. This scorefunction was very rudimentary and could have been much improved.

Limits of this cutting strategy:

a more expensive path between A and B may cause more damage. I don’t consider this. This strategy could be made to take these paths better into account by also allowing an extra 10 cost to get to an already visited tile (or 20 or more). The larger this number is, the more you will branch. There are probably many possible tricks to use to make this better.

I didn’t have time to check for the cost reduction you can use when moving from your own territory to your own territory. You can take out a corner of the path and “move diagonally” in this case. This can lower the cut-cost by 10,20 or even 30g.

When you move from your own territory to your own territory, you can use a wall-connected area as a cutconnection between the two parts of the path that connect to your territory. There are some instances where this would work (with holes in the map). You cut from two places at once, ending on the wall area, potentially doing more damage at a lower cost. I didn’t have time to try something with this.

I did many other things in my code, but I don’t think any of them are worth mentioning. Most of it is common sense and a lot of it required more refinement.

Thanks to the creators for another fine CG contest. I had fun!

Hello everyone, thank you all for participating !  I have not seen all top AI yet, but most of them are really nice, and thank for your post mortem !

I have not seen all top AI yet, but most of them are really nice, and thank for your post mortem !

I finished 51st (7th in gold), could not be above the gold boss. I went with Python, and by trying to rush, I quickly had an ugly code… I did so many BFS (that were not optimized) that I sometimes had time-out (between 40ms and 55ms in “stress situations”). This could be reduced to 10ms by changing the BFS implementation.

If it can helps some of you : profile your code to find bottleneck. It appeared in my code that lambda function were costly, and hash collisions too (I used set() in my BFS). Replacing the set() by a list of bool improved by a lot your code speed in Python.

Anyway, nothing fancy in my AI : I move toward enemy HQ, and at each frame I find the best cut I can do, then all my cells the opponent can achieve with TRAIN, and based on this last criteria, I place towers to protect myself from cuts.

To find the “best” cut, I do a BFS from all cells of my border (being the first not-owned cells adjacent to an owned cell), and find the minimum cost to train to any cells. Then I test all possible cuts I can afford, and take the one with the best score. I changed multiple time the scoring, and nothing worked too much for me, but I tried to maximise the number of lost cells for the opponent while not losing too much money or income.

Thanks again everyone for your participation, thank you CG and all the testers !

Hello all !

thx for the contest, really fun ! and thx for all the messages and explanations in the forum.

I have a question for the admin though:

would it be possible to have the game still up and running so we can still play it ? just for the fun of optimizing our code.

Thx !

Very nice contest.

Rules are simples and not too looooong to read

I didn’t do much.

Bronze with a Manhattan.

Silver with a BFS.

I finished number 2 in a Code of Ice and Fire (which puts me 2 for 2 at being 2). Thanks to @Azkellas and @Tehelka for a great game, and for everyone at CG for hosting such great contests. Congratulations to @reCurse, the worthy winner.

The game was interesting. Two aspects combined to make searching a nightmare:

That meant that a pile of heuristics seemed likely to be the way to go.

I had some concerns with the game at various points. There was a very strong early tower meta, which I worried made higher level units pretty pointless. At various times, the game seemed rather prone to large swings, but high level games mostly got over that. The one remaining problem is mines, which simply seem too weak - but overall, the game is pretty well balanced and has a lot of interesting aspects.

Here’s a sketch of the interesting bits of my algorithm.

We detect cuts for the two players in the same way; I’ll assume we are looking for our cuts against the enemy when describing the algorithm. The algorithm finds a single, best cut.

This has the advantage of finding almost all good cuts, while considering relatively few cuts, which is useful because we do this many times each turn. However, it misses a fair few good ones - when there’s a good cut to a square, but also a cheaper path to it that is a bad cut.

To defend an enemy cut, we do the first of these that works:

If I had more time, I would have:

Of course  We hope the game to open for multiplayer around next week, after we fix some “details”

We hope the game to open for multiplayer around next week, after we fix some “details”

Good !! super happy to see that

Loved the contest. My best rank was #2 in Gold

I used Simulated annealing at depth = 1 (just a dummy enemy). My dummy never builds or trains, only moves.

I had two different scoring functions (1 for enemy and 1 for me). Both are very similar except enemy doesn’t have a score for towers and mines.

My Eval:

I simulated around 1000-3000 options during every turn to decide the best combination of moves.

My first 6 of those simulations are finding a combination of moves followed by the best training chain. (These simulations never build towers). The moves are also picked in careful order with a global priority. Say in first simulation all the units try to kill opponent troops, then capture opponents’ cell, then capture neutral cells and if none of them is possible move to my own cell. In the other 5 simulations, I choose a different order of movements)

The rest of the simulations are random with proper pruning.

All my unit movements are completely random followed by either building towers or trainings. The probability of building towers before training is 1/2 and after training is done is 1/2. Probability of training T1, T2 and T3 troops next is 8/10, 1/5 and 1/10 for 100 iterations if I have gold)

Train T1 Troops only on neutral positions or enemy captured positions.

After the first 10 turns, the location chosen must be the closest to enemy HQ. (same as gold boss).

Train T2 Troops only on top of T1 troops and if I initially had >= 30 gold.

Train T3 Troops only on towers or tower affected areas or T2 troops if I initially had >= 40 gold

Build towers with a minimum gap of 2 with each other. Initially place towers defensively (2 distance from opponents). Later based on who is leading the game, choose to build towers at 1 distance from enemy (if leading) or 2 distance from enemy (if losing).

There’s a maximum threshold for each of my units which also depends on the number of neutral cells on the first turn of the game. If all the cells are neutral then my threshold numbers are 21 T1 troops, 3 T2 Troops and 6T3 Troops and 7 towers.

Overall the contest was very addicting. I did not sleep in the last 48 hours trying to get to Legend. Nevertheless, this is one of the best rankings I have had in the community contest. So I’m happy. Thank you contest creators.

My first contest in CG, with a decent 190th in Gold, submitting code in the last hour.

Also as my first coding competition, it really inspired productivity, and wrote over 1500 lines in Javascript, and testing the code countlessly in the game IDE

After getting into silver on the 2nd-3rd day I was working on rewriting the game simulation environment with proper objects and methods and wrote a bunch of feature extraction until 8th day. At that time started writing a game logic based on heuristic, observations and other comments on the forum and chat,

mainly ending up with a common solution :

Could not finish up tweaking the heuristic game logic , as there are a set of ideal actions that can be described, and the part of my code with building towers did not work yet. Sometimes the code randomly broke, and repeating the game the malfunction didn`t appear. I assume it was a Javascript timeout.

My game logic was based on the feature maps like:

Ideal aim for heuristic logic was:

My experience on this contest, that I have spent too much time thinking and working out different feature detections in the allotted time, which I have not used in the end that much at all.

All in all I have enjoyed the contest. Thanks

Rank 1! It’s been two years (already?) since I last won an official contest here, so I am obviously really happy. It was a tough competition, kudos to the other challengers for a fierce battle, you did great!

I have been on the fence on whether I like the design or not for most of the competition. The foundations are solid and gave me a good vibe at the start, but the rise and complete domination of the chaining mechanic seemed to overshadow the entire game. In the end though, I think I expected a very different game to develop and was disappointed when it turned out to be something else. After playing more with it, some more subtleties started to emerge and made me appreciate its rich depth.

Nonetheless, I still have a slight dislike of how the game state is too brittle and volatile, making it prone to a large swing of momentum that is very hard to recover from if done properly. Figuring out a move is almost like walking on eggshells, navigating a minefield of shallow traps. The meta heavily favors playing as defensively and greedily as possible to finish on one single game ending move. Not the most exciting kind of game to play or watch, but still.

Yet it is also a coding competition after all, so game design is not everything. And on the coding part, it hit very high marks. I am pleased to see the variety of ways the problem was tackled, namely on dealing with chains and choosing moves, which gave way to a lot of creativity there, if less so in actual game strategy. The branching factor was huge, which allowed both search and search-less bots to thrive, and optimization was not the absolute end-all be-all. It is also impressive to see 3 of the top 4 bots were coded in garbage collected languages!

So overall, this contest was really well done. I could comment more on design stuff I wish the game did differently, but it’s pointless. I would even recommend against making any changes when the multi comes out, as the past has shown the level of activity drops too sharply post contest. Those changes would hurt more than help, as untouched contest bots are less viable out of the box, which still form the majority of the multi leaderboard.

The statement screamed bitmaps (or bitfields) at me on the first read. Small grid, lots of floodfill, neighborhood and surface checks… So the first thing I coded is a Bitmap class to handle all those operations, and my game state therefore contains one bitmap for each feature: walkable, mineable, active, owner (per player), units (per level), towers and mines. Combining them gave all the information needed for very fast simulation of game actions, which I did next.

The rest was pretty much run-of-the-mill implementation, and trying to minimize computing the activation map which dominated the rest.

From now on, what I will refer to as a plan contains all the actions (move, train, etc.) consecutively taken during a single turn.

To start, actions must be generated from the current state. This list is comprised of:

I purposefully exclude training level 2 and 3 units that are not part of a chain. I believe there is no situation under which it is a good action, because aggressive upkeep costs are crippling the most precious game resource: gold income. A decisive chain however does not make it an issue, as the game should be largely won by that point.

A simple hill climbing algorithm then picks the action from the list resulting in the best evaluation score. A new state is computed as a result of that action, new actions are generated from it and the search continues, until no action further improving the score can be found.

I tried different approaches with various degrees of pruning techniques and results (e.g. depth-first search, breadth-first search…). Ultimately I settled on a compromise in the form of a best-first Dijkstra search performed on every valid starting position, using gold spent as the cost function. Those were either units that have not moved yet, or cells on the front line that can be directly trained on. It would then search nearby cells that were not already owned, until it ran out of money or space to explore.

It is important to note the actions making the chain were not simulated for performance reasons. And for the same reason, a different and faster evaluation function was used on every step to keep the best chain found. It was primarily based on lethality, the number of new active cells resulting from the chain, its gold cost, and an additional penalty for using level 3 units.

A further improvement that came later on was to keep the X best chains found instead of just one, to make up for the weaknesses of the light evaluation function. The real best one can then be figured out with the normal, heavier evaluation function, along with the other actions evaluated in the root search.

It is just as important, perhaps even more so, to anticipate the opponent moves following yours, especially if it would be a massive blunder. I originally tried to run a chain search for the opponent and executing it in the state given to the evaluation function, but it proved to be too expensive due to the massive number of calls, causing blind spots in crucial moments due to aborted searches.

What helped a lot more was to decouple computing the opponent response from the plan search, composition and evaluation step described above. So at first, a whole plan is formulated as if the opponent would not do anything, and scored as such.

Then, once a plan is completed, a chain search for the opponent is performed on the resulting state. The resulting opponent plan is added to a memory of opponent responses, and the entire plan search algorithm restarts from the beginning, with a key difference. On every evaluation, it will execute all of the stored opponent responses on the state being evaluated, score each of the resulting states and keep the lowest one, behaving similarly to a minimax search.

This process will repeat itself until it runs out of time, or until the expected opponent plan already exists the memory. In practice, this rarely exceeds 5 or 10 different responses, which keeps it fast enough. The best plan according to opponent responses is then sent to the game.

The result was a massive improvement in strength. It also allowed me later on to just run the exact same, full plan search for the opponent instead of a single chain search, once the planning got good enough.

This remained the weakest part of my bot for a long while, as I spent most efforts on the search described above, along with optimizing and lots of bug fixing. I was convinced I could just iron out this part later, as it was more important to have those other solid foundations first. I think it paid off tremendously, as a good evaluation on a broken search is so much worse than the other way around. It is also a lot easier to tweak towards the end of the competition, as compared to doing major algorithm work.

The main features of my evaluation function:

I also eventually doubled the weight on gold and income past an arbitrary turn number. This resulted in very valuable behavior changes. It stopped overspending and overdefending in the mid game, instead building up income and gold reserves to prepare attacking. This also favored very aggressive chains that would cripple the opponent income.

Having a heavily optimized simulation and bot also paid off tremendously in the form of local testing. I was able to self-play a little under 1000 games per minute, which was a great way of aggressively pruning bad changes and spotting potentially good ones.

The viewer was a bit lackluster in usability this time around. The frames were sometimes confusing in the execution and change of player turn, and matching it up with the error output was even more of a pain than usual. The lack of a debug view also doesn’t help readability, especially since level 1 and level 2 units were hard to distinguish IMO. I ended up coding my own viewer to help with those problems (e.g. big circle with 1, 2, 3 inside).

Thanks to @Azkellas, @Tehelka and Codingame for the amazing hard work put in making this contest a reality. Thanks to everyone for participating and making this a fun community event!

Hi everybody,

I just discovered Codingame a month ago. I was really amazed with the platform and its community.This was my first contest, it was very interesting and I learned a lot in the process.

I managed to implement several functions, calling they in this order:

I applied variations of this functions depending of three stages of the game with their own goal:

a. conquer more territory

b. build a defense with towers

c. explore the rest of the map

I wonder if, in addition to path searching, some graph algorithms could be used to find the better spots to cut off the enemy.

Thanks a lot to @Azkellas, @Tehelka and other collaborators of this game. It was a great work!

Also thank to all the players that shared their insights and strategies.

Thanks to @Azkellas, @Tehelka, it is a great contest and I really like it.

Since I have some other stuffs to work on, my goal is simply to get to gold before the contest started.

With the limit of time I had, I decided that I won’t write a simulator anyhow.

So I write a flood-fill without taking action order into consideration.

And that flood-fill algorithm just teleport me from wood 3 to bronze…

Add the logic to detect when to use level 2 and level 3 units and get to silver.

While I was in Silver, I think it should be the time when I need to implement the cutting logic.

So I spent some days doing other stuff while thinking how to implement that without a simulator.

I ended up with a 24 cuts search, 12 rows and 12 columns.

Search if I can occupy a row or a column and calculate the enemy territory to the left and to the right (or up - down).

This worked dramatically and get me to gold.

Rank 254 overall.

Very satisfied with the result and didn’t touch the code again.

10th final, best result so far

i really liked this contest, chains were interesting from the beginning, and even though tower defense seemed too strong (no upkeep cost and survive cut), the game still had enough other details/concepts to kept us busy (performance optimization was not crucial).

also, it was easy to improve incrementally (or fix bugs). as without the need of heavy background calculations or multi-turn plan ahead, inefficiencies can be easily spotted. and without randomness those can be reproduced in ide/local.

implementing a proper set of early heuritics kept me in top3-10 first week. but as usual, those run out of steam. at least this time i was able to implement some more sophisticated versions and after fixing all bugs in those i got back to top10.

competition was strong (eg. halite top players participation), congratulation for winners + early top: Euler and Siman.

early heuristics (order of appearance)

greedy move/train lvl1

killing blow

heuristic tower defense

train lvl2 + 2step cut to kill oppnent lvl2

hardcoded first turn movements

order of actions

-killchain

-moves

-opponent killchain

-cuts

-simple tower defense

-train

-mine (single one)

first turns moves

-still greedy

-focus on going toward opponent base

-avoid situations where a unit cannot take an empty cell

-tried different greedy methods for maps with huge holes, without success

-should have added random/simulate-ahead method

killchain

-DFS from opponent base

-post adjustment to compensate tower kill

moves/train

-greedy with score calculation

-avoid stronger opponent units

-check cut value with DFS from opponent base

-no need to stay on tower defended cells

-avoid being easily cut (leave undefended cell next to opponent unit)

-avoid many “backfiller” units

-prefer move toward opponent base/unit

cuts

-BFS from edge points

-only through not-actively-owned cells

-check cut value with DFS from opponent base

-only check cut value if it really cuts (check on nearby cells)

-steplimit to avoid timeout (9-7 somewhat dynamic)

cuts order

-run a few rounds for self and for opponent

-with various gain rate, and minimum gain parameters

Legend 6th.

Really good contest. I like this game and I also like visual design of this contest so much, it is so cool.

Simulation

I used Simulated Annealing. I simulated only my moves, no opponent moving simulation.

I divided my turn into two phases: MOVEs, then TRAINs and BUILDs. First, simulate the MOVEs in 10ms, then the TRAINs and BUILDs in remaining time. These are using same evaluation function.

In the MOVE simulation, I annealed movement order and moving directions of units.

In the TRAIN and BUILD simulation, I annealed units train position and its level, and tower construction positions. No mines I used.

I did not optimized the annealing method a lot. I spent a lot of time to improve the evaluation function.

Evaluation

In the beginning of the game, evaluate that securing a lot of areas is the most important task, and if it is same evaluate that the distance to opponent HQ is small. In the later half, saving gold is more important than securing areas because I wanted to do a powerful attack that reaches the opponent HQ.

Also, I calculated the max range in which opponent player can train units. We can calculate this by doing Dijkstra Search from opponent HQ. (In Dijkstra Search, if in opponent area moving cost as zero, if not, moving cost as the minimum cost to training unit on that cell). My own cells in this range are considered to be occupied by the opponent player on the next turn, so decrease the evaluation value. As a result, I got the result of building the tower so that the range attacked by the opponent would be small.

Thank you for the explanation euler, very clear !