The topic where you can tell how you liked the challenge, & how you solved it.

This was my first CG contest and I quite enjoyed it, especially the very helpful chat #general_de. Shout outs to @eulerscheZahl, @schubc @DerRadikaleRusse, @mmmd and others!

I used C because that’s what I’m most comfortable with, but I probably should have used something that puts less stones in my way. I had many ideas which I wasn’t able to implement because of my lack of knowledge of AI porgramming and all the troubles I had to solve before even thinking about strategies.

The website on hexagonal grids was a great ressource and the linekd sites including path-finding were a great read.

I ended up submitting a version with MOVE commands and a simple chain of if() trying to achieve some form of intelligence, even though I spent two days of re-writing it all to have some breadth-first-search to steer and avoid mines which kind-of worked out but I did not manage to get enough complexity into a “scoring” evaluation to come even close to the version with simple if() and MOVE commands.

==> overall a great learning experience and it’s been a long time since I wrote and re-wrote that much code from scratch in such a small timeframe. Loved it, will play again!

BlitzProg, 292nd

Well, unlike the last time, I’m quite satisfied about this one given the very short time I could invest (like 6-7 hours total). Work, headache, cold, stress, busy week, and spending the week end away from home on a little computer.

Wood3 to wood2 by changing the default behavior (go to center) to “go to the last element of the list”

Wood2 to bottom-bronze by making sure it’s rhum, and shooting at the last enemy of the list once every 2 turn.

I didn’t do anything for almost the entire rest of the week because stress and things I mentioned above. Then presidential elections results came in, felt a relief and decided to keep going during the last 10 hours.

I switched over to PHP because C++ was a too big risk for me, and wrote a pseudo-simulator that could simulate about 1k per turn (not everything is accurate and I had to change a few things to stay effective). PHP is slow

Then, monte-carlo to search a fast way to reach the closest rhum barrels. Along with a slightly more elaborate way of firing, so I anticipate my target’s speed and fire when my research yield a command that does nothing, so I replace it with fire. my AI quickly became much smarter, and I skyrocketed all the way to top silver.

A few bugfixes and further improvements to avoid firing during a cooldown obliterated the silverboss, so I went to bed. Woke up 292nd overall.

Still a bit disappointed that was a such busy week. Because I only realized now how much room I had for improvement

I’ve finished ranked 151st.

I went up to silver league by using this single algorithm:

if "ennemy ship within 5 hex" :

"shoot a cannonball on it as if it is sailing at with the same speed and

same direction during the time the cannonball should arrive"

else

if "there's still some barrels":

go to the closest rum barrel (using MOVE command)

else

go to the closest opponent (using MOVE command)

endif

endif

To go further I used a tree system to simulate the next three move combinations and an evaluation function to select the best combination. The fact the ships move were independently evaluated was not very efficient on multi ship maps but it did the trick to reach my final rank.

As a side note, this was my second challenge and for this one I tried to do some Groovy… and I lost a lot of time because of performances, my code was always on timeout. So, thanks to this article I managed to reduce Groovy overhead and to get rid of these performance issues and to finally submit my code to reach the gold league.

To sum up, this was an interesting exercise for me to discover how groovy is running compared to java and to work on hex grids computations.

Many thanks again for this great challenge.

82nd.

I used a Monte Carlo algorithm for this game:

simulate a sequence of random moves, calculate the damage you take, the barrels you pick up and so on. Repeat this with several sequences and rate them according to their outcome. I added bonus points for collected rum, low distance to the next barrel and speed of my ship (a standing ship is easier to hit). A penalty is given when reaching the edge of the map, as you have to turn around and are a simple target at that moment. This scoring helps you to avoid mines and cannonballs without explicitly implementing it.

My simulation was quite incomplete (e.g. if two ships want to enter the same field, I actually moved the first one).

This was all done without shooting or placing mines so far.

Then I tried to replace WAIT by MINE or SHOOT:

you can calculate all possible paths of your enemies and calculate the damage for each of them. When you are able to hit a field in such a way, that all the paths with minimal damage are covered, it is a secure hit.

If no such shoot can be made, check if your enemy is following you and would reach the field where you place your mine when using WAIT. These hidden mines are quite effective, as they are hard to avoid (I added a danger level for potential mine positions and gave a penalty in my scoring function when reaching these fields).

If this doesn’t apply as well, try to shoot a mine, that damages most of the potential enemy paths or shoot at barrels close to your opponent.

Some more tiny features I added:

sacrifice one of your ships to collect the rum reward with another ship, help that one to stay alive longer

estimate the distance of two fields in the matter of turns it takes for a ship by precalculating it on an empty map

simulate your opponent first, try to react to it, hopefully avoiding to get blocked

try different parameters. Run many matches with the brutal tester, while varying them (this can be done by another program).

38th. I took the base GA from my CSB solution and reused that. It would generate a solution of 3 turns for up to 3 ships. I’m not sure whether it was the low number of simulated turns or a bug in my simulation, but generating more than 3 moves ahead worked very poorly for me. The GA would choose one of 5 moves (FASTER,SLOWER,STARBOARD,PORT,WAIT). Any FASTER/SLOWER at max/min speed would be replaced with a wait. The final position was then evaluated with scoring for total health, distance to nearest rum and penalties for being too close to another of my ships / being at the edge. If there weren’t any barrels left I’d try to minimise the distance to the enemy if I had less rum, or maximise the distance (with large penalties for being near the edge) if I had more or the same rum. Getting shot / hitting a mine had a very large negative penalty (this was to fix some losses where I’d go through a mine to grab a barrel, but something would happen on the way).

Originally any waits were replaced with FIRE at the enemies estimated position (based on moving them directly forwards at current speed until the ball would arrive at the same time as them).

This was enough to get me around top 10 gold (best was 0.5 below boss in 2nd), and I wasted a lot of time trying to tweak this method enough to get into legend.

On Sunday evening I decided to I needed a much bigger change, and very quickly wrote a system to get better targets. This worked by calculating every possible position of the enemy for 3 turns and adding a count to that cell. Targets were then calculated based on the value of the cell (a higher value meant more moves would leave an enemy there). Any move that got rum would add 20 to the value instead of 1, and moves that hit cannonballs/mines would not count at all.

Even with that change I only beat the gold boss by 0.01 score, pretty much overtaking him on my last game. This code then was basically unchanged by the end (I added the ability to drop a mine if shooting was on cooldown and the enemy could make it into the hex behind me in 3 turns, but I haven’t seen a replay where this actually worked).

Looks like finish in 16 position if nothing will changed.

Move strategy: until there is barrels on field, collect them. For collecting I use Simulate Annealing with some evaluations, like position closest to barrel, amount of rum and so on.

My simulation was not calculate near mine damage and some another things and probably have some bugs.

If move strategy return me WAIT, I start checking, can I shoot, or place mine. Decision to where to shoot or place mine based mostly on “if”.

Mine placed if I have big chances to hit enemy ship. Mostly it is case when ship have speed, and will not reduce it by SLOWER in next turn.

When all barrels collected, my movement strategy turns into aggressive, or survival mode, depends on who have more rum at this moment, me, or enemy.

If enemy mode enabled - I become follow enemy ship with maximum amount of rum, trying have position with minimum of Distance(ship, enemyShip).

Again, if movement return WAIT, I shoot. If distance between me and enemy less then 3, I shoot.

I shoot with some prediction, where enemy ship will be in next 1-3 rounds.

To avoid collisions between my ships, I make known commands list, to use them for next ship decision.

If I’m in survival mode, I just try to be as far from another ships (even mine) and mines as possible.

It works really great, a lot of 1x1 ship games I win, even with strong players. 2x2 and 3x3 becomes much worse, because my ships doesn’t share information about where they fire, that can help to reduce enemy ship escape possibilities.

Also I haven’t time to implement sacrifice strategy and decide not to do it to be not broke my current results. Especially I was thinking that this “dirty hack strategy” will be changed somehow, since it was so unnatural.

Also was not so enough time to implement “delay barrel take”, “fire to enemy barrel”, “reduce enemy escape options” and so on.

Summary:

++ two weekends included - great contest length, but still not many as I prefer. Quick and dirty code makes me sad.

+++ Open referee

— Referee with bugs and not so good code on my mind. Since I program in Go, I port it and decide port it not “AS IS”, but with some optimizations and better code organization (on my mind of course :)). And later changes in Referee was not so trivial to merge in my referee.

Suggestions:

Additionally to Referee was nice to have test cases, like: game.IN, game.OUT.1, game.OUT.2… At least it help a lot to someone who port referee not “AS IS”.

4th - Legend

Thank you CodinGame for this great contest again!

Probably one of the contests where I invested the most time, and ended up with the biggest code

Basically I used the same approach as Ghost in the Cell.

The first step was to convert the Java referee to C++.

In order to make things more convenient, I had one big source code, that would compile as my AI, a referee or a testing mode, with some #ifdefs.

In referee mode, the program takes 2 bots as parameters and outputs the winner. I usually ran about 1000 games with a 2ms timeout, to quickly see if my new bot improved over the previous one.

In testing mode, I ran the referee with 2 deterministic dummy bots on a specific map (using a static seed) and compared the game states with the same match I ran before on CodinGame.

This allowed me to check that some optimizations I made to updateGame() did not break the simulation model.

My bot was a Genetic Algorithm doing 3 consecutive moves for each ship in 3v3, 4 moves in 2v2, 5 moves in 1v1.

In order to choose moves for the opponent, I ran the GA for the opponent against myself assuming I would WAIT, for 1/3 of the time limit.

Then I ran the GA for myself against the bests moves found for the opponent for 2/3 of the time limit.

Of course this meant I was very good at predicting my own moves, and I was afraid this would cause too much “inbreeding” but it turned out to be okay, and at least better than assuming my opponent would just WAIT.

I optimized a bit the simulations by using lookup tables for mines, barrels and ships collisions to avoid iterations on the full list at each turn.

The moves (for myself and opponent) were choosen based on the genes, with some preference for FASTER, PORT, STARBOARD and FIRE.

I would never WAIT (but I could FIRE/MINE during my cooldowns resulting in the same behavior).

For the FIRE command the target was chosen based on the genes, with some preference for ship positions (including mine).

After each turn of the simulation, a big evaluation function was called to see if the new state was better (with a decay factor of ~0.8 for each turn in the future).

Some parameters I was checking were:

- my ships health, speed, cooldowns and distance to the center of the map

- opponent ships health

- a additional malus for ships on the border of the map

- ships distance to barrels * rum

- distance to future cannonballs

- bows distance to opponent ships sterns

- when there were no barrels left: distance to ship with highest health (being offensive if I am behind, or running away if I am ahead)

I also tried many things that did not work (or that I did wrong):

- try to guess were opponent would be in the future in order to shoot at these coordinates

- instead of the distance, use the “time to reach” by precomputing all the paths before the GA

- have a malus for all the potential coordinates on the map where the opponent could FIRE or MINE

- and many others that I forgot

During the last day I did not manage to improve the core functionalities, so I spent some time tuning my evalution function weights.

Overall this was a great contest, it was so fun fighting against pb4 and Agade all week long and improving my bot!

I was 107th overall, 4th in Python3/Python.

This is mostly written as advice to other Python programmers (obviously not the ones who beat me—I’d be really interested to know how other Python bots handled or avoided the heavy computations.)

Genetic Algorithm

I also implemented a genetic algorithm. Before, you dismiss that as too hard, the idea is quite simple (also see Magus’s Coders Strike Back Genetic Algorithm Tutorial.) :

- Make, say, 5 lists of 5 moves each (from WAIT, FASTER, SLOWER, PORT, STARBOARD) representing the next 5 moves

- One or two manually computed strategies (for example, code a quick and dirty navigation to the nearest barrel, ignoring mines and cannonballs)

- (After first turn) The best list of moves from your previous GA results (with the first move chopped of)

- The remaining moves are random

- Simulate those move lists for 5 turns

- This wasn’t easy, but any good strategy involves simulation.

- See my notes on using NumPy to speed up this part

- Score the runs using an evaluation function

- Start with a simple evaluation function, like the net change in rum over the simulation, and then get more nuanced as your strategy improves.

- Choose the best (fittest) of your 5 trials and randomly select modifications to those to simulate.

- Repeat 2–5 until your run out of time (give yourself a heavy buffer, like 10 or 15 ms.

If my GA returned WAIT (or SLOWER/FASTER when my speed was 0/2) then I fired a cannonball or laid a mine. I found this worked really well at avoiding cannonball and mine damage and collecting barrels. This basic approach got me to gold. I spent the remaining time playing around with my evaluation function and general strategy. I unfortunately, couldn’t get to legend this time.

NumPy (made my Python simulation much faster)

I knew from my Coders Strike Back genetic algorithm that pure Python was going to be slow. So instead, I used NumPy to do the simulation part inside Python. The idea is that NumPy does parallel vector calculations fast. For example, I had NumPy arrays ship_q, ship_r, d_ship_q, d_ship_r, and speed, each of dimensions

(number of trials in a batch) x (number of ships on the playing field)

Here _q and _r refer to the axial coordinate system. My simulation included code such as

ship_q[speed > 0] += d_ship_q[speed > 0] ship_r[speed > 0] += d_ship_r[speed > 0]

I was usually able to simulate 10-15 batches per turn (where a batch was 5 trials of 5-turns each simulating every ship on the board simultaneously including all explosions, collisions, etc). I was surprised at how readable and natural the NumPy code was (at least before some optimizations). It wasn’t all fun and games (pun?) however. It took some work to handle collisions since I had to compare all ships to all other ships.

Import precomputed structures with pickle > zlib > b64:

I don’t know if this ultimately helped me, but I precomputed a table of fastest routes to get to any point starting at a certain speed and orientation. Computing the table took a long time (especially when coded inefficiently  ), so I computed it on my laptop and then used these three modules/functions to convert the table to plain text which code be pasted into my CodinGame code:

), so I computed it on my laptop and then used these three modules/functions to convert the table to plain text which code be pasted into my CodinGame code:

-

pickle.dumps- Encode any Python structure into a bytes object -

zlib.compress- Compress bytes object to a smaller bytes object -

b64.b64encode- Convert bytes object to plain text (this is 2-3x shorter than the string representation of the bytes object)

Once pasted in my .py file, I decoded with

pickle.loads(zlib.decompress(b64.b64decode(string_repr_of_object)))

Of course, to avoid running up against the character limitation of CodinGames, I found it helpful to not encode the table itself, but a more compact representation of it, for example a list of key-value pairs (or better yet a NumPy array) which can then be converted back into a table. (Actually, I did a number of other things to shrink this table. I took symmetries into account and only stored next moves.)

Summary

This was a hard puzzle and I was surprised how much strategy mattered. It doesn’t matter if you take more barrels faster, unless you get the last barrel, or can trap and destroy the opponent, or sacrifice your own ships, etc. It was especially complicated with the fact that a dead ship produces a barrel. I think due to the heavy computations (to avoid cannonballs if nothing else) one would have trouble getting first place without using C/C++. However, Python + NumPy did respectably, and I feel my not getting to legend was more due to a lack of good end game strategy than a lack of speed.

Feedback about the challenge

Pros:

-The referee code was super useful. This is a must have from now on. There are so many little things that the only way to have it right is by having it.

-Fast matches, 1v1 symmetric that are the most fair.

-50ms is good for fast matches…

-2 full weeks are nice for many people.

Cons:

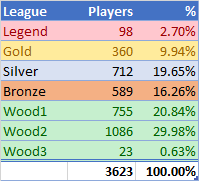

-Wood leagues have more than 50% of players.

I think this is frustrating for these players. The real challenge should start at bronze and not wood. I now there was the twitch video, but is not heavily promoted. So to avoid player frustation, reaching bronze should be easier.

-Crazy hard legend bot, only 13 players passed at first batch, so it was like a legend 15th bot. This makes a lot of good players can’t pass from gold, when they deserve it. This was another BIG point of frustation, for me it’s a no-no.

-Ended on monday at like 3-5am in America, it’s a bad time.

-Bad timeouts at sunday? Suddenly my bot started losing a lot due to timeouts, I changed nothing.

EDIT: -Being 335th/3624 on this challenge gives more points that being 1st/1669 on a low played challenge like Codebusters. Now tell me that being 1st on CB is easier than 335th on Pirates. This is a BIG problem for correct rankings. Scoring isn’t fair for low played challenges.

About my bot

Final rank 40th legend.

I worked on this bot from monday to sunday, about 60hrs of work and 2200+ lines of code (I’m a bad, slow coder).

Most of the time was for polishing the simulator. Even with the referee code, converting it to another language isn’t trivial. Java manages List of objects as references, whereas a C++ vector is a hard copy. This makes me waste 1 day on searching why collisions got on an endless loop. Another thing was the difference between double values on both languages. The function angle() gives different results on C++, that leads to a different moveTo() outcome.

I precalculated a lot of things on first turn, mainly distance() and angle(), that are the most expensive. Both were a 2D array, like dist[pointA][pointB]. I coded coords to index with (((y+2)<<5)+x), the +2 was to avoid out of bounds on the array due to negative positions on bow() or stern() I have a bigger array than the map due to the index wasting space with the <<5. I also coded neighbours on an array, neigh[point][orientation].

Once I had a simulator I started testing it with my own bot as enemy. As I know both movements, I can apply them to both simulations and the resulting GameState update must match with the next turn on the game. I had problem with that due to mines, because they aren’t seen at more than dist = 5 (another day wasted).

All this took me +40hrs unfortunately, so I needed to rush the AI.

My AI is an almost pure Genetic Algorithm (GA) for move actions, WAIT and MINE with this genoma:

class Genoma { //Only myShipCount used for GA, not always MAX_SHIPS. MAX_DEPTH was 5 Action action[MAX_SHIPS][MAX_DEPTH];

, with FIRE being used manually when I have a WAIT as an action for one ship. Monte Carlo also worked fine, but on my final submit it was a GA.

WAIT is like free time to do shootings, so I manually wrote some basic targeting heuristics to shoot at future position of the enemy, I first tried to replicate the moveTo actions for enemies, guessing they’ll target the nearest rum barrel. But it didn’t work very well with speed=2 ships, so in that case I try to shoot a barrels near the enemy and farther from my ships. A late addition was the manual sacrifice of ships, ships drop rum when killed (up to 30) so I checked if I was losing (less health than the enemy), in that case the ship with the least rum shoot itself, and mark its id as sacrificied. This way I don’t do double shooting or anything.

About the fitness function, I have two stages, with or without barrels. With barrels I priorize speed, health and rum captures, and penalize distance to nearest rum barrel. That makes ships goes naturally to the nearest barrel. I also gives bonus to my ship with the most rum. For the mine placement I didn’t have time to do something good so I just penalize a lot if the ship has mineCooldown, that makes ships only place mine if they think it will hit an enemy. For enemy ships I penalize this health.

Once there are no barrels I keep all the above but added more scores. I go to cat & mouse game, If I’m winning OR the total health of my ships are greater than enemy’s total health, I run away. I score positively speed, distance between enemy ships and my ships, and penalize distance between my ships, That makes my ships tries to stay near, but away from the enemy. Then I have the right conditions to sacrifice the ship with least life, and buff the bigger.

If I’m losing, then I penalize distance between enemy ships and mine, just that.

I have many flaws, specially with FIRE, I target so badly that I even hit myself. I don’t focus on fire a single enemy too.

I don’t trap ships, bad mines, etc etc.

But I’m happy with the results, the GA made some nice moves. My whole AI doesn’t have a real pathfinding because map is simple, so it’s done by the GA. And that means they can run into a mine, or take hits if with that the AI thinks it’s a better move.

If you see from frame 142 in that replay https://www.codingame.com/replay/212958220 you’ll see how one of my ships just go for one mine to die. That’s not intended by me or hardcoded, but the GA thinks it’s better to “sacrifice” a ship and buff the biggest ship. The other ship at turn 156 did the same, but this was enough to win the game.

And my ships also got hit on purpose to die (again without a manual sacrifice), like on this https://www.codingame.com/replay/212958005 turns 172/174.

GA (or even Monte Carlo) can do nice stuff, stupid moves that ends well. This is hardly done with pure heuristics, because it’s not easy to do. But you need an almost perfect simulator, or things can go terribly wrong.

I don’t simulate offline at all, neither do fine tuning of coefficients. All was done on Notepad++, and testing only on CG IDE and sometimes CG spunk.

I recommend new players they learn about Monte Carlo and Genetic Algorithms, they are really interesting search algorithms. Codingame is about to release a new learning platform, and I’m pretty sure there will be a lot of nice courses about these subjects. E.g. a fast hands-on for Monte Carlo I wrote: https://tech.io/playgrounds/4003e618997c561e705af1ade9cfca4b432/monte-carlo-method-in-7-minutes (beta version, can change in the future). Stay tuned

60th in legend.

Before the contest started my ambition was to make it to legend in the laziest way possible. Early in the contest I made the decision to use heuristics + some BFS instead of going through the hassle of making a simulator. In hindsight that was wrong, and I should have gone for the simulator path.

Heuristics easily got me to gold, but it seemed almost impossible to get past the gold boss. A major problem with heuristics is that you easily end up with tons of if/else statements that in the end become a monster to tune. First when I systematically went through all the different weights and cases I got something that made it to legend, late at Sunday night.

Basic algorithm when there are still barrels left:

- calculate distances (in nr of moves) from barrels to all ships (also enemies) using a BFS search that avoids mines

- assign each ship a barrel using a matching algorithm

- for each ship: try each action (left, right, wait, slower, faster) and check collisions with mines/cannonballs/other ships (done individually, which is sometimes results in a completely different outcome than in the engine), also from there look ahead 1 move to check for unavoidable collisions with mines and cannonballs.

- wait to take a barrel if we are closest and the resulting ship rum would be >100. In only did this when there were not many barrels left, had no time to experiment.

- fire at barrels that are closest to the enemy, or at the enemy itself

This was a really interesting game with surprising depth. As always the graphics looked great, although the ships looked more like fishes to me:). I had a lot of fun, thanks a lot to the organizers!

103rd, 1st in Scala

Thx CG, I had a great time working on this game. I want to also thx my wife for her support.

I worked more than 30 hours on this game.

This is the second time I developed an AI with the CodinGame-Scala-Kit and this time I integrated JMH for micro benchmarking.

Functional Reactive Bot Programming

In a functional way, this is the hard constraint I made on my coding style. Both the local arena and player logic is developed with immutable data structures and function composition.

Performance

To optimize the simulation performance, I used JMH for micro-benchmarking (example here) and VisualVM for profiling. Everytime I manage to reduce the simulation latency, I climb in the ranking without modifying any game logic. That’s magic! It seems that the entire job is to optimize!.

Meta-Heuristic

What?! You are talking about Scala Performance before those C++ guys. Fortunately, the game is not all about performance. For those who want to learn to Meta-heuristic, I highly recommend the book Essentials of Metaheuristics. I used an Evolutional algo from this book MuPlusLambda.

For the quality function, I want to give credit to @Manwe who shared a hint on the ship-to-barrel distance function during Murex’s CoderHub. Instead of taking into account only the physical distance, he advised including the orientation and speed factors. This is really helpful. I also met @Agade in the Hub and I appreciate a lot his great tips. Join a Hub if you can next time. In Paris, you can find us in Murex.

Streaming

During the contest, I had the chance to be hosted by CodinGame for a one-hour streaming (video here). I would make it again and make it better. If you want to discuss Functional Reactive Bot programming in Scala, please join me next time.

Thanks for reading.

TrueLaurel

Nice game, I was really attracted to it. But lack of good knowledge and experience moved me far away from first hundreds :).

One thing I’d like to mention is - IMHO it’s not correct to provide source codes.

Yes, that way someone can notice bugs and they will be fixed.

But at the same time I think game is more challenging if you have it like a black box and have to find out some subtle details\circumstances by yourself experimenting.

Just my thoughts, keep on great work!

Ended up on the 25th place.

Naive bot

Since I knew I will not have much time for this contest, I decided to kick-start the competition with the Python bot to get early into the league which had the complete rule set.

I implemented following naive heuristic AI:

- if there’s enemy ship within 5 cells from where I am, extrapolate her trajectory and shoot there

- if there’s no enemy around, move to the closest barrel

- else pursue closest enemy ship

I did not even bother working in hex coordinates, used only Cartesian.

Cannon cooldown was resolved by firing only at odd turns.

That was enough to get me to the Bronze League.

Simulator

Then I started writing the game simulator; and having the referee source code was super helpful (but one should have watched for bugfixes there).

Porting the referee from Java to C++ was a bit painful and I second @anon41962965 that the object design could have been better (especially the collision part which is overly complicated).

Scoring function

My scoring function appeared very simple yet super efficient, I came up with it very early and most of the consecutive improvements to it were rather non-improving

So I was maximizing the difference between my and enemy’s score, which was comprised of:

- big bonus for every ship alive

- small bonus for total health across all friendly ships

- a bonus for total speed

- a penalty for bigger distance to the closest barrel

- a penalty for relative angle to the closest barrel

- big penalty for being stuck at the border of the map

Lastly, when all the barrels were collected, like most of the people here I penalized for staying closer to an enemy when I was winning, and vice-versa.

Search

Initially I wrote DFS with the depth of 2 moves (for worst case of 3x3 battle) - that made me competing on par with the middle of the Bronze. Then I started predicting enemy moves applying my naive AI during the simulation and implemented beam search, which propelled me into the top 50 and I never left it since then.

Smart things

…did not work well for me. I tried different advanced tactics, but they at best kept me with the same ranking:

- forcing close combat when ships stuck

- firing at mines that obstruct navigation

- firing at the last barrel when it’s reachable by the enemy

- sacrificing own ship to gain extra health

Probably I did something very wrong.

Performance

Even though I was writing full-fledged OOP with polymorhpic types, dynamic memory and standard library, performance was not an issue. With the use of optimizer #pragmas I’ve been able to simulate about 14k moves per turn which in my opinion was more than enough for such a dynamic game.

Tools

I relied heavily on my unit tests (30 in total, covered mostly the scoring function and the search), which helped a lot to avoid regression.

I used git flow to experiment with improvements on dedicated branches and discard them freely if they did not bring desired result.

CG Spunk was my friend to estimate new bot versus real players before doing timely submission.

Finally I used own-crafted small Python script to compose all the source & header files into single submission unit.

Small feedback before a more appropriate post-mortem :

I disagree with this comment by @anon41962965 . The referee was super useful to understand the intricacies of movement, and easily readable.

Sure, the data structures are not appropriate for high-performance simulations, but :

- The referee code has absolutely no performance requirements, it’s only run once per turn

- In the case of this contest, fast data structures would make for less readable code

- This leaves some margin of improvement for the players who wish to implement a higher performance code.

I only have positive feedback with regards to how the rules & referee were handled during this contest. Thank you

Okey, explain a little bit more my point.

Of course it was useful, and it was nice to have it. And it is not 100% evil, it just can be better.

Read it was not so easy, also as port it, in rules it was explanations, how turn processed, step by step. But when I read a code, I saw that on some steps there is performed calculations for further steps. Like in “moveShips” it was storing some information for rotate step. Since when collisions appears, ship move was reverted back, also as this “rotate” information probably must be changed. So, it looks like there is no rotation step performed yet, but already some logic involved. And it is lovely place for bugs.

This came me to thinking, that right idea was not try to understand how it works, but just port it “as is” and consider wrong work of this code as feature, but not a bug. Like latest discovered for me - I can’t place mine directly to another ship. What is an explanations for this? I don’t check it in details, but I’m fear, that such “mine” plant ignored by referee, but “mine cooldown” was changed in this case.

The question is about people who use heuristic way to solve problem. Changes in referee can have big impact on such strategies and follow changes in referee much more harder in this case.

Same as strange (on my mind) damage calculations. I don’t get it, really. Just make a quick look at it and decide implement only easiest case and easiest calculations. In rules says - “damage performed”… And by code I saw that this damage logic spread in a lot of places (especially I don’t get this trick with zero damage, why it is needed, and why it is implemented so complex).

Anyway, as I said, it is good enough, and of course nice to have it. Definitely it is good improvement for contest.

I’m pretty sure all the 0 damage code was there to show explosions in the replay viewer.

As a reminder, before Gitc, the referee code was not public. Everything was the same (bugs in referee, bugfix later during the contest, hidden rules/behavior …).

Now we have the referee code. Everything is the same. But we can check it by ourself.

We don’t ask Codingame to make a perfectly readable referee. And we don’t want to. At the beginning, the asked feature was to have the referee code published without any additional works from codingame. If they have to spend time on the referee to make it readable, i won’t complain, but it is not what we asked.

And yes, the 0 damage was here for the viewer data. You should simply ask me on the chat, i could anwser you on that

Ended 35th with a Genetic Algorithm, implementing the same kind of things as previously mentioned (GA opponent, then myself, evaluate health, distance, nb of ships, …).

I was able to do around 100k simulations/turn, but that wasn’t that useful. I guess it was not that good to find the best action against only one possible move of your opponent.

A little thing I haven’t seen yet : My FIRE gen was neither “FIRE X Y” nor “FIRE TARGET”, but just “FIRE”. The program had to find the best possible target by itself (closest, …). A bit

of heuristic in the GA that I think leads to a smaller and less random search space. Don’t know if it is a good idea, I just saw that I missed as often as the others

+/- :

- The game was really nice : perfect difficulty and fun. I especially liked the hexagon cells which were very refreshing, and the ships placed on 3 cells which lead to a nice boat-like steering behaviour.

- Having the referee is nice, it helps understand the game, and players that want to simulate but don’t have enough time can use it.

- It was difficult to make a difference : a lot of IAs were able to dodge most of the cannonballs, and often the games were just ships running around, avoiding mines and waiting for the end. There doesn’t

seem to be any difference between ranks 30 & 100. It may have been avoided with more powerful weapons, like faster cannonballs or area damage ? - I found the graphics not as beautiful as in previous games, and they lacked clarity : for example it was hard to tell the difference between mines and barrels. And the score panel took a big part of the width.

Maybe the game window could be bigger too ?

Great contest, thanks CG.