Legend #37 Ruby

I should not read PMs before writing mine.

I felt like I just played the game where everybody hacked the game.

As last year, the contest dates were not the best for me, and I could only dive in during the last week.

On the first day I started with a really basic bot that reached silver:

- Phase 1: A first dive straight down and up, sometimes activating the light

- Phase 2: Next dives, one fish at a time using the radar (crab movements!)

The critical point was avoiding monsters. I spent too much time on it because I put off doing the right thing until the last moment: at first I did not want to dig into the referee.

On top of that, I first misread the statement about emergency mode and did not take into account that it can happen “during a turn (so not necessarily at the end of the turn)”. I lost quite a lot of time trying to add some margin to compensate for something I did not understand!

Test 1

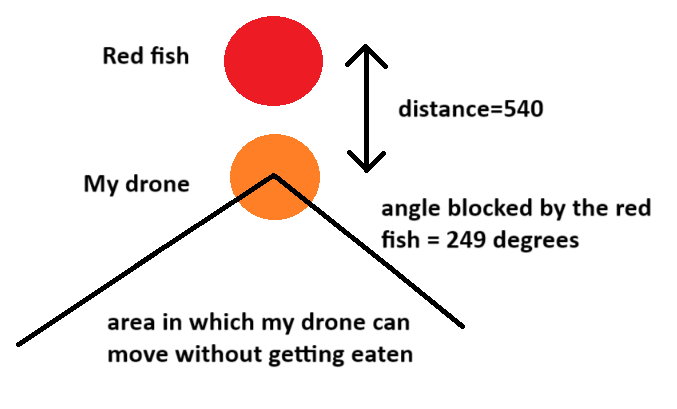

I finally grabbed the getCollision method from the referee and used it to test at most 2821 reachable points against the monsters concerned, keeping the safe point that minimized the distance to the destination.

OK, something was working, but it was not enough: with a lot of monsters, I was too often sandwiched between them.
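For readers who have not opened the referee, here is a minimal sketch of that kind of check: a moving-circle test over one turn, followed by the selection of the closest safe point. It is a reconstruction of the idea, not the referee's code. The names `collides?` and `best_point`, the array layouts and the 500-unit radius are assumptions to verify against the referee.

```ruby
# Hypothetical helper: does a drone moving by (dvx, dvy) this turn come within
# `radius` of a monster moving by (mvx, mvy)? Positions are turn-start values.
# The default radius of 500 is an assumption, not taken from the post.
def collides?(dx, dy, dvx, dvy, mx, my, mvx, mvy, radius = 500.0)
  # Work in the drone's frame: the monster moves with the relative velocity.
  px = mx - dx
  py = my - dy
  vx = mvx - dvx
  vy = mvy - dvy

  c = px * px + py * py - radius * radius
  return true if c <= 0 # already in range at the start of the turn

  a = vx * vx + vy * vy
  return false if a.zero? # no relative motion, the gap never closes

  b = 2.0 * (px * vx + py * vy)
  delta = b * b - 4.0 * a * c
  return false if delta.negative? # the paths never get close enough

  t = (-b - Math.sqrt(delta)) / (2.0 * a) # time of first contact, in turns
  t > 0.0 && t <= 1.0
end

# drone and target are [x, y]; each monster is [x, y, vx, vy] (assumed layout).
# Keeps the candidate end points that hit no monster, then takes the one
# closest to the target. Returns nil when no candidate is safe.
def best_point(drone, candidates, monsters, target)
  safe = candidates.reject do |(x, y)|
    monsters.any? { |m| collides?(drone[0], drone[1], x - drone[0], y - drone[1], *m) }
  end
  safe.min_by { |(x, y)| (x - target[0])**2 + (y - target[1])**2 }
end
```

Checking contact anywhere inside the turn, rather than only at the end position, is what covers the "during a turn" case from the statement.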

Test 2

The silver boss seemed impressively strong. Admittedly, with my basic bot as a base, mine had begun to avoid monsters, but without scanning fish.

While debugging monster avoidance, I took advantage of replay analysis to add some quick “if”s that take useful actions in particular cases.

For example, pushing fish out of the map during the dive of my first phase.

Test 3

I fixed my sandwich problem by looking a second turn ahead, assuming all the monsters would target me. That could certainly be improved, for instance by ignoring monsters out of my range, but I did not think of that at the time.

Because 2821 * 2821 was too big (at least in Ruby), I reduced my set of points to 976 for the next turn and added another 192 points for the second turn.

Test 4

Again, the first play in the IDE (after so many for debugging :)) resulted in a loss against the silver boss. But my drones stayed alive for the whole match, even with 6 monsters, youhou!

I needed to stop those crab movements. Like many of you, I went for the center of the rectangle. I did not think to update it from the previous turn, so there is a fresh one each turn.
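A minimal sketch of the rectangle idea, assuming a 10000 x 10000 map with y growing downward and radar blips given as "TL", "TR", "BL" or "BR" relative to each drone. The names `MAP`, `narrow` and `center` are hypothetical, and the rectangle is rebuilt from the full map every turn, as described above.

```ruby
# Assumed map bounds; a rectangle is [x_min, y_min, x_max, y_max].
MAP = [0, 0, 9999, 9999].freeze

# Shrink a rectangle using one radar blip reported by a drone at (dx, dy).
def narrow(rect, dx, dy, blip)
  x_min, y_min, x_max, y_max = rect
  if blip.end_with?("L")
    x_max = [x_max, dx].min # fish is to the left of the drone
  else
    x_min = [x_min, dx].max # fish is to the right of the drone
  end
  if blip.start_with?("T")
    y_max = [y_max, dy].min # fish is above the drone
  else
    y_min = [y_min, dy].max # fish is below the drone
  end
  [x_min, y_min, x_max, y_max]
end

# Center of the rectangle (integer division).
def center(rect)
  [(rect[0] + rect[2]) / 2, (rect[1] + rect[3]) / 2]
end

# One fish seen by two drones in the same turn.
rect = narrow(MAP, 3000, 4000, "BR")
rect = narrow(rect, 7000, 6000, "BL")
target = center(rect) # [5000, 7999]
```

Heading for the center instead of following the raw radar direction keeps the target stable while the drone moves, which is what removes the crab-like zigzag.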

Test 5

After some losses (but also some wins :)) against the boss and some new “if”s, I analyzed a game where one of my drones went into emergency mode: a face-to-face with a monster is lethal.

But I had seen that monster before, and the game was getting more and more interesting now that the mechanics were well understood… just f***, I went to the referee again and grabbed more methods: updateUglySpeeds, snapToUglyZone, updateUglyTarget, getClosestTo.

Now I had to detect:

- the light of the opponent => easy: battery < battery_prev

- the emergency of the opponent => not that hard. I thought the trick battery == battery_prev was enough, but it was one turn too late. I ended up checking the collision of the opponent's drone myself so as not to miss a turn.
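The two battery tricks can be sketched as below. The method names are hypothetical, and the battery cap of 30 (used to ignore a full battery that simply cannot recharge further) is an assumption that does not come from the post.

```ruby
# True when the opponent drone spent battery since the previous turn,
# which is read here as "it used its light".
def light_used?(battery_prev, battery)
  battery < battery_prev
end

# First idea for emergency detection: the battery did not move.
# As noted above, this signal arrives one turn late, so it can only
# confirm what a collision check on the opponent drone already predicted.
def emergency_suspected?(battery_prev, battery, max_battery = 30)
  battery == battery_prev && battery < max_battery
end
```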

The end

And that worked 90% of the time (the simulation could be wrong when a monster was missing, plus I did not use symmetry). I added more “if”s to release scans early and to scan on the way up in phase 1.

I submitted my second arena last Saturday morning, made it past the silver boss, and surprisingly reached 50th in gold. So no, that can’t be the end (but it should have been, as gold was my target after the strong silver boss).

Test 6

Now that monster avoidance was operational (most of the time), it was time to dive deeper into my “if”s. I added more actions in phase 2, and one in phase 1 that I think worked pretty well: stop surfacing, when no bonus point is at stake, in order to push fish out of the map.

Test 7

My bot was pretty consistent and reached the top 10 of the gold league each time. Between small bug fixes and improvements last Sunday evening, I searched for a modification that could be done in time, would give me a significant boost, and would not break my astonishing sequence of solid “if”s.

And I found it: bypassing monsters more quickly. I was minimizing the distance to the destination after the next turn, which caused my drones to first move right up in front of a monster and only then go around it, losing too many turns.

As I was already more or less looking at the second turn, I “just” had to minimize the distance after that turn instead.

To make it work without timing out, I drastically reduced the number of points tested: from 976 * 192 to 60 * 60.

I reached top 1 gold but with >1 difference.

The real end?

Almost: looking at some replays revealed that pushing fish out of the map no longer worked very well… what? Why?

=> Minimizing the second-turn distance should not be used if the target point is closer than the drone speed… pfiouuu

Top 1 again (above the boss during the run). I went to sleep, as nothing good would have come from staying up. The good surprise when I woke up: I had been lucky and got pushed into legend.
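A sketch of that selection rule, under stated assumptions: `plans` is a hypothetical hash mapping each safe first-turn point to the safe second-turn points reachable from it (both already collision-checked), and 600 is an assumed maximum drone move per turn.

```ruby
DRONE_SPEED = 600 # assumed maximum drone move per turn

def dist(a, b)
  Math.hypot(a[0] - b[0], a[1] - b[1])
end

# Returns the first-turn point to play, or nil when `plans` is empty.
def pick_first_move(drone, target, plans)
  if dist(drone, target) <= DRONE_SPEED
    # Target reachable this turn: judge the first move on its own.
    plans.keys.min_by { |p1| dist(p1, target) }
  else
    # Otherwise judge each first move by the best position it allows next turn.
    best = plans.min_by do |_p1, seconds|
      seconds.map { |p2| dist(p2, target) }.min || Float::INFINITY
    end
    best&.first
  end
end
```

With a far target, a first move that steps sideways but opens a way around the monster beats one that gets closer and then stalls. With a target within one move, the drone goes for it directly, which is what actions such as pushing fish out of the map need.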

Conclusion

My bot consists of two phases, with 8.5 and 4 actions respectively, in which monster avoidance runs to track and update monsters and to prevent emergencies. The light is turned on every two turns during some actions of phase 1 and when needed in phase 2.

It was built with 10 arena submissions, ~200-300 plays in the IDE, and replay analysis.

And it lacks a lot of features:

- does not use symmetry

- does not optimize rectangles

- does not track fish

- has no performance work, and times out sometimes

- does not take into account the opponent's score or the scoring system (in particular when deciding to surface)

- does not coordinate the two drones well, so both sometimes pick the same action

- was not tested against itself in local play

- does not remember the previous action, which in some cases makes for really fun replays

Bravo!

Congratulations to the winners. Reaching legend is one thing, competing with those guys is another.

Many thanks to Codingame

As always, a great contest. I really enjoyed the game. The stats on total scanned fish and drones in emergency were really cool; why not keep them in the multiplayer section?

I also noticed a change when scrolling the debug information panel (or was it already like that before?). The viewer plays the turn only when you reach the end of that turn's log: I really appreciated that change for debugging.

See you in winter?

(this is a reply to the post above, I clicked the wrong reply button)
