Hi all,

Despite being perfectly happy with my current #1 place at the top of the OOC leaderboard, I wanted to evaluate whether a different ranking system would provide the same results. I present below the results of my analysis.

The analysis is based on the same data that CG generated during the re-run. If a player had a lucky streak during the re-run, that player’s score should be similarly inflated in this alternative ranking. No CG servers were harmed in the process.

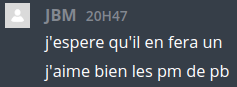

Observed winrates

The figure below shows the observed winrates during the re-run between pairs of players.

It is well known that CG picks opponents within a ±5 rank window, hence the data clusters along the diagonal.

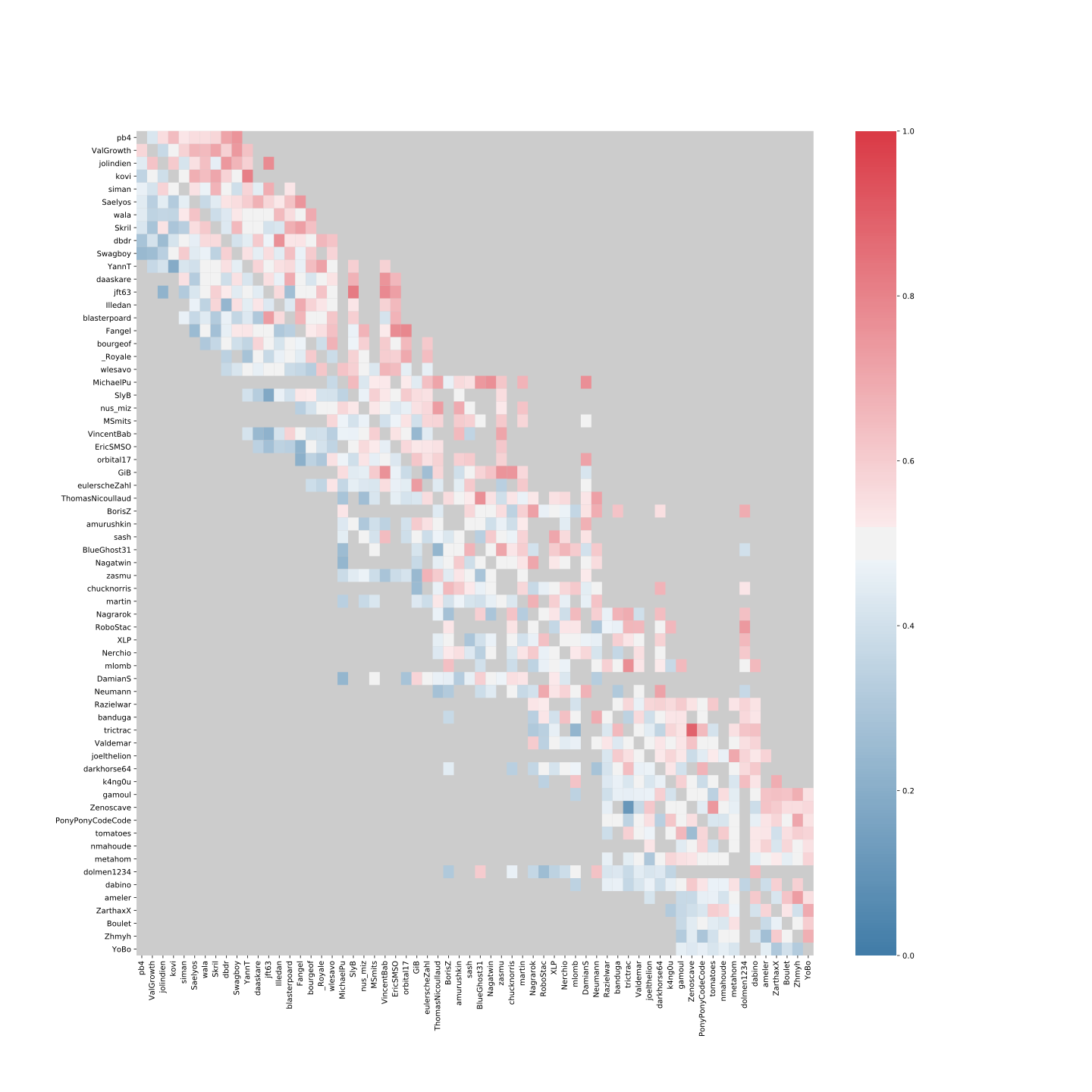

Expected winrates

I do not intend to go into the details of the method in this topic (PM me if interested!), but I have applied a technique which reconstructs a score for each player from the observed winrates shown above.

It is possible to derive an expected winrate between two players from these scores.
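For readers who want to experiment with the idea: one common way to turn pairwise winrates into per-player scores is a Bradley-Terry model. To be clear, this is an assumption chosen for illustration, not necessarily the technique used for the figures in this post. The input format (`wins[(i, j)]`) and the function names are hypothetical as well.

```python
import math

def expected_winrate(score_a, score_b):
    """Probability that A beats B under a logistic (Bradley-Terry) model."""
    return 1.0 / (1.0 + math.exp(score_b - score_a))

def fit_scores(wins, n_players, iterations=200):
    """Fit one score per player with the classic MM iteration.

    wins[(i, j)] is the number of games player i won against player j
    (hypothetical input format). Returns log-strengths.
    """
    strength = [1.0] * n_players
    for _ in range(iterations):
        updated = []
        for i in range(n_players):
            total_wins = sum(w for (a, _), w in wins.items() if a == i)
            denom = 0.0
            for j in range(n_players):
                if j == i:
                    continue
                games = wins.get((i, j), 0) + wins.get((j, i), 0)
                if games:
                    denom += games / (strength[i] + strength[j])
            # Floor at a tiny value so a winless player keeps a finite log-score.
            updated.append(max(total_wins / denom, 1e-9) if denom else strength[i])
        # Rescale so the strengths sum to 1; only the ratios matter.
        total = sum(updated)
        strength = [s / total for s in updated]
    return [math.log(s) for s in strength]
```

With two players where player 0 won 70 games out of 100, `fit_scores({(0, 1): 70, (1, 0): 30}, 2)` returns scores for which `expected_winrate` gives player 0 a 70 % chance, as one would hope. With more players, the fit has to compromise between all the pairings, which is where the expected and observed winrates start to differ.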

The figure below shows the expected winrate between all pairs of players within the Legend league.

One observation I find interesting from this figure is the four zones that are relatively clear cut.

- There is a homogeneous 4x4 square at the top left: the top 4 were very well matched.

- Then comes a second group of 20 people also very well matched down to wlesavo.

- Then comes a third group with 40 people, relatively homogeneous.

- And finally comes YoBo, in a league of his own (sorry).

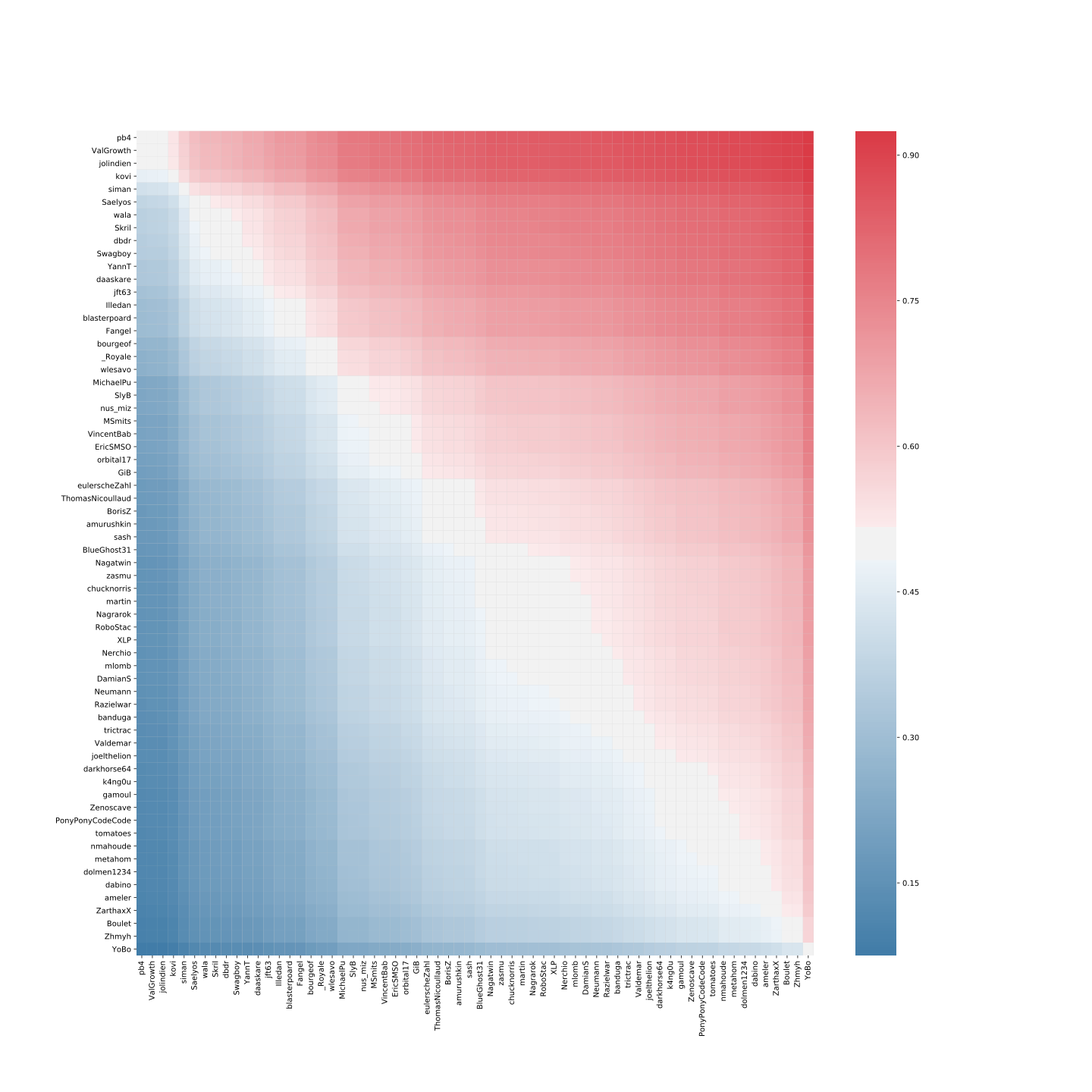

Upsets

So… who pulled you down the ranks? Who pushed you up?

Here is a list of the biggest upsets in decreasing order of importance.

trictrac won 88 % against Zenoscave, he was expected to win 53 %.

jolindien won 62 % against ValGrowth, he was expected to win 49 %.

jolindien won 41 % against siman, he was expected to win 57 %.

jft63 won 26 % against blasterpoard, he was expected to win 52 %.

eulerscheZahl won 33 % against zasmu, he was expected to win 54 %.

pb4 won 64 % against kovi, he was expected to win 53 %.

siman won 39 % against Swagboy, he was expected to win 57 %.

Zenoscave won 74 % against tomatoes, he was expected to win 50 %.

jolindien won 60 % against kovi, he was expected to win 52 %.

Neumann won 36 % against dolmen1234, he was expected to win 57 %.

ThomasNicoullaud won 76 % against BlueGhost31, he was expected to win 52 %.

pb4 won 42 % against ValGrowth, he was expected to win 50 %.

Saelyos won 37 % against wala, he was expected to win 51 %.

ameler won 72 % against Zhmyh, he was expected to win 53 %.

jolindien won 45 % against Skril, he was expected to win 63 %.

daaskare won 69 % against blasterpoard, he was expected to win 54 %.

mlomb won 77 % against trictrac, he was expected to win 52 %.

Fangel won 30 % against Illedan, he was expected to win 49 %.

bourgeof won 67 % against wlesavo, he was expected to win 51 %.

gamoul won 34 % against tomatoes, he was expected to win 50 %.

Skril won 40 % against jft63, he was expected to win 55 %.

kovi won 68 % against Saelyos, he was expected to win 59 %.

BorisZ won 34 % against chucknorris, he was expected to win 53 %.

trictrac won 35 % against darkhorse64, he was expected to win 52 %.

Skril won 65 % against Swagboy, he was expected to win 51 %.

Zhmyh won 68 % against YoBo, he was expected to win 56 %.

GiB won 26 % against eulerscheZahl, he was expected to win 52 %.

Fangel won 33 % against blasterpoard, he was expected to win 49 %.

sash won 33 % against BlueGhost31, he was expected to win 51 %.

Nagatwin won 70 % against Nagrarok, he was expected to win 50 %.
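A note on how such a list can be ordered: the raw gap between observed and expected winrate is not enough, because a large gap over a handful of games means less than a small gap over many games. The sketch below assumes a simple binomial z-score as the measure of "importance". That measure, the function names and the record layout are assumptions for illustration only, and the numbers in the usage note are made up, since the number of games per pairing is not listed in this post.

```python
import math

def upset_score(observed, expected, games):
    """Deviation of the observed winrate from the expected one,
    in standard deviations of a binomial with `games` trials."""
    std = math.sqrt(expected * (1.0 - expected) / games)
    return (observed - expected) / std

def rank_upsets(records):
    """Sort (player, opponent, observed, expected, games) records
    by decreasing absolute upset score."""
    return sorted(
        records,
        key=lambda r: abs(upset_score(r[2], r[3], r[4])),
        reverse=True,
    )
```

For example, with the made-up records `("A", "B", 0.88, 0.53, 10)` and `("C", "D", 0.62, 0.49, 100)`, the second one ranks first: a 13-point gap over 100 games is more surprising than a 35-point gap over 10 games.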

Or, with a visual presentation :

Thanks to @eulerscheZahl for providing the data to analyze

Ciao !

@JBM: it’s coming…