When I try to grow a spore at the first turn, it fails with an error message: ((23,1)) is not to the E of ((0,1)). Is it a bug?

Hello, this should now be fixed.

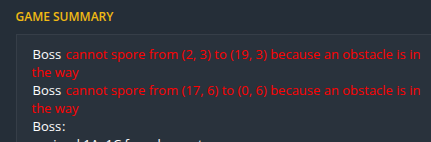

Hello, you cannot spore there because a wall is in the way. The error message is wrong, this is now fixed:

So, do someone know how to fix my problem ?

The game input occurs such that initially ,the input specifies all initial placement of objects. And later input (after your output) describes changes in the environment (like a cell which was blank earlier has been converted to a organ) If you want to avoid conversion of walls you will have to declare the container( set /list or whatever you use) which stores the walls before the while True: loop of game , so that the data that you initially obtained about the walls doesn’t get lost.

I really enjoyed this challenge—thank you to the CodinGame team for continuing to make it happen!

I finished 171st, but there’s nothing particularly interesting about my strategy.

My code consists of a mix of closest pathfinding functions combined with various heuristics. Each path has several characteristics that are used in the heuristics (e.g., eating a protein, being able to harvest a protein, posing a danger to the enemy, etc.).

The strategy is as follows: first, perform defensive moves; then, execute attack moves if there are “enough resources/income”; otherwise, focus on farming moves.

GG to @wala for the usual top 1 spot with Java (it was close, @Zylo)!

I finished 99th. Thanks a lot for letting me finish in the top 100 ![]()

Here is my PM : My post mortem for the codingame Winter Challenge 2024 | Yann Moisan

Hi all,

I ended up Legend #32. Felt good to get to Legend after several contests being unable to do so.

I want to thank to Codingame for hosting another great AI programming contest. I’ve been playing them for lots of years now and still eagerly await for them and really enjoy playing them ![]() . This contest was particularly interesting and well crafted, with good possibilities to very different strategies, be it heuristics, simulations or NN. Congratulations to the creators and keep this contests coming !

. This contest was particularly interesting and well crafted, with good possibilities to very different strategies, be it heuristics, simulations or NN. Congratulations to the creators and keep this contests coming !

Congratulations to reCurse for winning and MSz and aCat for completing the top 3. Your bots are amazing, I really enjoyed watching some games by them. I would have enjoyed it more if they would have been more time to watch them though ![]() . I still don’t quite understand the need to only join in the last hours of the contest, but still…

. I still don’t quite understand the need to only join in the last hours of the contest, but still…

I originally tried using Smitsimaxi (Decoupled UCT) but couldn’t make it work since the branching factor is so high and the potential actions depend on the state of the game. I ended up implementing a beam search of width 5 and depth 3 for each organism. I don’t simulate the opponent.

Here are some details of my implementation:

Actions

- I divide the actions into “walk”, “spore” and “non-walk”.

- Walk actions are non-spore actions to get closer to some protein or to the rival. They usually would be basic, but could be tentacles, harvesters or sporers depending on available proteins.

- Spore actions could be either just spore from an existing sporer, or a grow_sporer + spore combo which uses 2 turns. Non-walk actions are either harvesters that harvest some protein or tentacles that attack a cell with dist to rival <= 2.

Pruning

- I simulate every available non-walk action, but only simulate the most promising 2 “walk-towards-protein” actions, 2 “walk-towards-rival” actions, 2 “spore-towards-protein” actions

and 2 “spore-towards-rival” actions. So at each node expansion of the beam search I simulate 8 + N actions. - The heuristics for determining the most promising nodes is based on distance to rival and distance to most needed protein sources.

Eval

- 1 point per organ

- 0.5 points per unoccupied cell closer to player (voronoi style Voronoi Diagram - GeeksforGeeks)

- 0.35 points per protein

- Points per harvester. They decrease incrementally as the number of harvesters of the same type increases, following a logarithmic decay function.

- Points for being close to protein sources.

- Points for attacking cells close to the opponent.

- Big penalty for not having enough harvesters to allow me to grow any kind of organ (no A and no any pair of B/C/D).

- Penalty for distance to closest protein sources that I have no harvesters of.

Note: Points for harvesters and being close to resources decay over time and are lower the more proteins of that type I have.

Data structures and algorithms for efficiency

- Every cell knows if it’s being harvested by any player, attacked by a tentacle of any player, the distance to the closest cell from both players, the neighbors in every direction.

- At the beginning of each turn I run an initial bfs from every cell to calculate distances between any two cells, the predecessors in the paths of any 2 cells and the heuristics

score for closeness of proteins and opponent organs used in the action pruning. - For each organism I keep track of the frontier (the unclaimed cells dist 1 from any organ of the organism).

- I create every object I will need in the first turn. I have an action pool and a beam node pool.

- I use only 1 game state instance to run simulations.

- I keep track of the modified cells in each simulation to rollback more efficiently.

Thanks again and see you all on the next one !

thanks for the detailed PM, can you indicate roughly how many turns you managed simulate in the 50ms time limit?

#7 Legend

I started by messing around with deep searches, trying to figure out how deep could I go if I got creative with pruning. The result of that was a beam search that could on the first turn easily get to depth 20 with width ~3000 on maps with a lot of walls (often under 200ms), and at least figured out where I should start putting my roots on the more open maps (~depth 8). The heuristics I used to prune were:

- limit to 4 organisms; after growing the 4th one, the bot stops following the beam

- every sporer has to make a root immediately

- limit sporer and root options to 2-3 most promising ones per organism

- after growing a basic organ, the organism has to build something on the newly-neighbouring squares next turn

- use only basic organs to move, and retroactivaly change them to other organs if I run out of A

- prune non-optimal sequences with harvesters (that lose resources compared to options that build harvesters sooner)

The nodes were evaluated based on the resources, the distances between the roots, and their position relative to the opponent’s first root.

The bot follows the results of the beam search until it meets with the opponent, possibly inserting other moves found by my other algorithm if they don’t interfere with the beam.

I wanted to keep looking at deep searches for 2 players with similar (or even more aggressive) pruning, but unfortunately I got sick a couple of days into the contest, so I gave up on time-consuming apporaches and switched to a “simple” depth 1 bot. I chose to use genetic algorithm with 2 populations - 1 population per player, 1 gene per organism, evaluation by simulating them against each other and rewarding destroying/threatening organs and quantity of resources (and distance to the ones not being harvested). I thought this algorithm was just a meme (I saw it in another postmortem), but surprisingly it could have worked somewhat well. Its biggest problem was that in rock-paper-scissors situations, which were rather common, it kept switching between the options every couple of generations and often chose some of the weirder ones - which I fixed by having one last “generation” of all the unique winners of the previous previous generations.

The vast majority of my bot’s losses were due to the beam search coming up with a specific solution, which the genetic algorithm forgot as soon as it took over, not grabbing space early on (because I would need to tune my beam search’s eval based on the characteristics of the map), and my genetic search’s eval being quite bad at evaluating fights taking more than 1 turn.

My beam search is only depth 3, so I simulate only 3 turns ahead. My eval function is costly because I run a bfs, so I only perform between 500 and 1000 node evaluations per turn. I probably should have followed blasterpoard approach with a simpler eval function, more depth/width, and different algorithm for figths, but I used the same algorithm for everything.

Thanks everyone for this contest!

Legend 39th here with JS and here is my PM of my bruteforce heuristic AI Winter Challenge 2024 - Cellularena - Apofils PostMortem - Legend 39h - JavaScript · GitHub.

I hope it can help Gold players to have new ideas or to improve their bot to get to Legend!

GL HF!

Thanks for the PM! Quick question: in your experience, is the object pool much faster compared to creating objects on the stack (assuming the objects are of reasonable size, of course)?

Object creation is time consumign yes, so having a pool improves performance. I never benchmarked by how much, but it’s significant. You can use the first 1s turn to create every object you will need, and then reutilize them.

Hello everyone !

I finished as Legend #48 (in JavaScript) ! I joined the competition for fun, with no particular expectations, so I’m thrilled to have made it this far. Competing against my brother my brother was a big motivator, pushing me to keep improving right up until the final days ^^

Big thanks to Codingame for organizing this challenge, and congrats to the winners!

I wasn’t keen on using genetic algorithms or Monte Carlo approaches because of the huge number of parameters, interactions and moves to evaluate each turn. Instead, I focused on basic one-turn strategies, which I refined progressively until the final day.

In the first few days, my bot was overly aggressive and quickly ran out of resources, especially on maps with limited proteins. So I improved its protein-harvesting strategies and added defensive actions, like placing tentacles at bottlenecks on the map.

Taking actions

One of my biggest challenges was figuring out how to prioritize actions across multiple organisms while ensuring distant organisms didn’t take resources needed by those engaged in combat.

I end up breaking up the strategy whole in small parts and optimize them separately. Each turn, I find some candidates organisms for each type of action, then go to the next action with available organisms and so on.

- Close Defense

- Two-Cell Defense

- Identify Bottlenecks – Secure progression using tentacles.

- Spawn New Root

- Harvest Proteins

- Create New Sporer

- Find Missing Proteins – Pathfind for necessary proteins.

- Cherry-Pick Proteins

- Expand – Maximize cells reached first.

- Consolidate – Expand to free cells if no other organisms have valid moves.

- Finish – Expand to all free cells, even if proteins are harvested.

- Wait

Final Days

In the last day, I squashed some obvious bugs, ensured no two organisms targeted the same cell, and experimented with different action orders. For example: should I prioritize cherry-picking proteins or expanding first?

Introducing Cherry-Pick and Identify Bottlenecks boosted my bot into the top of the Gold League. I made it to Legend on the final night of the challenge!

@Apofils, thanks for your detailed PM! I’ll definitely give visualizer and Psyleague a try for the next challenge.

Bye

#5 Legend

- Find the best d-depth plan. The list of possible moves at each step is pruned to the most promising ones. Assume just one move per turn and the opponent does nothing. d==4 first turn, then later 1<=d<=3 (mostly d==1).

- With the plan(s) from the first step locked in place, keep repeating the first step, greedily adding moves/plans.

Most of the logic was in a complex board evaluation function that involved ~20 different factors. Some which I found most important:

a) # of squares controlled (positive factor): each square is proportionally owned by its distance to each player (~1/distance^3). Then the overall weight of this factor is adjusted depending on the map and income/resources. More closed off maps → higher weight. More income/resources → higher weight.

b) Vulnerabilities left behind (negative factor). I had 4 different types of vulnerabilities. For the first 3, eval is done after the moves are processed:

- d1-defendable (a square the opponent could use to attack next turn but it’s “blockable”)

- d1-undefendable (unblockable version)

- d2 (one square away from the above)

- d1-defendable-now (this uses the start of turn board position. squares that both players could build on/attack from right now)

There was lots of fine-tuning, where the relative value of everything is highly dependent on other variables. From parameter sweeps, my program found the C resource to be by far the most important one, which is interesting.

I found the game to have a lot of strategic depth with a very high skill ceiling. Reminds me of go, with the local and global characteristics and large branching factor. Congrats to reCurse and the rest of the top 3 for creating such impressive entries and showing what high level play can look like in this game.

#16 Legend

I described my approach here : CG's Winter Challenge 2024 - Neumann PM · GitHub

Congratz to the winners and thanks CG <3

#14 legend

After skipping some number of contests in almost 4 years, I finally got simultaneously free time and game type that I adore: cells, simple rules, no physics. And the game delivered, I enjoyed it very much.

In short, my bot generates some positions after 1 move, estimates them with an estimating function and picks the best several times.

In long:

-

Generate all possible not pruned single organism actions for both players. List of some pruned actions: tentacle to wall, tentacle to parent if some other non-wall neighboring cell exists, harvester at nothing if neighboring protein cell exists, … Total number of actions can go 800+ in heavy situations.

-

Estimate all generated actions against the waiting opponent, sort by estimate.

-

Pick top N my best actions and top N enemy’s best actions, generate and estimate all NxN positions when both actions were taken.

- Do it not row by row, but in a specific order such that if we reach a timeout then we will be left with a smaller n x n or (n+1) x n set of payoffs of top actions.

-

Perceive evaluations as payoffs for normal form game NxN (which it is actually) and solve it with some algorithm for normal form games (I just copy pasted my implementation of CFR+ algorithm from Xmasrush 6 years ago). As my estimating function is not perfect, each player picks action with the highest probability instead of sampling according to probability which would be done in the theoretical perfect case.

-

Fixate 1 picked action for me and 1 picked action for the enemy. Now we will try to find the 2nd action for me (if I have the 2nd root). Exclude from the list of not pruned actions ones by used organism and run simulations and estimations as in stage 1. Add the best action to my move.

-

We have 2 picked actions for me and 1 action for the enemy. If we have 3rd root, find the best 3rd action similar to stage 5.

-

Output up to 3 found actions and waits for my other roots (some found actions could be waits too).

Also I have an endgame detection mechanism (when the field is partitioned in 2 parts or players stuck in a tentacle loop) which circumvents aforementioned stages and picks top not waiting actions if number of turns left <= number of cells left to fill. On the last day I saw even more advanced endgame strategy from top bots: sometimes killable opponent cell should not be killed immediately and instead should be killed in the last turn so the opponent will not have time to refill emptied space, but didn’t bother to implement it myself.

A few hacks with estimating function:

-

I will call a cell defenselessly killable by the opponent if some adjacent cell is not attacked by the player and attacked by the opponent (and the opponent has resources to build a tentacle). Also all descendants of such cells are defenselessly killable too. During my BFS such cells do not emit waves, so their territorial influence is not counted.

-

During BFS all sporers fire in all possible cells on the first wave (if the player has enough resources and some extra resources to not be left with 0 proteins of some type). This allowed my bot to build meaningful sporers without ever simulating 2 moves of real game state, but often it started to just build many sporers to stay with potential and never shoot. This forced me to add a bonus for 2+ roots in the estimating function which partially solved the problem.

-

To prevent dying on a map with scarce resources I devised the following. I will call a player sustainable if he harvests A protein or any 2 of B,C,D proteins. When estimating a non-sustainable state I roughly calculate “moves to sustain” - number of moves to get into a sustainable state, and subtract it with some weight from the estimate. With distances to the closest proteins (you will need 2nd closest for C and D also) already computed by BFS it is possible to calculate “moves to sustain” with a long sequence of ifs in O(1) time (without regard that actual grow action may decrease more than 1 closest distance). And if “moves to sustain” > number of possible organs from mined proteins, then the estimate is heavily punished.

My bot exceeded my expectations, I thought it would end at the bottom of legend, since I simulate only at depth 1 and can’t see optimal many-moves structures which could be seen with something like beam search. I was really surprised with the low number of simulations + estimations per turn that my bot can manage, it was around 1000-3000. I expected something closer to 100k since the size of the playing field is only around 200 cells. Probably I should stick to floodfill instead of bfs or dive into bits magic to store each horizontal line in bits of int32.

Codingame team, thanks again for a great contest!

#24 Legend

Postmortem:

Cellularena PM - thebitspud (Rank 24, Legend)

gg to everyone who participated!