I’d be interested in the interface you use between your C++ game simulation and python. Do you use batches for better performance? Any specific libraries/frameworks you use?

My approach is to have objects as small as possible, avoiding dynamic allocations as much as possible. So my object creation is quite fast. I guess I should benchmark both approaches…

Yes the interactions happen over 512 games at once as I mentioned in the PM. I use pybind11 for exposing C++ as a Python module.

you said you use one beam search per organism, are they independent? in what order do you process each root?

I sort them by proximity to rival organs to prioritise attack/deffense. Mainly attack really since I don’t simulate the rival…

#3 Legend, C++

Congratulations and Acknowledgments

Congratulations to @reCurse for a win, @MSz for the runner-up, and @marwar22 for holding the first place for a long time.

Separate congrats for top-performing representatives of our school: @MSz @marwar22 @gaha @Zylo

Contest timeline

The timeline for this contest is simply a cruelty. Too long, and during the holiday.

Humans (and cats) really deserve some break and not making their only regeneration time during the semester ruined by a coding marathon.

Each year, I wish I had the will to boycott the event, and each time I fail to do so.

Game

Excellent game design.

In terms of AI, it was, of course, awful: multiaction with a variable number of actions, simultaneous moves, huge branching factor, and requiring long-term planning; but yeah, it was challenging to deal with.

Approach

Search

A combination of beamsearch and greedy choice depends on the depth.

Greedy evaluates all generated actions, chooses best, applies it and back to generation, until no improvement or given action depth reached.

There are separate beamsearches for each turn depth, operating on an action-depth level.

Beamsearch uses global and local beams, I remembered the idea mentioned in our Hypersonic paper with DomiKo et al.

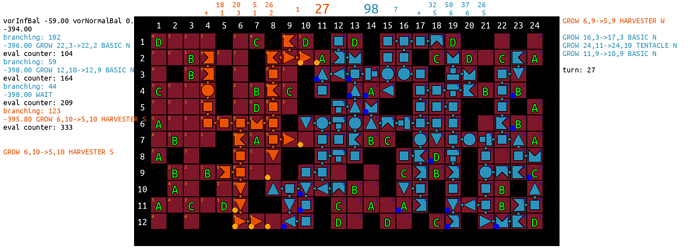

My max reachable depth is set to 7 - if I allow my bot to search deeper the results are worse. ![]()

Opponent prediction

-

Beamserach for depth 1

-

Greedy for larger depths

Evaluation

Classical hand-crafted evaluation, linear combination of features, symmetric (zero-sum).

Main features

-

organism size, bonus for the player with a larger organism

-

closer Voronoi cells, bonus for the player with a larger area

-

resources and income (values for first,

<=n, more). There is no decisive answer as to which resource was the most important; weights that worked for me seemed all around the place. -

threats: penalties when the opponent organism is near and I don’t have my cell guarded by a tentacle, large penalty when the spot is already guarded by the opponent tentacle

-

endgame fill: bonus Voronoi-based score after some predefined turn number

-

plus some other minor weirdo things

Scoring

Score at each depth was s = previous_score * γ + current_depth_score (γ<1), with additional depth-dependent weight for each level.

The score for the final choice is s/number_of_visits + α*initialState_score

So, my scoring formula is rather overcomplicated, but I couldn’t find anything simpler without the loss in performance.

Move generation

-

sporer only if a predefined number of cells before is empty; the opposite cell has to be wall/opponent

-

roots not adjacent to my cells, limit to 7 roots

-

harvesters only when targeting unharvested resource

-

tentacle only if a cell is close to the opponent

-

plus other rules if I cannot afford basic

Visualization

Python script producing images based on the agent’s output.

My PM:

I’ve been working on the critical objective border cell calculations and pathing as described in your Potential Improvements in your PM, for weeks before I even saw this post. Thanks for providing the seed. I ran it offline and determined that my paths to those objective(s) needed improvement. My CanReach calculation for a given bank on turn 0 is easy enough. Obviously, the cell in the center just needs a Tentacle to control >50% of the board and have enough proteins left to harvest/backfill. Example, a path to that cell only absorbing what is necessary is a distance of 19 (w/o sporing). Two distinct paths exist at dist of 16 w/o regard to absorbing at all. One of them is better with at least 1 spore, reducing dist to 11. The calculation given bank of 3,3,10,7 -1 tentacle -1 spore -1 root is dist=10. Which still appears unreachable. Adding back in the absorbs on the path of 3D 3B 3A gives 13 which is plenty. Then determining if backfill is possible with the remaining protein bank is possible with C at dist 1 and D at dist 1 w/ 2 harvesters. The combinations seem all highly complicated, but the generalized path to the bordercell that Guarantees victory exists. And doesn’t require any kind of full tree search for it. There is a scenario where C at 9,1 is harvested along the way, further reducing the backfill requirement. This gets worse when there is more than one critical border cell, but establishing that closest first approach appears the best. This breaks down completely when it can’t reach even the first one. My testing on other seeds with 2 unconnected bordercells seems to require some reevaluation because the enemy can choose to harvest, defend near the closest cell, and expand further into ‘your’ territory beyond the secondary. Any map with >1 connected bordercells still seem to completely benefit from this kind of turn 0 decision-making imo. So great job on your visualizer! I’d like to figure out how to do it correctly.

In my attempts with reinforcement learning, I found that the rewards need to be extremely meticulous and precise. However, genetic neural networks can circumvent this difficulty. Have you ever tried genetic neural networks? Do you think their performance can compare to RL?