I ranked 3rd, which is the best ranking I’ve ever had in a CodinGame challenge!

To be honest, this was one of my favourite contests (and I don’t say that only because of my very good ranking  ). The rules were simple and easy to understand, and the game was interesting! I think there were only two minor issues: maybe the game was too difficult to enter (even if it was definitely not a problem for me), and a lot of games would end up with a tie.

). The rules were simple and easy to understand, and the game was interesting! I think there were only two minor issues: maybe the game was too difficult to enter (even if it was definitely not a problem for me), and a lot of games would end up with a tie.

At the beginning of the contest, I first decided to use a negamax with a simple eval, because I thought it could work pretty well. I managed to go up to mid-gold with this negamax.

After this, I saw that MSmith was ranked 1st, and remembered reading his article about the Smitsimax, so I decided to implement this algorithm. I just had to copy-paste the implementation I use for Coders Strike Back, and tweaked it to make it compile. I was really surprised with the results I got, because it was better than my old AI on the first try. After a few bug fixes and optimisations, I was finally able to enter the Legend Ligue. Then, I finished to code the last features, correct the last bugs, optimize the code, and tadam! I ranked 3rd  .

.

My evaluation function was really simple: I only took into account the number of quests each player has completed.

My exploration was depth 4 (4 actions per player).

I thought I should have started to code earlier, because at the end of the contest I still had a lot of things to do: I didn’t have the time to check the correctness of my code, nor to change the exploration coefficient, I let it to sqrt(2). What’s more, my code wasn’t really fast: I was only able to call the exploration function about 10k times a turn.

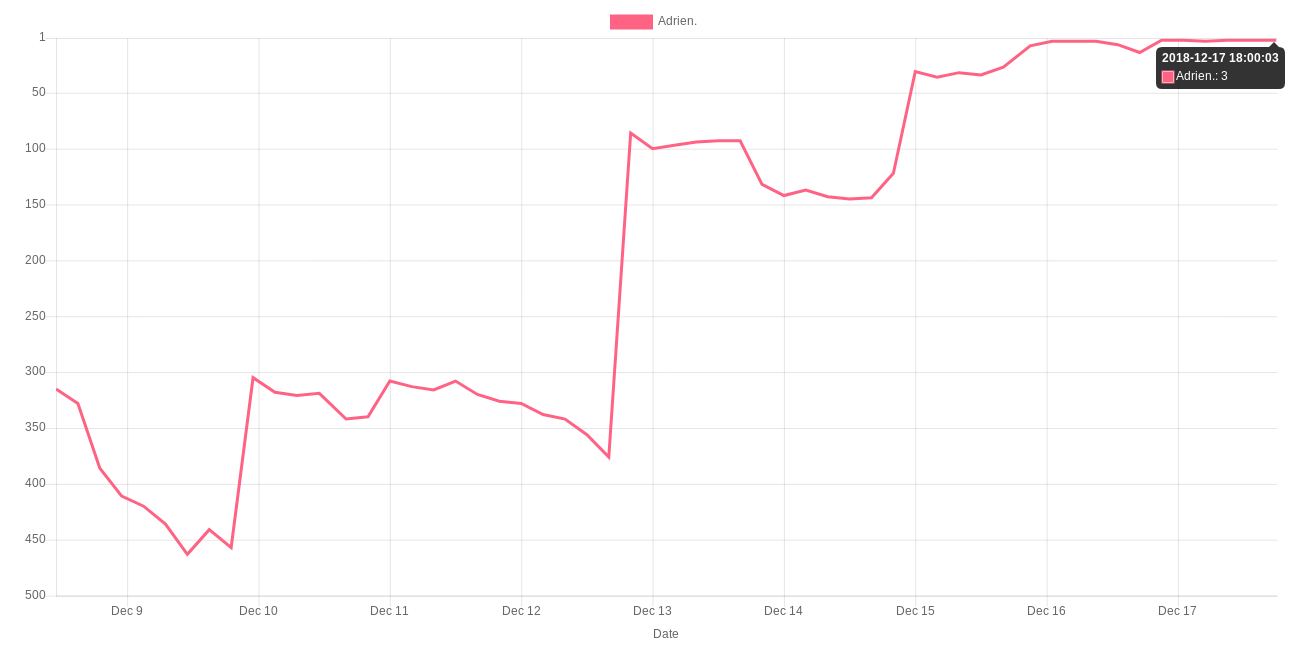

Here is the evolution of my ranking:

Merry christmas everyone!