Hello everyone, great contest!

I ended up 23rd with a genetic algorithm.

Metagame

Because of the huge number of rules in this game, I figured out very late what I think the “correct” metagame is.

Given that it’s quite hard to actually hit a cannonball or a mine, many games end up with ships running out of rum over time, which means whoever has the ship with the most rum has a great advantage.

With that in mind, I made up 3 eval functions for my GA:

- If there are still barrels around, pick up barrels

- If there are no more barrels and I have the ship with the most rum, sail in circles and try not to get hit

- If there are no more barrels and the other player has the ship with the most rum, focus all my energy on trying to hit this ship, and this ship only
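The three-way switch above can be sketched as follows. This is only an illustration in Python for readability (the actual bot was written in C); the names `Mode` and `pick_mode` and the tie-breaking rule are assumptions, not taken from the original code.

```python
from enum import Enum


class Mode(Enum):
    COLLECT = "pick up barrels"
    EVADE = "sail in circles and avoid damage"
    HUNT = "attack the enemy ship with the most rum"


def pick_mode(barrels_left, my_rum, enemy_rum):
    """Choose which eval function to use this turn.

    barrels_left: number of barrels still on the map
    my_rum, enemy_rum: rum values, one per living ship (each list non-empty)
    """
    if barrels_left > 0:
        return Mode.COLLECT
    # A tie is treated as "the enemy leads" here; that choice is arbitrary.
    if max(my_rum) > max(enemy_rum):
        return Mode.EVADE
    return Mode.HUNT
```

For example, `pick_mode(0, [40, 75], [60])` selects `Mode.EVADE`, because the richest ship on the map is mine.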

Maybe the third case deserves a few more details:

- All my ships had a reward for being in front of the bow of the target ship

- I added an extra reward if the speed of the target ship was zero, which encouraged my ships to collide with it

- I only allowed my ships to fire at the enemy ship with the most rum, and totally ignored the other ones

Simulation

I didn’t feel like implementing heuristic rules to avoid being hit by stuff in the game, so I re-implemented the referee in C. In the end I could run between 150k and 300k simulations of turns in ~40ms, which I believe is more than enough for this contest.

Some people wanted to have a look at the implementation, so here it is: link. (It compiles, and I believe for now it makes your ships shoot at themselves, but I don’t have the IDE to test it.)

Genetic Algorithm

Nothing outstanding to say here: I had a depth of 5 turns, a population of 50, 50% mutation and 50% crossover.

The evaluation of the fitness was the sum of the fitness for the next 5 turns, with decreasing weights over time.
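A minimal sketch of such a weighted sum, assuming geometric weights. The post does not give the actual weights, so the `decay` value of 0.9 and the function name are hypothetical.

```python
def discounted_fitness(turn_scores, decay=0.9):
    """Sum per-turn fitness values with geometrically decreasing weights.

    turn_scores[0] is the score after the first simulated turn.
    decay is an assumed weight ratio between consecutive turns.
    """
    return sum(score * decay ** t for t, score in enumerate(turn_scores))
```

With weights like these, a reward collected on turn 1 counts for more than the same reward collected on turn 5, so the search prefers plans that pay off early.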

At each turn I used the best solution from last turn as a starting point, gave myself 5ms to make my ships move, then 10ms for the enemy ships, then I dealt with cannonballs (see below), and used the remaining time (somewhere around 20ms) to make my ships move and fire again.
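The warm start and one time-boxed search phase could look roughly like this. It is a sketch under several assumptions: a single ship, movement commands only, elitism, and parents drawn from the top half of the population. None of these details are stated in the post, and all function names are hypothetical.

```python
import random
import time

ACTIONS = ["WAIT", "FASTER", "SLOWER", "PORT", "STARBOARD"]
DEPTH = 5       # turns simulated per plan
POP_SIZE = 50   # plans per generation


def warm_start(prev_best, rng):
    """Reuse last turn's plan: drop the move already played, pad with a random one."""
    return prev_best[1:] + [rng.choice(ACTIONS)]


def mutate(plan, rng):
    """Replace one randomly chosen move of the plan."""
    child = list(plan)
    child[rng.randrange(len(child))] = rng.choice(ACTIONS)
    return child


def crossover(a, b, rng):
    """Single-point crossover between two plans of equal length."""
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:]


def next_generation(population, fitness, rng):
    """Keep the best plan, then fill up half by mutation, half by crossover."""
    ranked = sorted(population, key=fitness, reverse=True)
    parents = ranked[: max(1, len(ranked) // 2)]
    children = [ranked[0]]  # elitism (an assumption, not from the post)
    while len(children) < POP_SIZE:
        if rng.random() < 0.5:
            children.append(mutate(rng.choice(parents), rng))
        else:
            children.append(crossover(rng.choice(parents), rng.choice(parents), rng))
    return children


def evolve(population, fitness, budget_s, rng):
    """Run generations until the time budget (in seconds) is spent."""
    deadline = time.perf_counter() + budget_s
    while time.perf_counter() < deadline:
        population = next_generation(population, fitness, rng)
    return max(population, key=fitness)
```

Each phase of the turn (5ms for my ships, 10ms for the enemy, the rest for the final pass) would then be one call to `evolve` with its own budget and fitness function.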

Firing

Ok, so, that’s the one idea I’m proud of in this contest. But, spoiler alert, it didn’t work at all. I think I ended up with the worst firing algorithm of the top 50.

Still, I’ll spend some time explaining it.

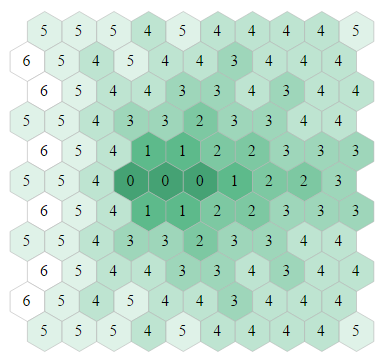

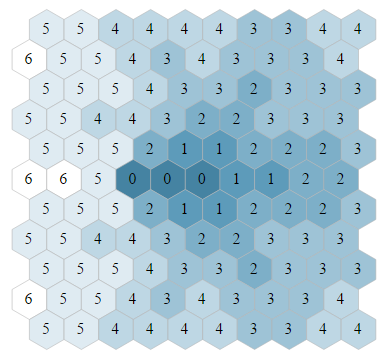

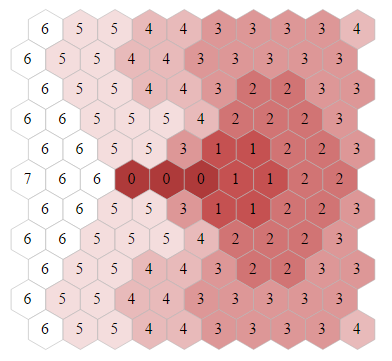

The starting point is simple: I wanted to quantify the gain of having a cannonball arrive at a given spot and turn, so that when I compute my fitness, I could take this gain into account when firing.

This way, one of my ships could change its course in order to make a better shot and maximize its fitness.

The other thing I wanted to achieve is to not shoot to hit, but shoot to minimize the fitness of the target. So a shot could force an enemy ship to slow down, get closer to a wall, etc. This way I could sort shots from a minor annoyance to a guaranteed hit.

In order to do that, I listed all pairs (coordinates, delay) that had a chance of hitting the enemy ship with the most rum, and for each of these, I ran a GA with only 3 generations to try and find an escape route for the enemy ship.

The difference between the original fitness and the fitness assuming a cannonball was fired at a given coordinate+delay gave me the gain of firing at that coordinate+delay.
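The gain computation can be sketched like this. The helper `best_escape_fitness` stands in for the short 3-generation GA that searches an escape route for the enemy; it and the other names here are hypothetical placeholders, not the original code.

```python
def best_shot(baseline_fitness, candidates, best_escape_fitness):
    """Rank candidate shots by how much they hurt the target.

    baseline_fitness: enemy fitness when no cannonball is fired
    candidates: iterable of (x, y, delay) tuples that could hit the target
    best_escape_fitness: callable returning the best fitness the enemy can
        still reach if a cannonball lands at that (x, y, delay)
    Returns (shot, gain), or (None, 0) when there are no candidates.
    """
    best, best_gain = None, 0
    for shot in candidates:
        gain = baseline_fitness - best_escape_fitness(shot)
        if best is None or gain > best_gain:
            best, best_gain = shot, gain
    return best, best_gain
```

A small gain means the enemy only has to adjust its course slightly; a gain close to the damage of a direct hit means the escape search found no way out.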

The problem (I think) is that in only three generations, a GA often wrongly thinks that a cannonball cannot be dodged.

TODO list

Overall there are many areas in which my bot is lacking.

- The prediction of what my opponent will do is atrocious (he can’t fire or mine)

- I mine randomly

- My shots are way off

- I still get stuck in walls sometimes

General Feedback

Thanks again to all the CG staff for this really nice contest. As much as I enjoy reverse-engineering, I think providing the referee code is a very big plus.

Still, there are a few points I think could be improved in the following contests:

- There was no place for low-efficiency languages here: I had to drop Python on day one in favor of C.

- Rules a bit overcomplicated: hex grid, collisions, three boxes per ship, ‘clunky’ move function, etc. I feel like some of it could have been removed. For example the fog of war was probably too much.

- Really hard to follow cannonballs on the replay screen.

- As marchete (brilliantly) pointed out, wood was really hard to pass.

- Legend boss arguably a bit too tough

See ya all at the next one!

