Feel free to send your feedback or ask for help here!

Don’t hesitate to check out the topic for the contest of the same name: https://www.codingame.com/forum/t/spring-challenge-2021-feedbacks-strategies/190849/22

Hi @Magus I’m trying to implement something similar to your contest approach (https://www.codingame.com/forum/t/spring-challenge-2021-feedbacks-strategies/190849/4)

I have several questions for you:

- I’ve implemented the MCTS and I’m using your rollout and expand strategies, but I have a very difficult time beating even the lower Gold league bots. How many MCTS iterations are you performing? I’m reaching around 24000 on the first turn and around 2400 on the second; does that sound acceptable?

- How do you parse the players’ actions from the game turn input in order to preserve the tree between turns? Whatever I try fails and I end up with a new tree each turn.

- Where do you use the MCTS evaluation you mentioned? Isn’t your rollout returning only WIN, LOST or DRAW?

My AI gives the number of expands+rollouts it did. So you can just play against my AI to have this information.

I just bruteforce all the possible moves of the previous turn. If I can’t find the corresponding node, it’s probably because my opponent played multiple moves (and I just waited). In this case, I just reset the whole tree.
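To make the tree-reuse idea above concrete, here is a minimal sketch, not Magus’s actual code. It assumes each node stores a hashable snapshot of the game state (the `state` field), that the tree is sequential (my move, then the opponent’s reply), and that `advance_root`, `my_move` and `observed_state` are hypothetical names introduced only for this example.

```python
from dataclasses import dataclass, field
from typing import Dict, Hashable


@dataclass(eq=False)
class Node:
    state: Hashable  # assumption: hashable snapshot of the board after the move
    children: Dict[str, "Node"] = field(default_factory=dict)  # move label -> child
    visits: int = 0


def advance_root(root: Node, my_move: str, observed_state: Hashable) -> Node:
    """Try to keep the subtree that matches what actually happened last turn."""
    after_mine = root.children.get(my_move)
    if after_mine is not None:
        # Brute-force the opponent replies that already exist in the tree.
        for child in after_mine.children.values():
            if child.state == observed_state:
                return child  # the statistics of this subtree are preserved
    # No match (e.g. the opponent played several moves while we waited): reset.
    return Node(state=observed_state)
```

If no stored child matches the state read from the turn input, the function falls back to a fresh root, which is the "reset the whole tree" case.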

At the end of a rollout, when the game is finished.

Thanks for answering, mate.

I’m currently in Gold League and I cannot choose you as an opponent, but I watched several of your latest battles and I think I found what I needed.

Excellent idea, I’ll rewrite my parsing algorithm.

So, you’re backpropagating this value up the nodes to the root, adding it to the evaluation of the nodes for the player who started the playout?

My back propagation is a classical MCTS back propagation. So yes, I back propagate the value to all nodes up to the root.
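For readers new to it, a classical backpropagation step can be sketched as below. This is only an illustration: the reward values (1, 0.5, 0 for win, draw, loss), the `owner` field and the function name are assumptions for the example, not details confirmed by Magus.

```python
from dataclasses import dataclass
from typing import Optional

WIN, DRAW, LOSS = 1.0, 0.5, 0.0  # assumed reward convention


@dataclass
class Node:
    owner: int  # assumption: the player (0 or 1) whose move led to this node
    parent: Optional["Node"] = None
    visits: int = 0
    value: float = 0.0


def backpropagate(leaf: Node, winner: Optional[int]) -> None:
    """Walk from the leaf up to the root; winner is 0, 1, or None for a draw."""
    node = leaf
    while node is not None:
        node.visits += 1
        if winner is None:
            node.value += DRAW
        elif winner == node.owner:
            node.value += WIN
        else:
            node.value += LOSS
        node = node.parent
```

Each node is credited from the point of view of the player who made the move into it, so selection at any level can simply maximise `value / visits`.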

Yeees, I’ve reached Legend. I just needed two and a half more weeks after the contest

Here I’ve described how I got to Gold during the contest. After that I had a couple of days left and I wanted to implement sequential MCTS, but the bugs kept stacking up and I couldn’t finish within the time frame of the contest. Still, I was determined to fulfil my ideas.

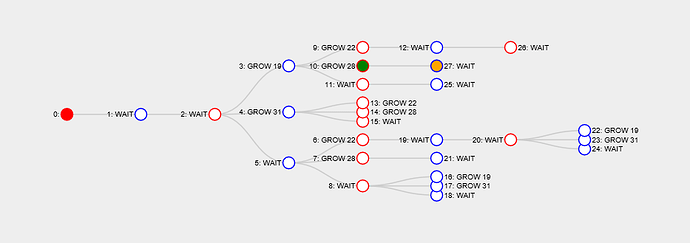

It was really hard for me to debug the big search tree, so I decided to invest several days to implement a visual debugger for the tree, using D3 js, which looks like this:

I’m backpropagating [+0; +0.5; +1] for lose, draw, win for the owners of the nodes:

This debugger allowed me to interactively observe each iteration for each turn of the MCTS algorithm:

This helped me to clear all bugs related to tree building.

I had a big problem preserving the tree between turns, but after @Magus gave me some advice (to try the moves for the children of the current root) I got it right.

The other big, nasty bug I fought for days was that I had started building the tree from the perspective of the opponent…

So in the end, apparently “little” things (and implementing a correct simulation) cost me most of the time, but hopefully I’ll sort these things out faster in the next contest.

The lessons I’ve learned on the path of reaching Legend:

- Clever pruning of moves (for expand and rollout) is very important; here I’ve used @Magus’s strategy again. Even though MCTS is advertised to work well with a big branching factor, to fit in the 100 ms time limit it’s best to lower that factor.

- Pure Monte Carlo simulation with UCB works really well (against Gold bots), and it can be used to debug the simulation and as a step towards implementing MCTS. In my opinion, though, MCTS is way better than MC+UCB.

- Sequential (classic) MCTS can work pretty well for simultaneous games.
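As an illustration of the "pure Monte Carlo with UCB" point above, here is a small sketch of flat Monte Carlo over the root moves using the standard UCB1 formula. The function name, the `rollout(move, rng)` callback and the exploration constant are assumptions made for this example; it is not the code from my bot.

```python
import math
import random


def flat_mc_ucb(moves, rollout, iterations, rng, c=math.sqrt(2)):
    """Pick root moves with UCB1 and score them with random rollouts.

    rollout(move, rng) must return a reward in [0, 1].
    Returns (most visited move, visit counts per move).
    """
    visits = {m: 0 for m in moves}
    total = {m: 0.0 for m in moves}
    for t in range(1, iterations + 1):
        untried = [m for m in moves if visits[m] == 0]
        if untried:
            move = untried[0]  # try every move once before using the formula
        else:
            move = max(
                moves,
                key=lambda m: total[m] / visits[m]
                + c * math.sqrt(math.log(t) / visits[m]),
            )
        total[move] += rollout(move, rng)
        visits[move] += 1
    return max(moves, key=lambda m: visits[m]), visits
```

Because there is no tree, only the simulation and the move generator can be wrong, which is what makes this variant handy for debugging before moving on to full MCTS.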

Great game

Legendary

Finally got to Legend too! After hard work… It was worth it

It was my second contest and my first MCTS implementation. Took me a lot of time to properly understand and implement the algorithm, I’ll share with you my journey to (almost) proper MCTS.

During the contest, I could only reach Silver league due to a lack of time. My simulation was:

- buggy

- slow (only 50 rollouts average in Kotlin, yes, don’t laugh)

- No opponent prediction on expands (only in rollouts, in a sequential form)

- No shadow computing

The slowness was mainly due to the fact that I was also learning Kotlin syntax and had the (very bad) idea of overusing the iterators’ fresh and attractive syntax.

Reaching top Gold

I managed to reach top Gold league afterwards with a bunch of optimizations :

- Get rid of all the filter / map / iterator shit and go back to old-fashioned but effective for loops and Arrays

- Optimized seeding with a precomputed neighboorMap

- Shadow computing

All of those boosted my average simulation count to 1K. That is not so much compared to what I read from others, but it was already a big jump for me.
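For anyone wondering what a precomputed neighbour map for seeding could look like, here is a rough sketch. The cell indexing produced by `build_hex_neighbors` is my own (an assumption; the game gives you its own neighbour indices in the input), and `precompute_in_range` is a hypothetical helper name.

```python
from collections import deque

# Axial hex directions; the order is an assumption, not the game's own.
DIRS = [(1, 0), (1, -1), (0, -1), (-1, 0), (-1, 1), (0, 1)]


def build_hex_neighbors(radius=3):
    """Build a hexagonal board (37 cells for radius 3) with -1 for missing neighbours."""
    coords = [(q, r) for q in range(-radius, radius + 1)
              for r in range(-radius, radius + 1) if abs(q + r) <= radius]
    index = {c: i for i, c in enumerate(coords)}
    neighbors = [[index.get((q + dq, r + dr), -1) for dq, dr in DIRS]
                 for q, r in coords]
    return neighbors, index


def precompute_in_range(neighbors, max_dist=3):
    """table[cell][d] lists the cells at exactly distance d (1..max_dist) from cell."""
    table = []
    for start in range(len(neighbors)):
        dist = {start: 0}
        rings = [[] for _ in range(max_dist + 1)]
        queue = deque([start])
        while queue:
            cur = queue.popleft()
            if dist[cur] == max_dist:
                continue  # do not explore beyond the maximum seeding range
            for n in neighbors[cur]:
                if n != -1 and n not in dist:
                    dist[n] = dist[cur] + 1
                    rings[dist[n]].append(n)
                    queue.append(n)
        table.append(rings)
    return table
```

The table is built once on the first turn; during simulations, the seed targets of a size-`s` tree are then just the concatenation of `table[cell][1..s]`, with no distance computation in the hot loop.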

Reaching Legend

What brought me to Legend was first fixing a SEED bug… (the opponent’s seed capacity in rollouts was based upon my trees…); after that, climbing the ladder was mainly down to my opponent prediction strategy

- SEED bug fix → ~200th

- Simple fact of doing a simultaneous action choice on rollout instead of sequential → ~160th

- Smoothing conditions for COMPLETE in both expands and rollouts (Decrease number of minimum required 3-sized trees over turns, instead of 4 until day 18) → ~120th

Getting to rank ~60th with decoupled MCTS Trees

My last push was finally including opponent prediction in expand actions. My first try, just picking a random possible action for each expand, was really bad and counter-productive. I thought about sequential MCTS, alternating my action and the opponent’s on each level, but with my performance already barely good enough, I wondered whether multiplying the depth by 2 would really be a good idea.

So, I wanted to keep a simultaneous approach and chose to build a tree for each player, where the selection and backpropagation phases are done in parallel and expands happen whenever needed on each side. I had to make some changes for this to work:

- It was no longer possible to associate a game state with a node, since the state is now shared between two trees, so I had to regenerate the state from the start and update it during the selection phase each time a pair of actions was selected (as if it were a rollout)

- I had to adapt the selection phase so that every pair of actions is legal and on the same depth level. This means that sometimes a node is fully expanded but I don’t go deeper, because the other tree still has unvisited nodes at that depth
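To give an idea of the decoupled selection, here is a heavily simplified sketch reduced to a single simultaneous decision: each player keeps its own statistics and picks with UCB1 without seeing the other’s choice, then both are updated from the joint outcome. The `Stats` class, the `payoff` matrix and the zero-sum reward (`1 - r` for the second player) are assumptions for the example; my real implementation has full trees and game states on top of this.

```python
import math


class Stats:
    """Per-player action statistics for one simultaneous decision point."""

    def __init__(self, n_actions):
        self.visits = [0] * n_actions
        self.total = [0.0] * n_actions

    def select(self, c=math.sqrt(2)):
        for action, v in enumerate(self.visits):
            if v == 0:
                return action  # visit every action once first
        n = sum(self.visits)
        return max(
            range(len(self.visits)),
            key=lambda a: self.total[a] / self.visits[a]
            + c * math.sqrt(math.log(n) / self.visits[a]),
        )

    def update(self, action, reward):
        self.visits[action] += 1
        self.total[action] += reward


def decoupled_search(payoff, iterations):
    """payoff[a][b] is player A's reward in [0, 1]; player B receives 1 - payoff[a][b]."""
    a_stats = Stats(len(payoff))
    b_stats = Stats(len(payoff[0]))
    for _ in range(iterations):
        a = a_stats.select()  # each side selects independently...
        b = b_stats.select()
        reward = payoff[a][b]  # ...and the pair of actions is played together
        a_stats.update(a, reward)
        b_stats.update(b, 1.0 - reward)
    return a_stats, b_stats
```

Keep in mind that decoupled selection like this has no general convergence guarantee in simultaneous games; it just worked well enough in practice for me.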

Gaining 60 ranks from that idea was really rewarding. I wish I had a better performing overall algorithm so I could compare with other top ranked MCTS bots, but I lack ideas on that side. Using Kotlin, my optimizations are of this kind:

- Using performant data class copy for my state

- I have a trees state array that holds 37 Ints; each Int is a bitmask of the possible states of a cell (1 for dormant, 2 for mine/his, 4 for size 0, etc…)

- I store the number of trees per size in the state to compute the possible actions faster

- I only use for loops and arrays, mutableLists where I can’t do otherwise

- I have precomputed neighbor/shadow maps
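The bitmask layout from the list above could be sketched roughly like this. Only the first three flags (1 = dormant, 2 = mine/his, 4 = size 0) come from my description; the remaining size bits, the meaning "bit set = mine", and all function names are assumptions made for the example.

```python
CELL_COUNT = 37
DORMANT = 1 << 0
MINE = 1 << 1  # assumption: bit set means my tree, bit clear means the opponent's
SIZE_BITS = (1 << 2, 1 << 3, 1 << 4, 1 << 5)  # sizes 0..3; bits above 4 are assumed
SIZE_MASK = sum(SIZE_BITS)


def new_board():
    return [0] * CELL_COUNT  # 0 means "no tree on this cell"


def place_tree(board, cell, size, mine):
    board[cell] = SIZE_BITS[size] | (MINE if mine else 0)


def grow(board, cell):
    size_bit = board[cell] & SIZE_MASK
    if size_bit == 0 or size_bit == SIZE_BITS[-1]:
        raise ValueError("no growable tree on this cell")
    # Shift the size flag to the next size and mark the tree dormant.
    board[cell] = (board[cell] & ~SIZE_MASK) | (size_bit << 1) | DORMANT


def wake_all(board):
    for cell in range(len(board)):
        board[cell] &= ~DORMANT  # new day: clear every dormant flag


def count_by_size(board, mine):
    counts = [0, 0, 0, 0]
    owner = MINE if mine else 0
    for value in board:
        if value & SIZE_MASK and (value & MINE) == owner:
            counts[(value & SIZE_MASK).bit_length() - 3] += 1
    return counts
```

In the real bot the per-size counts are stored and updated incrementally rather than recounted; `count_by_size` here only shows how they relate to the flags.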

If anyone has any advice for better performance in Kotlin/Java, I’d be pleased to read about it

@nmahoude maybe ?

Anyway, thanks Codingame for such a cool event, it was really pleasant!

I also used Kotlin and tried at some point during the contest to switch to C++ and get MCTS working, but didn’t manage to get better play than my pure-heuristics Kotlin bot, which barely made it to Legend. What I find a bit confusing now is that the code that did well during the challenge is now timing out every game - has something bad happened to the Kotlin performance on CG?

This was a very fun challenge

Why count Win/Draw/Loss? Values {0.0, -1.0, -2.0}? Don’t backpropagate 0.0 (Win): what is the most common value (P(Win)>50%)?

I’m sorry Mathkute, but I don’t understand the question.

What is the strategy for the Gold league? I grow up to 6-7 trees but it scores only 110 points at most. How can I improve that? I evaluate for complete:

score += (bonus[richn] + nutrients) x 10000000

for grow:

score = (g.trees_en[indt].size+1) x 1000000+g.cells[g.trees_en[indt].cell_index].richness x 1000;

for seed:

g.cells[index].richness x 10000

and 0 for wait.
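Written out as one function, the weights above look roughly like this. It is only a transcription for discussion: the `RICHNESS_BONUS` table stands in for `bonus[richn]` and its values are an assumption, as are the function and parameter names.

```python
# Assumption: stand-in for bonus[richn]; the values are not taken from the post.
RICHNESS_BONUS = {1: 0, 2: 2, 3: 4}


def score_action(kind, richness=0, nutrients=0, size=0):
    """Heuristic score of one action, using the weights quoted above."""
    if kind == "COMPLETE":
        return (RICHNESS_BONUS[richness] + nutrients) * 10_000_000
    if kind == "GROW":
        return (size + 1) * 1_000_000 + richness * 1_000
    if kind == "SEED":
        return richness * 10_000
    return 0  # WAIT
```

One thing the numbers make visible: as long as nutrients is at least 1, any COMPLETE scores 10,000,000 or more, while the best possible GROW (size 2 on richness 3) scores 3,003,000, so this evaluation will always prefer completing a tree over growing one.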

I use minimax at depth 7 with alpha-beta pruning. That gives my best result so far.

HI, @DiMAsta!!

I want to know: when you expand, are there 4 children (complete, grow, seed and wait), or more, like complete 1, complete 2, seed 2 3, seed 4 2, grow 5, grow 7?

Hi, I’m expanding in classical MCTS way, when I add children I choose one of them at random. Here is a gif of building my tree step by step:

How do you know the children: from the information given at each turn, or do you calculate them yourself?

I’m choosing several moves based on the current game state, and I try to use as few moves as possible, based on some heuristics. For each move I create a child.
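A rough sketch of that expand step: generate the moves from the simulated state, prune them, create one child per remaining move, then pick one child at random for the rollout. The pruning rule shown here (keep at most two SEED moves) is a made-up placeholder, not my real heuristic, and `prune_moves`, `expand` and `max_seeds` are hypothetical names.

```python
import random
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)
class Node:
    move: Optional[str] = None  # concrete action, e.g. "SEED 4 2" or "GROW 7"
    parent: Optional["Node"] = None
    children: List["Node"] = field(default_factory=list)
    visits: int = 0


def prune_moves(moves, max_seeds=2):
    """Placeholder heuristic: keep every non-SEED move and only a few SEED moves."""
    kept, seeds = [], 0
    for move in moves:
        if move.startswith("SEED"):
            if seeds >= max_seeds:
                continue
            seeds += 1
        kept.append(move)
    return kept


def expand(node, legal_moves, rng):
    """Create one child per pruned move and return a random child to roll out from."""
    for move in prune_moves(legal_moves):
        node.children.append(Node(move=move, parent=node))
    return rng.choice(node.children)
```

So the children are concrete actions (one node per "GROW 5", "SEED 2 3", and so on), computed by my own simulation rather than read from the turn input.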